The Hidden Language of Extremism: How Coded Terms Evade Social Media Moderation

When most people see the word “CAFE,” they think of coffee shops and hot beverages. But in certain corners of social media, these four letters carry a much darker meaning: “Camarada Arriba Falange Española” (“Comrade, Up with the Spanish Phalanx”) – a covert expression of support for Francisco Franco’s fascist regime.

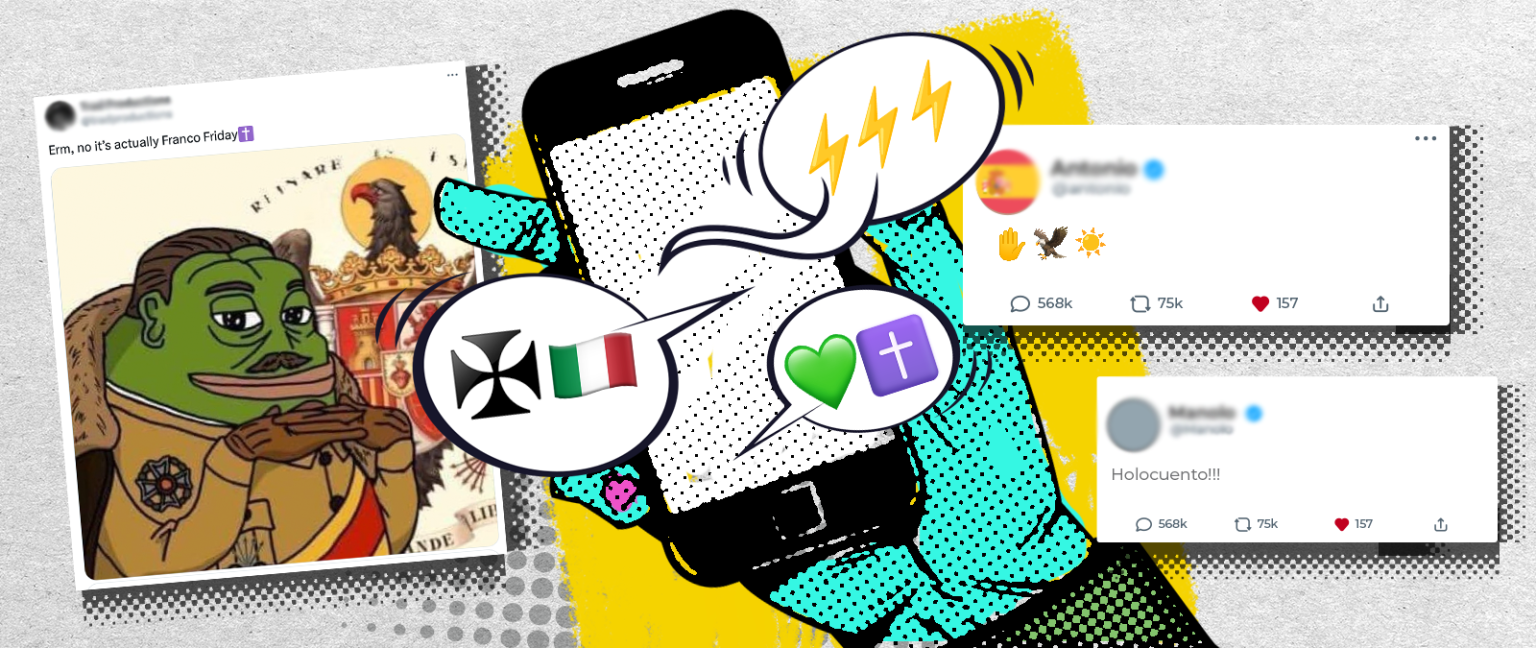

This linguistic sleight of hand is just one example of how extremist content continues to flourish online despite platform restrictions. From coded references to Hitler and Mussolini to hidden symbols and strategically deployed emojis, these tactics enable users to spread extremist ideologies while evading detection by content moderators.

“The difficulty in keeping up with such keywords lies in the fact that they are based on a constantly evolving lexical and ideological repertoire,” UNESCO told Maldita.es, which conducted a cross-border investigation with Italy’s Facta examining how fascist discourse infiltrates modern social media.

The researchers identified numerous coded terms used across platforms. In antisemitic circles, the number “1488” combines two white-supremacist symbols: the “Fourteen Words” slogan about protecting white children, and “88,” which stands for “Heil Hitler” (H being the eighth letter of the alphabet). Similarly, the innocent-sounding abbreviation “TJD” (“Have a totally joyful day”) masks its true meaning: “Total Jewish Death.”

Holocaust denial – despite overwhelming historical evidence – employs terms like “Holocuento” (“Holo-story”) or “Holoengaño” (“Holo-hoax”), while Anne Frank, the Jewish girl who documented her life in hiding, is mockingly referred to as “Ana Fraude.”

Hitler himself is referenced through coded terms like “Austrian painter,” “the mustachioed one,” or “Uncle A.” In Spain, supporters of Franco’s regime use “FF” for “Franco Fridays” to commemorate the dictator weekly. Similarly, Italian Mussolini enthusiasts employ nicknames like “Mascellone” (“big jaw”) or “Gran Babbo” (“big daddy”).

Beyond text, emojis serve as visual codes. The eagle (🦅) symbolizes Nazi Germany and Franco’s Spain, while the raised hand (✋) represents the fascist salute. Triple lightning bolts (⚡️⚡️⚡️) reference the SS, Hitler’s paramilitary organization that played a central role in the Holocaust.

Communication experts describe this phenomenon as a “dog whistle” – a message with dual meanings, harmless to outsiders but loaded with significance for insiders. This approach creates what UNESCO calls “plausible deniability,” allowing users to evade moderation systems by claiming innocence if questioned.

Memes represent another powerful vehicle for extremist content. By disguising radical ideas as humor, these visual elements can reach younger audiences who might otherwise reject overtly extremist messaging. “Is the humor really to spread ideology or is it to ridicule it? Those are completely different things,” notes Richard Kuchta, senior analyst at the Institute for Strategic Dialogue.

The notorious Pepe the Frog meme exemplifies this strategy. Originally a harmless cartoon character, Pepe was appropriated by far-right groups who depicted him with Nazi symbols and antisemitic messages. The meme eventually became so mainstream that even Donald Trump shared it during his 2016 presidential campaign.

Social platforms struggle to combat these evolving tactics. A 2020 analysis by the Institute for Strategic Dialogue found that Facebook’s algorithm actively promoted Holocaust-denial content, with at least 36 Facebook groups dedicated to denying the Jewish genocide reaching over 366,000 users. Another study revealed hundreds of TikTok accounts openly supporting Nazism.

Platform responses remain inconsistent. When searching for “Hitler” or “Nazi” on TikTok, users receive a warning and links to reliable Holocaust information. However, searches for “Francisco Franco” or “Francoism” trigger no such alerts. Similarly, the platform blocks “Kalergi Plan” in English but allows the Spanish equivalent “Plan Kalergi” to return multiple conspiracy videos.

Experts recommend several approaches to combat this problem. UNESCO suggests platforms add verification labels directing users to accurate information. It also advocates better training for content moderators, who may not recognize region-specific references like Spain’s “Blue Division” – Spanish volunteers who fought alongside Nazi Germany.

The European Union’s Digital Services Act holds platforms liable for illegal content once they’re informed of its presence. However, the cat-and-mouse game continues, with extremists constantly developing new codes and tactics to stay ahead of moderators.

“Whenever there is clearly illegal content, platforms are required to manage and remove it,” explains Kuchta. “But this does not affect freedom of expression, since we are talking about clearly illegal content.”

As digital spaces evolve, so too will the methods used to spread extremist ideologies. Recognizing these coded patterns is a crucial first step in preventing the normalization of harmful content across social media platforms.

10 Comments

Worrying to see how extremists are finding ways to bypass content moderation. We need more robust and nuanced approaches to tackle coded language and disinformation on social media.

Absolutely. Moderating nuanced and evolving language is a huge challenge, but critical to limit the spread of harmful ideologies.

This is a concerning trend. Disinformation and denialism undermine public discourse and can have real-world consequences. More must be done to address these issues.

Agreed. Platforms need to stay vigilant and adapt their tactics as extremist groups find new ways to evade detection.

The ability of extremists to subvert content moderation with coded language is very concerning. Platforms need to invest in more sophisticated tools and techniques to combat this.

Absolutely. Constant innovation and collaboration will be essential to stay ahead of evolving disinformation tactics.

The use of coded language to spread extremist views is very troubling. Platforms must work harder to identify and remove this kind of content. Transparency and collaboration will be key.

Definitely. Dealing with the constantly evolving tactics of bad actors is an ongoing challenge, but one that needs urgent attention.

This article highlights the insidious ways that fascist and extremist ideologies can infiltrate social media. We must remain vigilant and continue to call out these harmful narratives.

Agreed. Disinformation and denialism pose a serious threat to democracy and free discourse. Tackling this issue should be a top priority.