European Study Reveals TikTok Leads in Misinformation Prevalence Among Social Platforms

A groundbreaking study of online misinformation across Europe has found that approximately one in five posts users encounter on TikTok contains false or misleading information, the highest rate among major social media platforms.

Science Feedback, leading a consortium of European fact-checking organizations, released these findings on December 26, 2025, marking the first comprehensive, cross-platform measurement of disinformation across the European Union’s digital landscape.

The SIMODS project analyzed approximately 2.6 million posts with a combined 24 billion views across Facebook, Instagram, LinkedIn, TikTok, X/Twitter, and YouTube in France, Poland, Slovakia, and Spain. The research covered high-stakes topics including health, climate change, the Russia-Ukraine war, migration, and national politics.

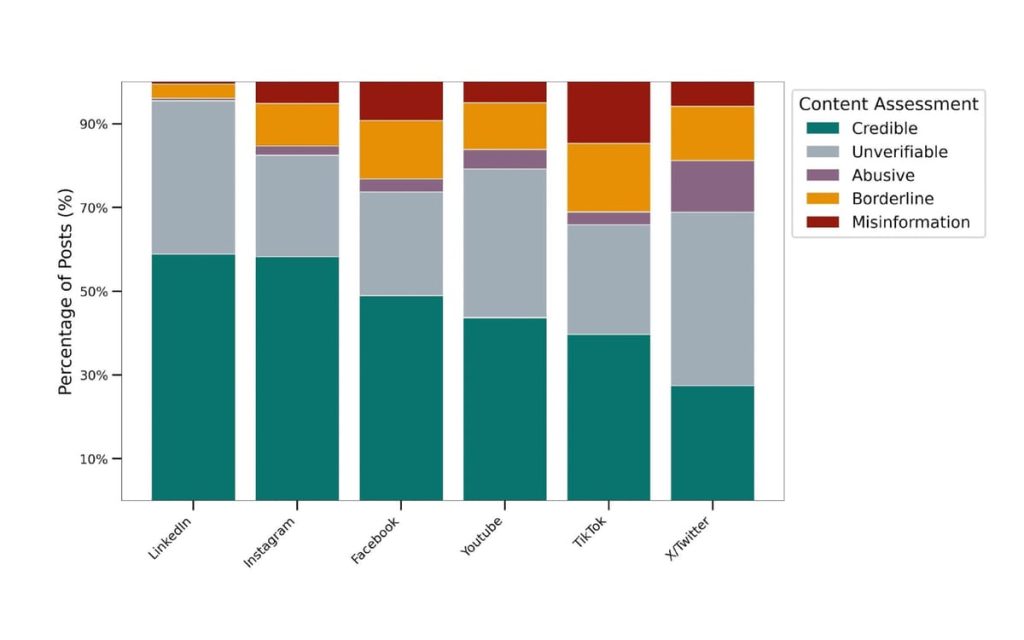

TikTok showed the highest misinformation prevalence at approximately 20% of exposure-weighted posts, followed by Facebook at 13% and X/Twitter at 11%. YouTube and Instagram registered around 8%, while LinkedIn demonstrated the lowest rate at just 2%.
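To make "exposure-weighted" concrete, here is a minimal sketch of one common way such a figure can be computed: each post counts in proportion to its views, so the result estimates the share of viewing exposure that goes to misleading posts rather than the share of posts. The `Post` type, the function name, and the sample numbers are illustrative assumptions, not the SIMODS project's actual data or method.

```python
from dataclasses import dataclass


@dataclass
class Post:
    views: int               # hypothetical view count for the post
    is_misinformation: bool  # hypothetical fact-checker label


def exposure_weighted_prevalence(posts):
    """Share of total views that went to posts labeled as misinformation."""
    total_views = sum(p.views for p in posts)
    if total_views == 0:
        return 0.0  # no exposure recorded, so no prevalence to report
    flagged_views = sum(p.views for p in posts if p.is_misinformation)
    return flagged_views / total_views


# Made-up sample: 1 of 4 posts is flagged (25% of posts),
# but it accounts for 3,000 of 15,000 views (20% of exposure).
sample = [
    Post(views=1000, is_misinformation=False),
    Post(views=3000, is_misinformation=True),
    Post(views=6000, is_misinformation=False),
    Post(views=5000, is_misinformation=False),
]
prevalence = exposure_weighted_prevalence(sample)
print(f"{prevalence:.0%}")
```

In this made-up sample the unweighted share of flagged posts is 25%, while the exposure-weighted share is 20%, which shows why the two measures can diverge.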

“This represents a significant methodological breakthrough in measuring online misinformation,” said Emmanuel Vincent, Science Feedback’s executive director, who led the consortium. “Previous attempts couldn’t construct reliable prevalence measures due to limited data collection scale.”

When researchers expanded their analysis to include “problematic” content—material that supports disinformation narratives without making verifiably false claims, plus hate speech—the numbers climbed significantly. Under this broader definition, TikTok and X/Twitter topped the list at 34% and 32% respectively, with Facebook following at 27%.

The findings come at a critical juncture as many platforms have scaled back their anti-disinformation efforts since early 2025, reducing fact-checking programs and related teams, often citing political pressures in the United States as justification.

Particularly concerning was the discovery that accounts repeatedly sharing misinformation attract substantially more engagement per follower than credible sources on nearly all platforms. YouTube showed the most pronounced disparity, with low-credibility channels receiving approximately eight times more engagement than high-credibility channels per 1,000 followers. Facebook followed at seven times, while Instagram and X/Twitter exhibited ratios of approximately five times.

“This indicates systematic amplification advantages for recurrent misinformers,” the report states, suggesting platform algorithms and user behavior patterns combine to reward misleading content with disproportionate reach.
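The per-follower comparison can be illustrated with simple arithmetic. The sketch below normalizes interactions to a rate per 1,000 followers and then takes the ratio between two accounts. The function name and all figures are hypothetical, chosen only so the ratio comes out at eight; they are not taken from the report.

```python
def engagement_per_1000_followers(interactions, followers):
    """Interactions normalized to a rate per 1,000 followers."""
    if followers <= 0:
        raise ValueError("followers must be positive")
    return interactions * 1000 / followers


# Hypothetical accounts: the smaller, low-credibility one draws more
# engagement relative to its audience size.
low_cred_rate = engagement_per_1000_followers(interactions=4000, followers=50_000)
high_cred_rate = engagement_per_1000_followers(interactions=2000, followers=200_000)

ratio = low_cred_rate / high_cred_rate
print(low_cred_rate, high_cred_rate, ratio)
```

Normalizing by follower count is what lets accounts of very different sizes be compared: the hypothetical low-credibility account has a quarter of the followers but twice the raw interactions, giving an eightfold rate difference.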

Health misinformation dominated across platforms, representing 43.4% of all misinformation posts identified. Content about the Russia-Ukraine war accounted for approximately 24.5%, followed by national politics at 15.5%, with climate change and migration each representing 6.6%.

The research coincides with significant regulatory developments in Europe. In February 2025, the European Commission integrated the Code of Practice on Disinformation into the Digital Services Act framework, creating the Code of Conduct on Disinformation. As of July 1, 2025, this Code became operational under the DSA with formal auditing and compliance mechanisms.

Despite these regulatory advancements, researchers faced substantial obstacles obtaining platform data. The team invoked DSA Article 40(12) in December 2024, requesting random samples of 200,000 posts per language from each platform. Only LinkedIn fully complied with this request. TikTok granted API access in March 2025, too late for inclusion in the first wave. Meta and YouTube did not respond to requests, while X/Twitter denied the application, stating the project didn’t meet regulatory requirements.

The study also revealed gaps in platform efforts to prevent monetization by misinformation sources. On YouTube, approximately 76% of eligible low-credibility channels showed signs of monetization, nearly the same share as the 79% of high-credibility channels. Google Display Ads appeared on 27% of low-credibility websites, compared with 70% of high-credibility sites.

“Platforms must reduce the spread and impact of misleading content and avoid incentivizing it financially,” the report states. “Our results show that misleading content remains prevalent, recurrent misinformers benefit from persistent engagement premiums, and demonetization efforts are not fully operational.”

The consortium plans to publish a second measurement in early 2026 to track changes over time, hoping for improved platform cooperation as DSA enforcement mechanisms mature. The research represents a critical shift toward evidence-based platform regulation in Europe, providing comparable, scientifically sound measurements to inform policy debates previously dominated by assertions rather than systematic evidence.

As digital platforms continue evolving their content moderation approaches, such independent measurement becomes increasingly vital for protecting users’ rights to accurate information and holding platforms accountable under European regulatory frameworks.
