New Study Reveals Effective Strategies to Combat Misinformation on Social Media

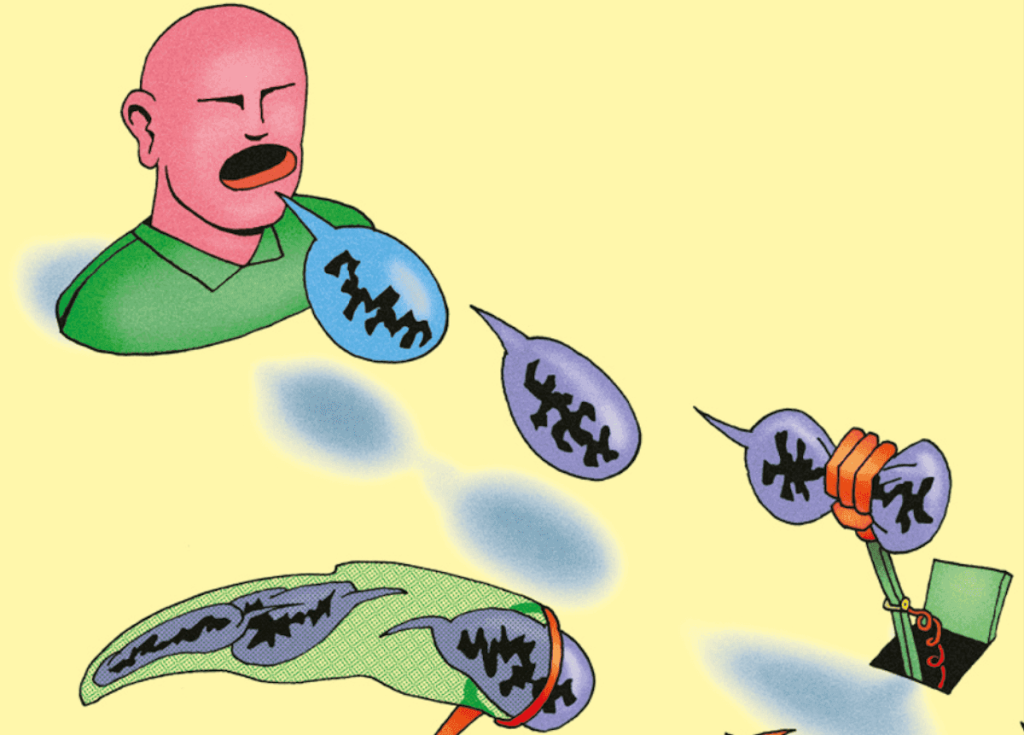

A recent empirical study suggests that encouraging internet users to think before sharing content could be a cost-effective way to reduce the spread of misinformation online. The research, conducted during the 2022 U.S. midterm elections, found that simple interventions, particularly those that activate users’ concerns about their reputation, can significantly decrease the sharing of false information while potentially increasing the circulation of factual content.

The study, co-authored by Émeric Henry, head of the Sciences Po Department of Economics, tested various approaches with 3,501 American Twitter (now X) users who were shown political news tweets, some containing misinformation and some containing factual information.

“A delicate balance needs to be struck between combating false information and protecting freedom of expression,” note the researchers, highlighting the regulatory challenges faced by governments and platforms. While the European Union has introduced the Digital Services Act (DSA) to regulate platforms, its current focus remains primarily on illegal content rather than political misinformation.

The research team, including Sergei Guriev, Émeric Henry, Theo Marquis, and Ekaterina Zhuravskaya, divided participants into four groups to evaluate different intervention strategies. The control group received no special instructions, while three test groups experienced different treatments: requiring an extra click before sharing, displaying a “nudge” message reminding users about the prevalence of fake news, or offering fact-checking information.

All three interventions reduced the sharing of false information compared to the control group, where 28 percent of participants shared misinformation. Requiring an extra click reduced false information sharing by 3.6 percentage points, the nudge message by 11.5 points, and fact-checking by 13.6 points.

However, the treatments had markedly different effects on the sharing of truthful content. While requiring an extra click showed negligible impact on sharing accurate information, the fact-checking approach actually decreased truthful sharing by 7.8 percentage points from the control group’s 30 percent baseline. Most notably, the nudge message encouraging users to think carefully increased the sharing of factual content by 8.1 percentage points.
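The percentage-point changes above can be turned into implied sharing rates for each group. The following is a minimal sketch of that arithmetic, not the researchers' own analysis. The names `EFFECTS` and `implied_rates` are illustrative, and the extra-click effect on truthful content is set to 0.0 as an assumption, since the article describes it only as negligible.

```python
# Control-group sharing rates reported in the article (percent of participants).
CONTROL_FALSE = 28.0
CONTROL_TRUE = 30.0

# Reported changes in percentage points relative to control: (false, true).
# The extra-click effect on true content is only described as "negligible",
# so 0.0 here is an assumption, not a reported figure.
EFFECTS = {
    "extra_click": (-3.6, 0.0),
    "nudge": (-11.5, 8.1),
    "fact_check": (-13.6, -7.8),
}


def implied_rates(effects=EFFECTS):
    """Return {treatment: (false_rate, true_rate)} in percent."""
    return {
        name: (
            round(CONTROL_FALSE + d_false, 1),
            round(CONTROL_TRUE + d_true, 1),
        )
        for name, (d_false, d_true) in effects.items()
    }


if __name__ == "__main__":
    for name, (false_rate, true_rate) in implied_rates().items():
        print(f"{name}: false {false_rate}%, true {true_rate}%")
```

Under these assumptions, the nudge group would share false content at about 16.5 percent and truthful content at about 38.1 percent, while the fact-checking group would share both less often (about 14.4 and 22.2 percent).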

The researchers identified three key mechanisms that influence sharing behavior: updating beliefs about content veracity, increasing the importance of reputational concerns, and changing the cost of sharing. Surprisingly, changing users’ beliefs about the accuracy of information, the channel that treatments such as fact-checking are designed to work through, had minimal impact compared to heightening reputational concerns.

“The desire of individuals not to appear ill-informed in the eyes of their audience, thereby damaging their reputation, could be an effective lever,” the researchers explain. This reputational mechanism helps explain why interventions prompting users to consider the consequences of sharing false information were the most effective at reducing misinformation while also boosting the sharing of factual content.

The study suggests that algorithmic fact-checking might be more efficient than professional human verification, as it can intervene earlier in the sharing process and at lower cost, despite being potentially less accurate. “As a result, despite involving significant investment, fact-checking by professional verifiers could be less effective than fact-checking by an algorithm, which is faster and less costly, but more prone to error,” the authors note.

These findings have significant implications for social media platforms and policymakers seeking cost-effective strategies to combat misinformation. While long-term solutions like digital literacy education remain essential, the study demonstrates that simple nudges leveraging users’ reputational concerns can yield immediate benefits, particularly during sensitive periods like election campaigns.

The research also reveals an interesting complementary effect between short-term interventions and long-term education: users concerned about their reputation are less likely to spread misinformation if they believe their audience is more alert to false content due to better education.

As social media continues to serve as a primary information source for many users, these insights offer promising approaches to improve information integrity without heavy-handed regulation or excessive content moderation that might impinge on freedom of expression.

12 Comments

Misinformation is a complex challenge without easy solutions. This study offers some intriguing ideas, but the implementation details and unintended impacts will be crucial.

Agreed, the balance between content moderation and free speech is a delicate one that regulators will have to navigate carefully.

The spread of false information online can have serious consequences. I’m glad to see researchers exploring ways to counter this issue while respecting free expression.

Curious to see how the EU’s Digital Services Act will approach the regulation of political misinformation versus illegal content.

Combating misinformation online is a complex challenge. Strategies that activate users’ reputational concerns could be a cost-effective approach to reducing the spread of false information. Regulators will need to carefully balance content moderation with protecting free expression.

Interesting study findings. Platforms and governments must find the right balance between limiting misinformation and preserving open discourse.

Fact-checking and reputation-based approaches seem like promising strategies to address misinformation. It will be important to ensure these interventions don’t unfairly impact certain groups or viewpoints.

Agreed, any content moderation efforts must be carefully implemented to uphold democratic principles and avoid censorship.

Misinformation on social media is a growing concern, especially around political issues. I’m curious to learn more about the specific interventions tested in this study and their effectiveness.

Yes, the regulatory challenges are significant. Striking the right balance between content moderation and free speech will be critical.

Interesting findings, though the long-term effectiveness of reputation-based interventions remains to be seen. Ongoing research and stakeholder collaboration will be key to addressing this issue.

Absolutely, misinformation is a multi-faceted problem that will require a nuanced, adaptable approach from platforms, policymakers, and the public.