In the quiet Louisiana town of Thibodaux, a disturbing case of AI-generated nude images has revealed the frightening intersection of artificial intelligence and teenage bullying, leaving school administrators scrambling to respond to a technology they weren’t prepared to face.

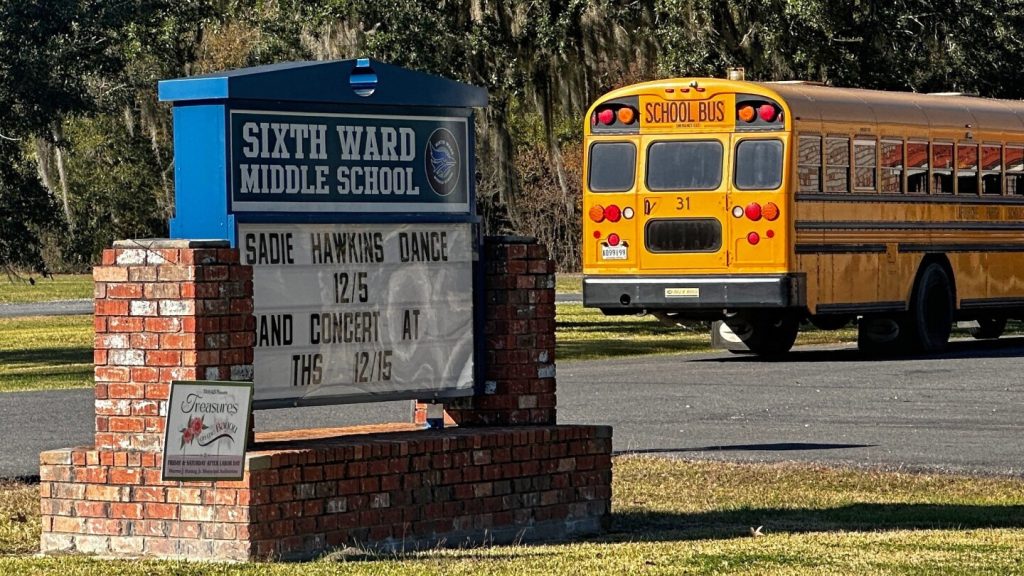

The ordeal began when several eighth-grade girls at Sixth Ward Middle School discovered that explicit, fake nude images of themselves were circulating among classmates on Snapchat. A 13-year-old girl and her friends immediately sought help from their school guidance counselor and a sheriff’s deputy assigned to the school, but the ephemeral nature of Snapchat messages made evidence difficult to capture.

“Kids lie a lot,” the school principal, Danielle Coriell, would later say at a disciplinary hearing, expressing skepticism about the situation. “They blow lots of things out of proportion on a daily basis.”

But for the victims, the harassment was painfully real. While adults questioned the existence of the images, the bullying continued unabated throughout the school day.

“I went the whole day with getting bullied and getting made fun of about my body,” the 13-year-old girl later testified. When she boarded the school bus that afternoon and saw a classmate showing one of the AI-generated nudes to another student, her frustration boiled over. She attacked the boy, encouraging others to join her.

The consequences were swift and severe – but only for her. She was removed from Sixth Ward Middle School for more than 10 weeks and sent to an alternative school. According to her attorneys, the boy suspected of sharing the images faced no school discipline.

Law enforcement eventually took a different approach. The Lafourche Parish Sheriff’s Department charged two boys with violating Louisiana’s new law against disseminating AI-generated explicit images – a crime that carries serious penalties. The investigation ultimately uncovered fake nude images of eight female middle school students and two adults.

The case highlights how unprepared many schools are for the emerging threat of AI deepfakes. While educational institutions across America are developing policies on artificial intelligence in the classroom, few have updated their cyberbullying protocols to address this powerful new technology.

“When we ignore the digital harm, the only moment that becomes visible is when the victim finally breaks,” explained Sergio Alexander, a research associate at Texas Christian University who specializes in emerging technology.

The Lafourche Parish School District had only begun developing AI policies, and its cyberbullying training dated back to 2018 – well before the recent explosion in accessible AI image generation tools that can easily create convincing fake nudes from ordinary photos pulled from social media.

“Most schools are just kind of burying their heads in the sand, hoping that this isn’t happening,” said Sameer Hinduja, co-director of the Cyberbullying Research Center and professor of criminology at Florida Atlantic University.

For the 13-year-old at the center of this case, the impact was devastating. Her father, Joseph Daniels, described how she began skipping meals and struggling with depression after being sent to the alternative school. She stopped completing schoolwork and required therapy for anxiety and depression.

“She just felt like she was victimized multiple times – by the pictures and by the school not believing her and by them putting her on a bus and then expelling her for her actions,” Daniels explained.

Research shows that suspensions and expulsions can derail a student’s educational trajectory. Suspended students often become disconnected from their peers, disengaged from school, and face lower grades and graduation rates.

After seven weeks and multiple hearings, the school board finally allowed her to return to campus, though under strict probation terms that prohibited participation in extracurricular activities, including basketball – a sport she had hoped to play.

“I was hoping she would make great friends, they would go to the high school together and, you know, it’d keep everybody out of trouble on the right tracks,” her father said. “I think they ruined that.”

The case illustrates the complex challenges schools face in the AI era, where technology evolves faster than policies can adapt. One of her attorneys argued before the school board, “She is a victim.” Superintendent Jarod Martin responded, “Sometimes in life we can be both victims and perpetrators.”

This emerging crisis of AI-generated explicit imagery targeting minors presents educators, law enforcement, and policymakers with urgent questions about how to protect vulnerable students while adapting discipline systems for technological threats that hardly existed just a few years ago.
