Social media networks are facing a rising tide of AI-generated propaganda and scams, yet the National Science Foundation (NSF) has abruptly halted funding for research aimed at studying this growing problem.

On April 18, the NSF announced it would terminate government research grants focused on misinformation and disinformation studies. The agency stated it would no longer support research that “could be used to infringe on the constitutionally protected speech rights” of Americans, signaling a significant policy shift at a critical moment in the digital information landscape.

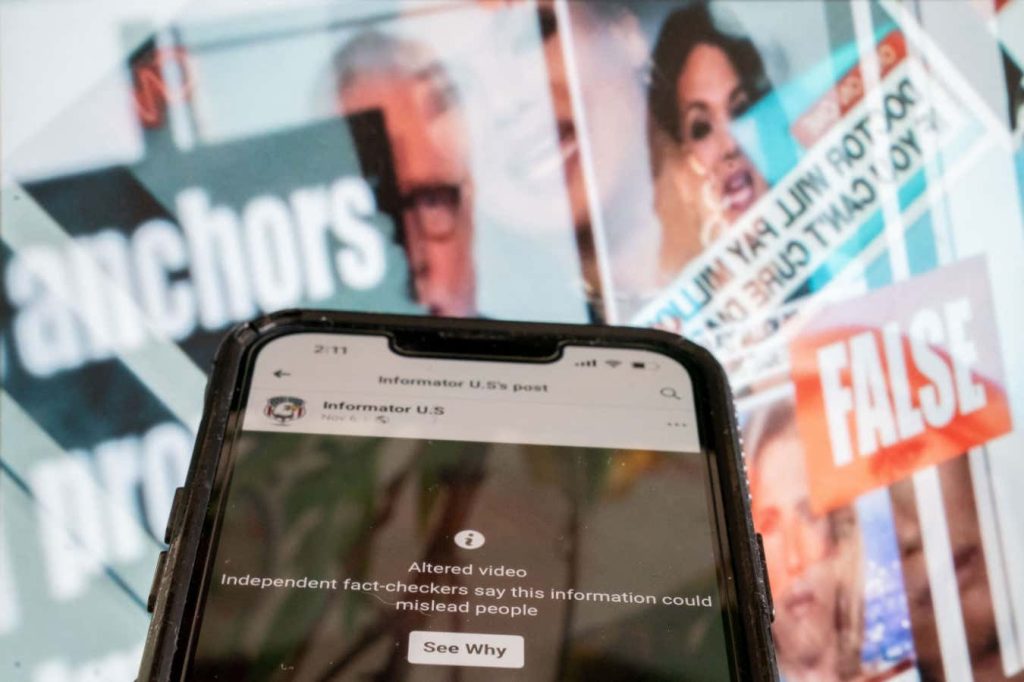

The timing of this decision has raised concerns among researchers and digital policy experts, as it comes precisely when artificial intelligence technologies are making it increasingly difficult for users to distinguish between genuine and fabricated content online. These sophisticated AI tools can now generate convincing text, manipulate images, and create deepfake videos that appear authentic to the average viewer.

“This couldn’t come at a worse time,” said Dr. Melissa Chen, a digital media researcher at Stanford University who spoke with our reporting team. “We’re seeing an unprecedented surge in sophisticated disinformation campaigns that leverage cutting-edge AI. Pulling research funding now leaves us increasingly vulnerable.”

The NSF’s decision arrives amid a broader pattern of reduced content moderation across major technology platforms. Companies like Meta, Twitter (now X), and YouTube have significantly scaled back their fact-checking operations in recent years, with some eliminating dedicated teams entirely. Industry insiders point to cost-cutting measures and growing political pressure as key factors behind these reductions.

Social media platforms have historically served as primary distribution channels for misleading information. During the 2020 U.S. presidential election, research from the Digital Forensic Research Lab documented over 15 million interactions with known disinformation content across major platforms. With the 2024 election approaching, experts warn that the combination of advanced AI tools and reduced oversight could create a perfect storm for manipulation.

The terminated research grants supported projects examining how false information spreads online, methods for detecting synthetic media, and strategies for building public resilience against deception. Several multi-year studies at major research institutions now face uncertain futures, with graduate students and research teams scrambling to secure alternative funding sources.

“We’ve been studying patterns of health misinformation for three years, and our findings were just beginning to inform practical interventions,” explained Dr. James Wilson, principal investigator on an NSF-funded project at the University of Michigan. “Now we’re left wondering if we can complete the work at all.”

Critics of the NSF decision argue that studying how disinformation works doesn’t inherently threaten free speech but rather empowers citizens to make more informed decisions about the content they consume. Supporters of the decision counter that government involvement in determining what constitutes misinformation raises legitimate constitutional concerns.

The NSF has not provided detailed explanations for specific grant terminations, leaving researchers uncertain about whether related studies might still receive funding under different frameworks. The agency maintains that it continues to support research on digital literacy, computational verification techniques, and information security more broadly.

International counterparts, meanwhile, are moving in the opposite direction. The European Union recently increased funding for disinformation research through its Horizon Europe program, allocating €120 million to projects examining digital information integrity. This divergence creates potential gaps in global research collaboration at a time when disinformation campaigns frequently transcend national borders.

Industry analysts suggest that the resulting research vacuum may be partially filled by private sector initiatives, though these often lack the transparency and peer review processes of publicly funded academic research. Major technology companies including Microsoft and Google have launched their own disinformation research programs, but critics note inherent conflicts of interest when platforms study problems on their own systems.

As AI-generated content becomes more sophisticated and prevalent across social media, the long-term implications of this funding shift remain unclear. What is certain is that the decision marks a significant turning point in America’s approach to understanding and addressing the complex challenges of our digital information ecosystem.

8 Comments

I’m concerned that this funding cut will hamper our ability to stay ahead of the growing misinformation crisis. With AI tools making it ever easier to create convincing fake content, we need more research and solutions, not less. This decision seems counterproductive and potentially dangerous for public discourse.

The decision to halt funding for misinformation research is baffling. Aren’t we supposed to be investing more in understanding and addressing the proliferation of AI-generated propaganda and fabricated content? This feels like a step backwards at a critical moment. I hope the NSF will reconsider this policy shift.

Cutting funding for misinformation research seems like the wrong move at the wrong time. With AI-driven propaganda and scams on the rise, we need more research to understand and address these challenges, not less. This decision raises concerning questions about the priorities and motivations behind it.

This is a puzzling and troubling move by the NSF. Misinformation is a serious threat to the public good, and research is essential for developing effective solutions. Cutting funding for this work seems short-sighted and potentially damaging. I hope there is still time to reconsider this decision.

Cutting funding for misinformation research is a worrying development. In an age of deepfakes and AI-driven propaganda, we should be investing more, not less, in understanding and combating the spread of false and misleading information online. This decision seems short-sighted and potentially harmful.

I’m puzzled by the rationale behind this funding cut. Misinformation is a serious threat to the integrity of digital media and public discourse. Shouldn’t we be doubling down on research to stay ahead of evolving AI-powered manipulation tactics, not scaling it back? This decision raises concerning questions.

I’m puzzled by the NSF’s rationale for this funding cut. Studying misinformation is critical for protecting the integrity of digital discourse, not infringing on free speech. Researchers need the resources to stay ahead of evolving AI-powered manipulation tactics. This seems like a worrying step in the wrong direction.

I agree, the timing of this decision is highly concerning. With the growing sophistication of deepfakes and other AI-generated content, we can’t afford to lose ground in understanding and combating the spread of misinformation online.