Ukraine Warns of Escalating AI-Generated Disinformation Campaign

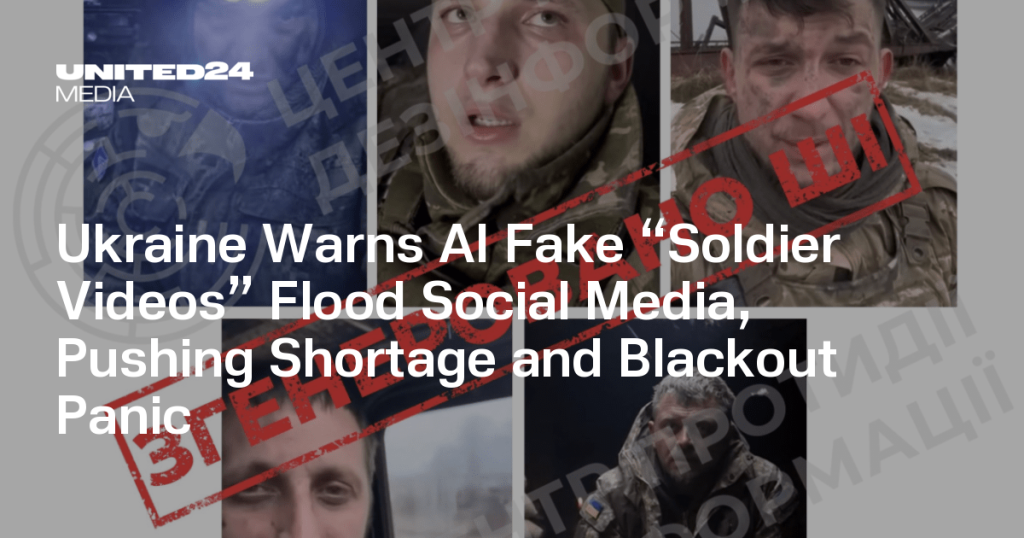

Ukraine’s Center for Countering Disinformation has issued an urgent warning about a persistent wave of sophisticated AI-generated fake videos circulating across social media platforms. These fabricated clips impersonate Ukrainian soldiers and aim to erode public morale and support for the country’s ongoing defense.

According to a statement released by the center on January 19, the fake videos deliberately amplify false claims about critical shortages in food, military equipment, and logistical support for Ukrainian forces. The center emphasized that these fabricated testimonials exploit public anxieties, particularly around electricity outages that have affected civilian populations.

“These videos push dangerous narratives about the ‘futility’ of resistance and promote a false sense of hopelessness about Ukraine’s defense capabilities,” said a spokesperson from the center. “They’re specifically designed to undermine morale and weaken public resolve.”

Intelligence officials noted that the disinformation campaign employs advanced AI technology to create increasingly convincing content. The videos feature synthesized voices, staged emotional appeals, and realistic visual elements that make them difficult to immediately identify as fake. This heightened realism allows the content to spread rapidly through social media algorithms before fact-checkers can intervene.

The center’s analysis suggests that Russian propaganda operations are behind the campaign, leveraging AI tools to produce and distribute misleading content at an unprecedented scale with relatively limited resources. This represents a significant evolution in information warfare tactics that poses new challenges for media literacy and national security.

“The technological sophistication of these fakes has increased dramatically,” explained a cybersecurity expert familiar with the situation. “What would have required a professional studio and significant resources just a few years ago can now be accomplished using widely available AI tools, making disinformation campaigns more accessible and harder to trace to their source.”

This latest wave follows several earlier disinformation operations documented by Ukrainian authorities. In November 2025, the center published a comprehensive analytical report detailing how AI-generated videos have been systematically used to discredit Ukraine’s defense forces. Earlier campaigns included fabricated frontline appeals and false claims about military mobilization.

A particularly concerning example identified by the center involved AI-generated videos circulated on TikTok that falsely portrayed Ukrainian troops surrendering en masse near the strategically important city of Pokrovsk. These videos were designed to create the impression that Ukraine is losing ground and that international support for the country’s defense is futile.

Media literacy experts warn that the increasing quality of AI-generated content presents a significant challenge for societies worldwide, not just in conflict zones. “The line between authentic and fabricated content continues to blur,” said Dr. Maria Kowalski, a disinformation researcher at the European Digital Rights Institute. “Without proper verification tools and heightened public awareness, these campaigns will become increasingly effective.”

Ukrainian officials are urging social media platforms to enhance their detection capabilities and implement stronger measures against such content. They also emphasize the importance of public education about digital literacy and the verification of sources before sharing emotional content online.

The timing of this disinformation campaign coincides with critical developments in international support packages for Ukraine and important military operations in eastern regions, suggesting a strategic attempt to influence both domestic and international opinion at a pivotal moment in the conflict.

Security analysts note that as AI technology continues to evolve, the sophistication of such disinformation campaigns is likely to increase, highlighting the need for continued vigilance and investment in counter-disinformation capabilities across both government and civil society sectors.