Russian Disinformation Campaign Intensifies on X Platform Ahead of German Election

A sophisticated Russian disinformation operation targeting Germany has dramatically escalated in recent weeks, with a particular focus on Elon Musk’s X platform, according to a report reviewed by POLITICO.

The analysis reveals an alarming spike in coordinated posts from ghost accounts: daily activity surged from fewer than 50 posts throughout November and December to more than 3,000 in a single day by late January. Experts describe this pattern as an “overload” technique, a hallmark of Russian influence operations that floods social media with waves of content to create an artificial appearance of viral momentum.

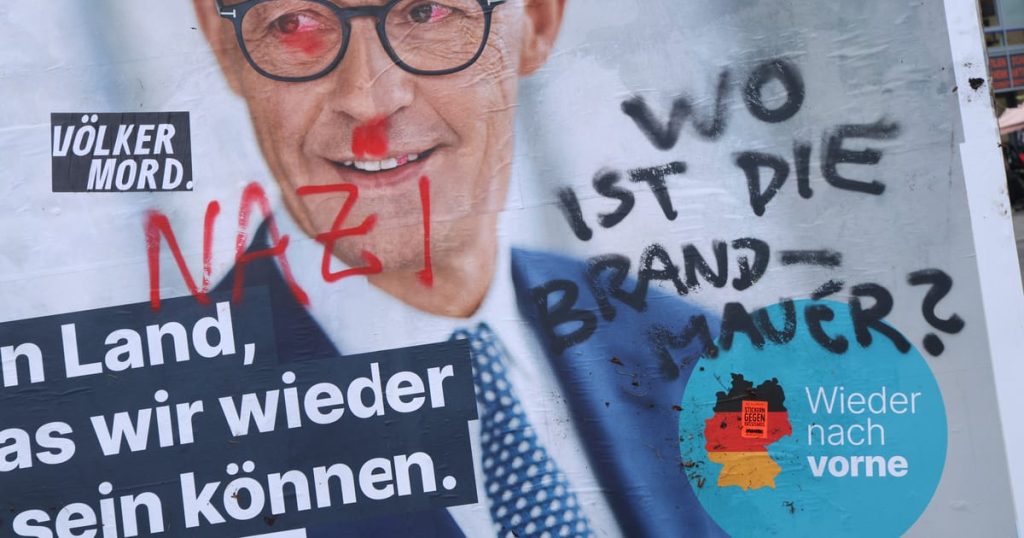

The timing of this disinformation surge appears deliberately calibrated to influence Germany’s upcoming election, where the Russia-friendly, far-right Alternative for Germany (AfD) party currently holds second place in opinion polls. The AfD has notably received public support from X owner Elon Musk, adding another layer of complexity to the platform’s central role in this influence operation.

Content analysis shows the campaign systematically targets Germany’s support for Ukraine, pushing narratives that Berlin prioritizes aid to Kyiv at the expense of German citizens. One documented example involves a fabricated corruption scandal linking German Economy Minister Robert Habeck to an unnamed “Ukrainian Culture Minister.” This false story first appeared on an obscure website in late January before being rapidly amplified by a network of coordinated accounts on X, generating hundreds of retweets within minutes of publication.

“The pattern is unmistakable,” said a cybersecurity expert familiar with the report who requested anonymity. “These aren’t organic conversations but carefully orchestrated information operations designed to manipulate public opinion during a critical election period.”

Technical analysis suggests a high degree of automation behind these operations. Researchers identified accounts publishing content at precisely timed intervals, a telltale sign of bot networks rather than genuine human engagement. The German fact-checking organization Correctiv independently confirmed the automated nature of many of these posts in its own investigation.

The German government has recognized the severity of the threat and is stepping up its counter-disinformation efforts, including sharing intelligence with international partners and considering sanctions against entities connected to the campaign. Officials are also weighing whether to publicly attribute these networks in order to expose the actors behind them.

“We are working on a ‘cultural shift’ within the Foreign Ministry,” a German official told POLITICO, describing growing institutional awareness of cyberthreats. “Ambassadors are becoming more vocal in their host countries. This ensures that when disinformation spreads, they have the credibility and networks to set the record straight.”

The escalation comes amid broader concerns about election interference across Europe and follows similar patterns observed during previous electoral contests in France, the United Kingdom, and other NATO countries. Security analysts note that the sophistication of these operations has increased, with better content localization and more convincing narratives tailored to specific national concerns.

The German case is particularly significant given the country’s economic and political influence within the European Union and its role as one of Ukraine’s most substantial military and financial supporters. Undermining German resolve could have far-reaching implications for European unity regarding Russia’s ongoing war against Ukraine.

As election day approaches, German officials face the challenge of countering this disinformation without amplifying its reach or undermining democratic discourse. The government’s response balances technical countermeasures with public awareness campaigns designed to help voters identify misleading content.

Tech platforms, particularly X, face mounting pressure to address their role in these influence operations as evidence grows that foreign actors may be exploiting their systems to manipulate democratic processes.
