
BERT-LSTM Hybrid Model Shows Promise in Early Misinformation Detection

A new study of misinformation on mobile social networks suggests that combining two complementary language-processing techniques could significantly improve detection, even at the earliest stages of a post's spread.

Researchers have developed a hybrid model that combines BERT (Bidirectional Encoder Representations from Transformers) with LSTM (Long Short-Term Memory) networks, aiming to identify false information more accurately before it gains traction online.

The research focuses on three questions: how the BERT-LSTM hybrid performs compared with traditional machine learning and other deep learning models; whether it can detect misinformation early without relying on user engagement metrics; and how it might serve as an educational tool for digital literacy.

“The combination of BERT—known for contextual understanding—and LSTM—adept at handling sequential data—creates a powerful system that captures both semantic nuances and temporal patterns often missed by traditional models,” notes the research team.

Early detection is especially crucial in mobile environments where misinformation spreads rapidly. Unlike conventional approaches that frequently depend on user engagement metrics such as likes, shares, and comments, the hybrid model aims to identify false content based solely on textual analysis.

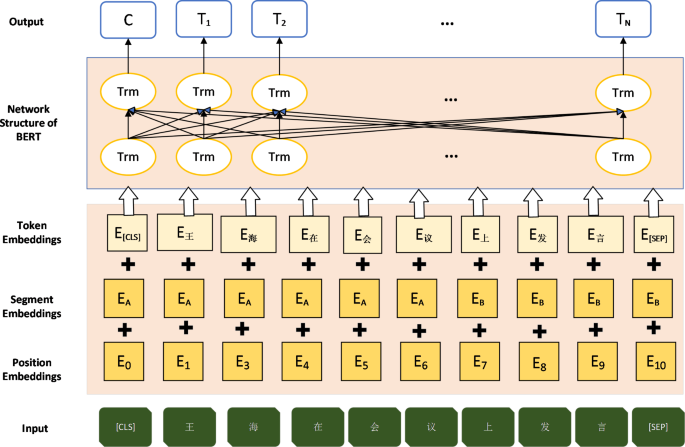

The BERT component is built on the transformer architecture, whose self-attention mechanism processes all tokens of an input sequence in parallel and captures the contextual relationships between words. BERT is pre-trained on two tasks, Masked Language Modeling (MLM) and Next Sentence Prediction (NSP). MLM, which asks the model to recover hidden words from the text on both sides of them, is what gives BERT its bidirectional understanding of context; NSP teaches it relationships between pairs of sentences.
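To make the self-attention step concrete, here is a minimal single-head sketch in Python with NumPy. It illustrates the general mechanism and is not the study's code: the projection matrices are random rather than learned, the sizes are arbitrary, and real BERT layers add multiple heads, residual connections, layer normalization and a feed-forward sublayer. Because no causal mask is applied, every token attends to tokens on both sides of it.

```python
import numpy as np

def softmax(x: np.ndarray, axis: int = -1) -> np.ndarray:
    # Subtract the row max before exponentiating for numerical stability.
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, W_q, W_k, W_v):
    """Single-head scaled dot-product self-attention.

    X: (seq_len, d_model) token embeddings.
    W_q, W_k, W_v: (d_model, d_k) projections (random here; learned in BERT).
    Returns (output of shape (seq_len, d_k), weights of shape (seq_len, seq_len)).
    """
    Q, K, V = X @ W_q, X @ W_k, X @ W_v
    d_k = K.shape[-1]
    # All token pairs are scored in one matrix product, so the whole
    # sequence is processed in parallel rather than left to right.
    scores = Q @ K.T / np.sqrt(d_k)
    # No causal mask: each position can attend to its left AND right context.
    weights = softmax(scores, axis=-1)  # each row sums to 1
    return weights @ V, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_k = 5, 16, 8
X = rng.normal(size=(seq_len, d_model))
W_q, W_k, W_v = (rng.normal(size=(d_model, d_k)) for _ in range(3))
out, attn = self_attention(X, W_q, W_k, W_v)
print(out.shape, attn.shape)  # (5, 8) (5, 5)
```

Row `i` of `attn` shows how much token `i` draws on every other token when building its contextual representation.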

LSTM networks complement this capability by modeling sequential data and capturing long-range dependencies. They use a sophisticated gating mechanism comprising input, forget, and output gates that control information flow, determining what should be remembered or discarded.
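The gating arithmetic can be sketched as a single LSTM time step. This is the standard textbook LSTM cell written with NumPy; the function name `lstm_step`, the stacked-weight layout and the gate order are assumptions for illustration, not details reported by the study.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step.

    Shapes: x_t (input_dim,); h_prev, c_prev (hidden,);
    W (4*hidden, input_dim); U (4*hidden, hidden); b (4*hidden,).
    Rows are stacked in an assumed order: input, forget, output, candidate.
    """
    n = h_prev.shape[0]
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0:n])          # input gate: how much new information to write
    f = sigmoid(z[n:2 * n])      # forget gate: how much old cell state to keep
    o = sigmoid(z[2 * n:3 * n])  # output gate: how much cell state to expose
    g = np.tanh(z[3 * n:4 * n])  # candidate values that could be written
    c_t = f * c_prev + i * g     # keep part of the old memory, add part of the new
    h_t = o * np.tanh(c_t)       # hidden state passed to the next step or layer
    return h_t, c_t

# Run the cell over a random 6-step sequence with random weights.
rng = np.random.default_rng(1)
input_dim, hidden = 3, 4
W = rng.normal(size=(4 * hidden, input_dim))
U = rng.normal(size=(4 * hidden, hidden))
b = np.zeros(4 * hidden)
h, c = np.zeros(hidden), np.zeros(hidden)
for x_t in rng.normal(size=(6, input_dim)):
    h, c = lstm_step(x_t, h, c, W, U, b)

# "Remember" case (hidden size 2): forget gate saturated open, input gate shut.
b_keep = np.zeros(8)
b_keep[0:2] = -50.0  # input gate  -> ~0
b_keep[2:4] = 50.0   # forget gate -> ~1
c_old = np.array([0.7, -0.3])
_, c_new = lstm_step(np.ones(2), np.zeros(2), c_old,
                     np.zeros((8, 2)), np.zeros((8, 2)), b_keep)
print(c_new)  # carries c_old forward unchanged
```

Setting the forget-gate bias high and the input-gate bias low makes the cell carry its state forward untouched, which is the "remember" behavior described above. Reversing the two biases lets new input overwrite the memory instead.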

“LSTMs have been successfully applied to various sequence modeling tasks, including text classification and sentiment analysis,” the researchers explain. “Their ability to capture long-term dependencies makes them particularly suitable for processing social media content, where context and temporal relationships are crucial.”

The hybrid architecture processes data through multiple layers. Input text is first tokenized and embedded into a high-dimensional vector space. These embeddings are then processed through BERT’s transformer layers to capture contextual relationships. The resulting contextual embeddings pass to the LSTM layer, which focuses on sequential dependencies. Finally, a fully connected layer followed by a Softmax layer produces the classification output.
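The layer order described above can be traced end to end in a toy forward pass. Every component here is a hypothetical stand-in: a six-entry vocabulary replaces BERT's WordPiece tokenizer, a single randomly initialized attention layer replaces BERT's pre-trained transformer stack, and the LSTM's final hidden state is assumed to be what reaches the classifier (the article does not say which state the researchers used). With untrained weights the probabilities are meaningless; the sketch only shows the shapes and the data flow.

```python
import numpy as np

rng = np.random.default_rng(42)
D_MODEL, HIDDEN, N_CLASSES = 16, 8, 2

def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

# Tokenize and embed (hypothetical toy vocabulary, not BERT's WordPiece vocabulary).
VOCAB = {"[CLS]": 0, "[UNK]": 1, "breaking": 2, "hoax": 3, "official": 4, "report": 5}
embedding_table = rng.normal(scale=0.1, size=(len(VOCAB), D_MODEL))

def embed(text):
    ids = [VOCAB["[CLS]"]] + [VOCAB.get(w, VOCAB["[UNK]"]) for w in text.lower().split()]
    return embedding_table[ids]  # (seq_len, D_MODEL)

# Stand-in for BERT's transformer layers: one random, untrained self-attention layer.
W_q, W_k, W_v = (rng.normal(scale=0.1, size=(D_MODEL, D_MODEL)) for _ in range(3))

def contextualize(X):
    scores = (X @ W_q) @ (X @ W_k).T / np.sqrt(D_MODEL)
    return X + softmax(scores) @ (X @ W_v)  # residual keeps shape (seq_len, D_MODEL)

# LSTM over the contextual embeddings; gate order: input, forget, output, candidate.
W = rng.normal(scale=0.1, size=(4 * HIDDEN, D_MODEL))
U = rng.normal(scale=0.1, size=(4 * HIDDEN, HIDDEN))
b = np.zeros(4 * HIDDEN)

def lstm_final_hidden(seq):
    h, c = np.zeros(HIDDEN), np.zeros(HIDDEN)
    for x_t in seq:  # one token at a time, in order
        z = W @ x_t + U @ h + b
        i = sigmoid(z[:HIDDEN])
        f = sigmoid(z[HIDDEN:2 * HIDDEN])
        o = sigmoid(z[2 * HIDDEN:3 * HIDDEN])
        g = np.tanh(z[3 * HIDDEN:])
        c = f * c + i * g
        h = o * np.tanh(c)
    return h  # assumed sequence summary passed to the classifier

# Fully connected layer followed by softmax over two classes.
W_fc = rng.normal(scale=0.1, size=(N_CLASSES, HIDDEN))
b_fc = np.zeros(N_CLASSES)

def classify(text):
    h = lstm_final_hidden(contextualize(embed(text)))
    return softmax(W_fc @ h + b_fc)  # class order is arbitrary here, e.g. [real, fake]

probs = classify("Breaking official report")
print(probs.round(3), probs.sum())
```

In practice the stand-in attention layer would be replaced by a pre-trained BERT encoder (for example one loaded through the Hugging Face `transformers` library), and the LSTM and classifier weights would be trained on labeled data; the article does not describe those training details.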

For their experiments, the researchers built a custom data set, using a specialized web crawler to aggregate content from social media platforms. The final collection comprised 39,940 items: 20,176 fake news pieces and 19,764 real news items from Twitter, a nearly even split between the two classes that gives a balanced basis for training.
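A quick calculation confirms that the class counts add up and shows how balanced they are. It also yields the majority-class baseline: a trivial classifier that labels every item as fake would already be right about half the time, which is the floor that any reported accuracy should be compared against.

```python
fake, real = 20_176, 19_764
total = fake + real
assert total == 39_940  # matches the reported collection size

fake_share = fake / total
real_share = real / total
# Accuracy of always predicting the larger class ("fake").
majority_baseline = max(fake_share, real_share)
print(f"fake: {fake_share:.1%}, real: {real_share:.1%}, "
      f"majority baseline: {majority_baseline:.1%}")
# fake: 50.5%, real: 49.5%, majority baseline: 50.5%
```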

Analysis of the content revealed interesting linguistic patterns. In English-language genuine news, words like “honesty,” “truth,” and “reliable” appeared frequently, while false news contained more terms like “fake,” “hoax,” and “scandal.” Similar patterns emerged in Chinese-language content, with authentic news featuring words equivalent to “truth,” “official,” and “report,” while misinformation used terms translating to “rumor,” “false,” and “hearsay.”
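Per-class word frequencies like these can be computed with a simple tally. The posts and the stop-word list below are invented for illustration and are not drawn from the study's data, and the sketch handles English only.

```python
import re
from collections import Counter

# Hypothetical toy posts, NOT items from the study's data set.
posts = [
    ("real", "Official report confirms the figures are reliable"),
    ("real", "The official report was reviewed for truth and accuracy"),
    ("fake", "Shocking hoax exposed as the scandal grows"),
    ("fake", "This fake cure is a hoax spreading online"),
]
STOP_WORDS = {"the", "is", "a", "as", "and", "for", "was", "are", "this"}  # tiny, hypothetical

def top_words(posts, label, k=3):
    """Return the k most frequent non-stop-words in posts with the given label."""
    counts = Counter()
    for lab, text in posts:
        if lab == label:
            tokens = re.findall(r"[a-z]+", text.lower())
            counts.update(t for t in tokens if t not in STOP_WORDS)
    return counts.most_common(k)

print("real:", top_words(posts, "real"))
print("fake:", top_words(posts, "fake"))
```

On a real corpus the same tally, run separately over each class, surfaces class-specific vocabulary of the kind the researchers report.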

The team preprocessed the data set and engineered features from it, including polarity (sentiment) scores, comment length, and word count. To improve training quality, they removed punctuation and stop words from English content and performed word segmentation on Chinese text.
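The English-side preprocessing and the three features can be sketched with the standard library alone. The stop-word list and polarity lexicon are hypothetical miniatures, and measuring comment length in characters is an assumption; a real pipeline would use a full stop-word resource and an established sentiment lexicon or model, and the article does not say which the researchers used. Chinese text would additionally need word segmentation (for example with a segmenter such as jieba) before these steps, because it does not separate words with spaces, and `string.punctuation` covers only ASCII punctuation.

```python
import string

# Hypothetical miniature resources, for illustration only.
STOP_WORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "this"}
POLARITY = {"good": 1, "reliable": 1, "true": 1,
            "bad": -1, "fake": -1, "hoax": -1, "scandal": -1}
PUNCT_TABLE = str.maketrans("", "", string.punctuation)

def preprocess(text):
    """Lowercase, strip ASCII punctuation, and drop stop words."""
    tokens = text.lower().translate(PUNCT_TABLE).split()
    return [t for t in tokens if t not in STOP_WORDS]

def features(text):
    tokens = preprocess(text)
    scored = [POLARITY[t] for t in tokens if t in POLARITY]
    return {
        "comment_length": len(text),  # characters in the raw comment
        "word_count": len(tokens),    # words left after stop-word removal
        # Mean lexicon score in [-1, 1]; 0.0 when no lexicon word appears.
        "polarity": sum(scored) / len(scored) if scored else 0.0,
    }

print(features("This is a HOAX, and the scandal is fake!"))
```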

What makes this approach particularly valuable is its potential application in scenarios where user engagement data is unavailable or limited—a common challenge in early-stage detection. By focusing solely on content analysis rather than relying on interaction metrics, the system could potentially identify misinformation before it achieves significant circulation.

Beyond technical advancements, the research explores how such systems might enhance digital literacy. As misinformation becomes increasingly sophisticated, tools that help individuals critically evaluate online content become essential for developing a more discerning public.

The researchers suggest their model could inform educational strategies that enhance users’ critical thinking skills, potentially through training programs or applications that help individuals distinguish between credible information and falsehoods.

While promising, the study acknowledges certain limitations. The data set, though carefully curated, may have size constraints that could affect the generalizability of findings across all types of misinformation and social media platforms.

As social media continues to evolve as a primary information source for billions worldwide, the development of effective, early-stage misinformation detection tools represents a crucial step toward creating healthier information ecosystems and promoting digital literacy in an increasingly complex media landscape.

