As artificial intelligence reshapes digital landscapes, distinguishing fact from fiction on social media has become increasingly difficult for everyday users. AI-generated content now seamlessly blends with authentic posts, creating a challenging environment for information consumers navigating their daily feeds.

While no detection method is foolproof, experts suggest adopting a structured framework, similar to those used by misinformation researchers, to evaluate content more effectively across platforms.

“The technology has advanced to where visual cues alone aren’t enough,” says Dr. Marcia Torres, digital media researcher at Stanford’s Center for Digital Ethics. “We’re seeing sophisticated AI content that requires a multi-faceted evaluation approach.”

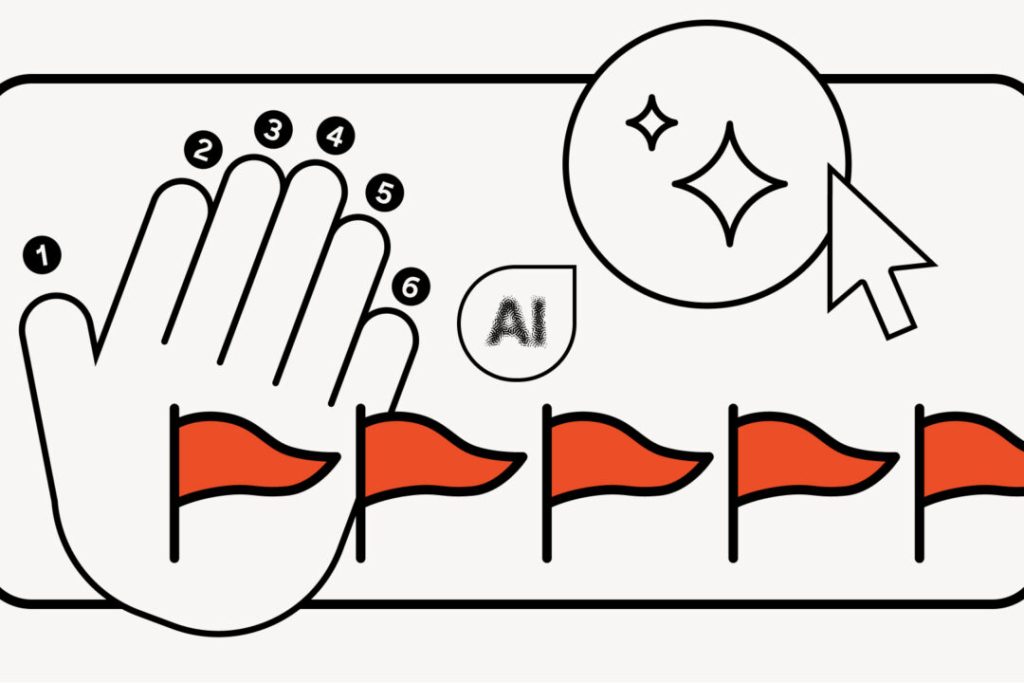

When assessing social media content, users should first examine the source. Reliable accounts typically maintain connections to established institutions and demonstrate consistent posting patterns. Suspicious indicators include randomly generated usernames with strings of numbers and newly created accounts with few followers.
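For readers who want to see the source check spelled out, the warning signs above can be sketched as a short Python checklist. This is a rough illustration only: the `Account` fields, the four-digit pattern, and the 90-day and 50-follower thresholds are assumptions chosen for the example, not figures from the researchers quoted here or from any platform.

```python
import re
from dataclasses import dataclass
from datetime import date


@dataclass
class Account:
    # Hypothetical fields; real platforms expose this data differently.
    username: str
    created: date
    followers: int


def source_red_flags(account, today, min_age_days=90, min_followers=50):
    """Return the suspicious indicators that apply to an account.

    The thresholds are illustrative assumptions, not published cutoffs.
    """
    flags = []
    # A run of four or more digits suggests an auto-generated username.
    if re.search(r"\d{4,}", account.username):
        flags.append("username contains a long run of digits")
    # Accounts younger than the threshold count as newly created.
    if (today - account.created).days < min_age_days:
        flags.append("account is newly created")
    if account.followers < min_followers:
        flags.append("account has few followers")
    return flags


suspect = Account("user84730192", date(2025, 1, 1), 3)
print(source_red_flags(suspect, today=date(2025, 1, 15)))
```

A flag here is a prompt for a closer look, not proof that an account is fake. Plenty of genuine users have new accounts or small followings.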

Content evaluation forms the second critical layer of assessment. Posts containing vague information, outrageous claims, or assertions that contradict established knowledge should trigger skepticism. Many platforms now incorporate warning flags or community notes for potentially misleading content, while legitimate AI-created content may include disclosure tags such as #AI, #satire, or #AD.

Stylistic elements offer additional clues. AI-generated text often exhibits distinctive patterns, including wooden grammar, flowery language, unnatural repetition, and certain vocabulary preferences. Research from AI detection company GPTZero identifies words like “elevate,” “captivate,” and “tapestry” as commonly appearing in AI-generated content, alongside phrases such as “provided valuable insights” or “a rich tapestry.”
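As a simple illustration of the vocabulary cue, the Python sketch below counts how often the words and phrases named above appear in a piece of text. It is not GPTZero's method, which is not described here. The function name and the exact-match approach are assumptions, and the word list contains only the examples cited in this article.

```python
import re

# Only the examples cited above; a real list would be far longer.
FLAGGED_WORDS = {"elevate", "captivate", "tapestry"}
FLAGGED_PHRASES = ("provided valuable insights", "a rich tapestry")


def stylistic_hits(text):
    """Count exact occurrences of flagged words and phrases in text."""
    lowered = text.lower()
    words = re.findall(r"[a-z']+", lowered)
    # Exact word matches only, so "elevates" does not count as "elevate".
    hits = {w: words.count(w) for w in FLAGGED_WORDS if w in words}
    for phrase in FLAGGED_PHRASES:
        count = lowered.count(phrase)
        if count:
            hits[phrase] = count
    return hits


sample = "The report provided valuable insights into a rich tapestry of ideas."
print(stylistic_hits(sample))
```

A high count is only a hint. Human writers use these words too, so a tally like this works best alongside the source and content checks rather than on its own.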

Emotional manipulation represents another red flag. Posts designed to trigger disproportionate emotional responses may warrant additional scrutiny. Studies published in the Journal of Operations Research indicate AI-driven misinformation frequently incorporates anger-inducing language and profanity to maximize engagement and sharing.

“Emotional manipulation is a cornerstone of effective misinformation,” explains Dr. Torres. “When content seems designed primarily to provoke rather than inform, that’s when your alarm bells should ring loudest.”

Different platforms present unique challenges for misinformation detection. On TikTok, where young users predominate, “content farms” mass-produce videos featuring AI voiceovers paired with on-screen text, often targeting political discourse. NewsGuard research documented numerous accounts producing multiple misleading videos daily in this format.

On X (formerly Twitter), deepfake videos with political themes are a significant concern. The platform’s verification system adds a further complication: a Cambridge University study found that approximately half of synthetic profiles held verified status through the platform’s subscription model.

Facebook users face different challenges, with the platform’s algorithm increasingly surfacing high-engagement posts from non-connections. A study published in Harvard’s Misinformation Review highlighted how misleading Facebook content often attempts to direct users off-platform toward content farms, fraudulent stores, and scam websites.

Mozilla and other organizations have developed specialized tools to assist users, including TrueMedia.org, which scans social posts for synthetic elements, and Mozilla’s Deepfake Detector, which analyzes text across multiple detection engines. However, experts emphasize that while helpful, these tools cannot replace critical thinking skills.

“The technology for creating convincing fakes is evolving faster than our detection capabilities,” notes Sarah Chen, digital literacy advocate at the Information Integrity Institute. “Building foundational media literacy skills remains our best defense against misinformation.”

As AI technology continues to advance, experts recommend maintaining healthy skepticism online, verifying information through trusted sources, and recognizing that people frequently overestimate their ability to identify AI-generated content. With vigilance and critical assessment, users can better navigate an increasingly complex information environment.
