
AI-Generated Imagery Infiltrates Mainstream Journalism, Raising Verification Concerns

A concerning trend is emerging in global media as AI-generated and manipulated images move beyond fringe platforms and into mainstream journalism, with potentially serious implications for public discourse and information integrity.

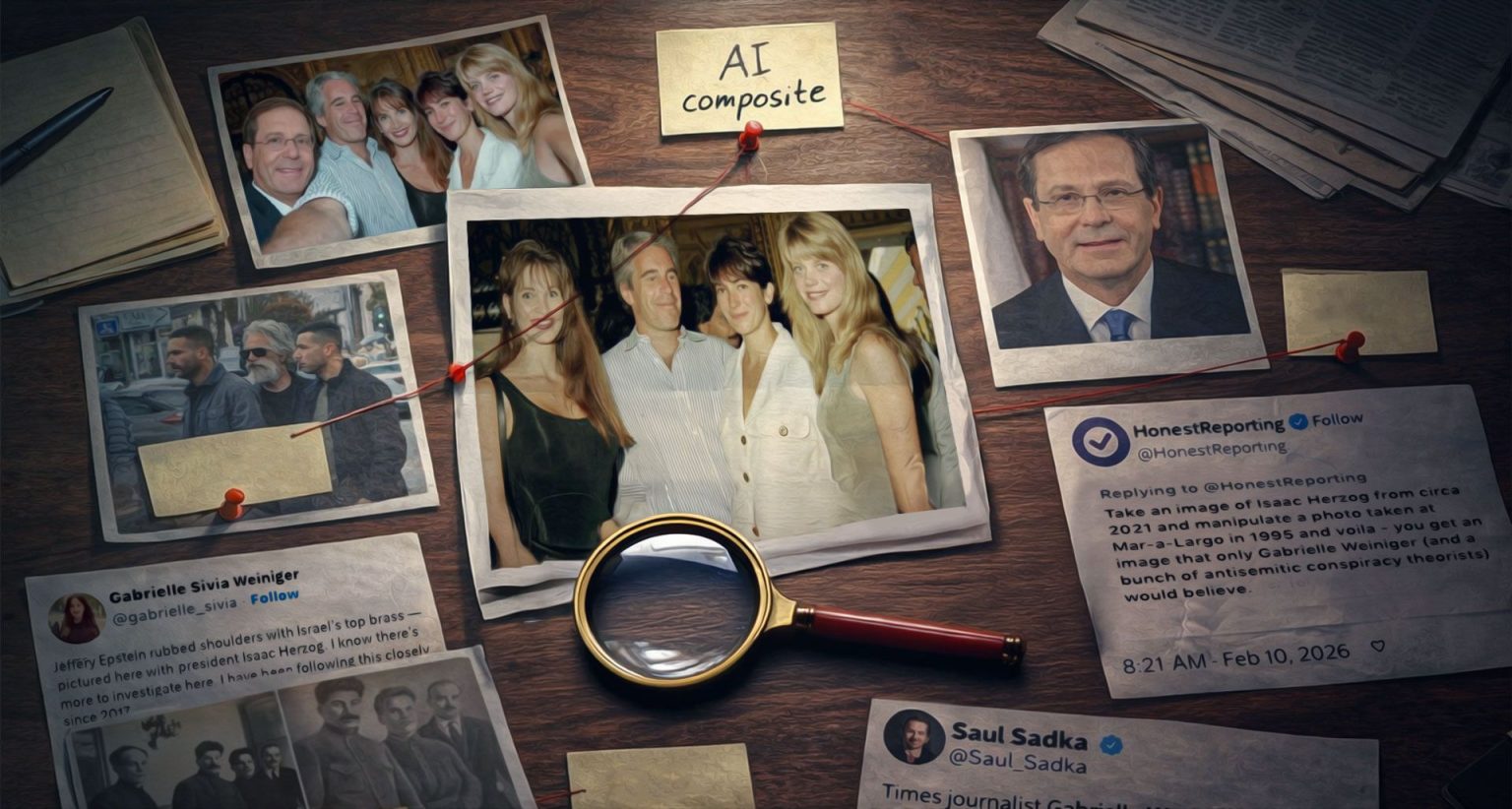

Earlier this month, two fabricated images involving Jeffrey Epstein began circulating widely online. The first, an obviously synthetic image, purported to show Epstein walking in Tel Aviv, flanked by bodyguards and surrounded by Israeli street signs. The second, a more consequential fabrication, digitally inserted Israeli President Isaac Herzog into a well-known 1990s photograph of Epstein and Ghislaine Maxwell.

The incident took a troubling turn when Gabrielle Sivia Weiniger, a journalist covering the Middle East for The Times of London, reposted the Epstein-Herzog composite. She later acknowledged that it was fake and apologized for sharing it without verification.

Weiniger’s apology was appropriate, but media analysts point out that the damage had already been done. In today’s fast-moving information environment, initial impressions travel much further than corrections, and audiences tend to retain visual associations long after retractions are issued.

“This incident is not about one tweet,” notes media ethics researcher Dr. Samuel Kirkland. “It represents a structural vulnerability in modern journalism: the collapse of visual verification standards in an era where synthetic imagery is increasingly sophisticated and accessible.”

The case highlights how fabricated imagery can shape public perception before basic fact-checking occurs. Photo manipulation itself has a long history, from Soviet-era darkroom techniques that erased political enemies from photographs to modern digital alterations. What has fundamentally changed is accessibility and scale.

“What once required state infrastructure, chemical expertise, and physical negatives can now be achieved by anyone with generative software,” explains Dr. Lydia Morales, a digital forensics specialist. “Synthetic realism is scalable, instantaneous, and globally distributable. The barrier to entry has collapsed, but editorial discipline hasn’t caught up.”

Forensic analysis of both fake Epstein images revealed multiple red flags that should have prompted verification. The Tel Aviv street scene contained malformed Hebrew lettering inconsistent with Israeli municipal typographic standards, an unnaturally symmetrical arrangement of security personnel, and optical inconsistencies that professionals could spot without specialized tools.

The Epstein-Herzog composite displayed even more obvious problems: a temporal impossibility (Herzog’s present-day appearance inserted into a 1990s photograph), inconsistent lighting, anatomical distortions, and poor edge blending. Any of these should have prompted a basic plausibility check before the image was shared.

Media experts emphasize that journalistic standards must adapt to this new reality. Before amplifying potentially significant images, baseline verification should include source checking, reverse image searches, metadata review, chronological plausibility assessment, and lighting and compositional scrutiny.

“The issue isn’t that AI exists,” notes digital media ethicist Marcus Chen. “The issue is that verification no longer consistently precedes amplification in newsrooms under pressure to be first rather than accurate.”

The consequences extend beyond individual incidents. In conflict zones and politically sensitive contexts, synthetic imagery can now be seamlessly injected into public discourse, potentially inflaming tensions or advancing disinformation. When journalists amplify composite visuals without forensic scrutiny, they undermine trust not just in their reporting but in visual documentation itself.

Industry leaders are calling for more rigorous verification protocols. Common warning signs of synthetic imagery include text anomalies (especially in multilingual content), unnaturally symmetrical arrangements of people, inconsistent lighting, irregular edge blending, chronological implausibility, and the absence of a verifiable source chain.

“Historically, photographs functioned as presumptive evidence. Today, images increasingly function as claims,” says visual communication professor Elaine Westbrook. “Verification must move from pixel-level trust to process-level scrutiny.”

The Epstein-Herzog composite serves as a warning for modern journalism. As synthetic imagery becomes more sophisticated, the profession faces a critical challenge: develop more rigorous verification standards or risk allowing fabricated visuals to shape public perception before anyone thinks to question their authenticity.

