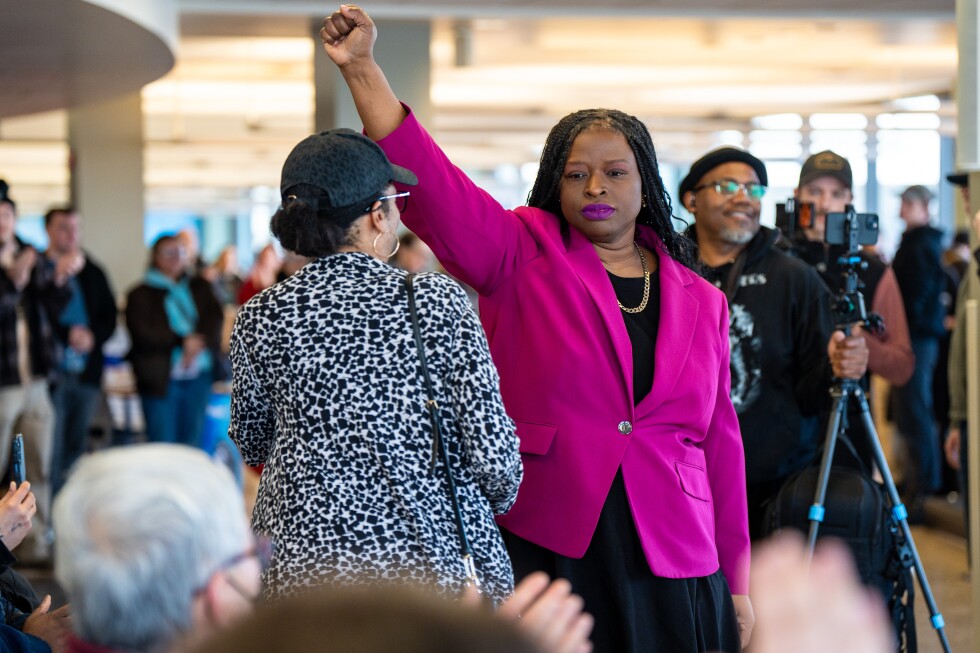

The Trump administration’s use of AI-generated imagery on official government channels is raising significant concerns among misinformation experts, particularly after the White House shared a doctored image that showed civil rights attorney Nekima Levy Armstrong crying after her arrest.

The controversy began when Homeland Security Secretary Kristi Noem’s account posted the original image of Levy Armstrong’s arrest. Shortly after, the official White House account shared an altered version showing her in tears. The manipulation comes amid a surge of AI-edited imagery circulating across the political spectrum following the fatal shootings of Renee Good and Alex Pretti by federal immigration officers in Minneapolis.

Rather than addressing criticism of the edited image, White House officials doubled down on their approach. Deputy Communications Director Kaelan Dorr declared on the social media platform X that the “memes will continue,” while Deputy Press Secretary Abigail Jackson shared posts mocking those who expressed concern about the manipulation.

David Rand, an information science professor at Cornell University, suggests the White House’s characterization of the altered image as a “meme” appears designed to frame it as humor, potentially “shielding them from criticism for posting manipulated media.” He noted this instance differs from the administration’s previous sharing of more obviously cartoonish images.

Digital communication experts point out that memes have long conveyed layered messages that resonate differently with various audiences. Zach Henry, a Republican communications consultant who founded influencer marketing firm Total Virality, explained the strategy: “People who are terminally online will see it and instantly recognize it as a meme. Your grandparents may see it and not understand the meme, but because it looks real, it leads them to ask their kids or grandkids about it.”

The controversy highlights growing concerns about truth and trust in government communications. Michael A. Spikes, a Northwestern University professor and news media literacy researcher, warned that such alterations “crystallize an idea of what’s happening, instead of showing what is actually happening.”

“The government should be a place where you can trust the information, where you can say it’s accurate, because they have a responsibility to do so,” Spikes said. “By sharing this kind of content, and creating this kind of content… it is eroding the trust we should have in our federal government to give us accurate, verified information.”

The White House’s approach exists within a broader ecosystem of AI-generated misinformation related to Immigration and Customs Enforcement (ICE) actions, protests, and citizen interactions. Following Renee Good’s shooting by an ICE officer, numerous fabricated videos began circulating on social media depicting confrontations with immigration authorities.

Jeremy Carrasco, a content creator specializing in media literacy and debunking AI videos, explained that many such videos come from accounts engaged in “engagement farming” – capitalizing on trending topics to generate clicks. He noted that viewers who oppose ICE and DHS might consume this content as “fan fiction,” representing wishful thinking about pushback against these agencies.

Carrasco expressed concern that many viewers struggle to distinguish between authentic and fabricated content, even when AI-generated material contains obvious errors like street signs with gibberish text. This problem extends beyond immigration issues, as evidenced by the explosion of manipulated images following the capture of deposed Venezuelan leader Nicolás Maduro earlier this month.

Experts predict AI-generated political content will only become more prevalent. While technological solutions like watermarking systems developed by the Coalition for Content Provenance and Authenticity could help verify media authenticity, Carrasco believes widespread implementation remains at least a year away.

UCLA Professor Ramesh Srinivasan warned that AI systems will “exacerbate, amplify and accelerate these problems of an absence of trust, an absence of even understanding what might be considered reality or truth or evidence.” He cautioned that social media platforms’ algorithms tend to favor extreme and conspiratorial content – precisely the type that AI tools can easily generate.

“It’s going to be an issue forever now,” Carrasco concluded. “I don’t think people understand how bad this is.”

12 Comments

The Trump administration’s use of AI-generated imagery raises serious concerns about misinformation and eroding public trust. Doctoring images is a dangerous precedent that undermines transparency and accountability in government.

I agree, the White House’s dismissive response is concerning. They should be addressing these issues directly instead of doubling down on the use of manipulated media.

As someone with a keen interest in the mining and energy sectors, I’m deeply concerned about the potential ripple effects of this trend. Eroding public trust can undermine important policy decisions and investment in critical industries.

That’s a valid concern. Maintaining transparency and credibility is essential for the mining and energy sectors to function effectively and earn the public’s confidence. This controversy is a wake-up call for stronger safeguards.

This controversy highlights the complex challenges we face with the rapid advancement of AI technology. While AI can have many beneficial applications, we must be vigilant about its potential misuse for political purposes.

Absolutely. The line between authentic and fabricated imagery is becoming increasingly blurred, and strong safeguards are needed to preserve the integrity of information shared by government officials.

The use of AI-generated imagery by the Trump administration is a worrying development that could have serious implications for the public’s trust in their government. Factual, unbiased information is essential for an informed citizenry.

I share your concerns. Manipulating images to spread misinformation is a dangerous tactic that must be condemned. The public deserves honesty and transparency from their elected leaders.

This controversy highlights the need for robust policies and regulations around the use of AI-generated content, especially in official government communications. We must ensure the integrity of information is maintained.

Agreed. Clear guidelines and accountability measures are necessary to prevent the misuse of AI technology for political gain. The public’s trust is at stake, and that should be the top priority.

As an expert in the mining and commodities sector, I’m concerned about the broader implications of this trend. Eroding public trust can undermine confidence in critical industries and policies, with far-reaching economic consequences.

That’s a good point. Maintaining transparency and credibility in the mining and energy sectors is crucial, especially when it comes to issues like environmental regulations and resource development.