
Misinformation Paradigm Fails to Deliver After a Decade of Effort

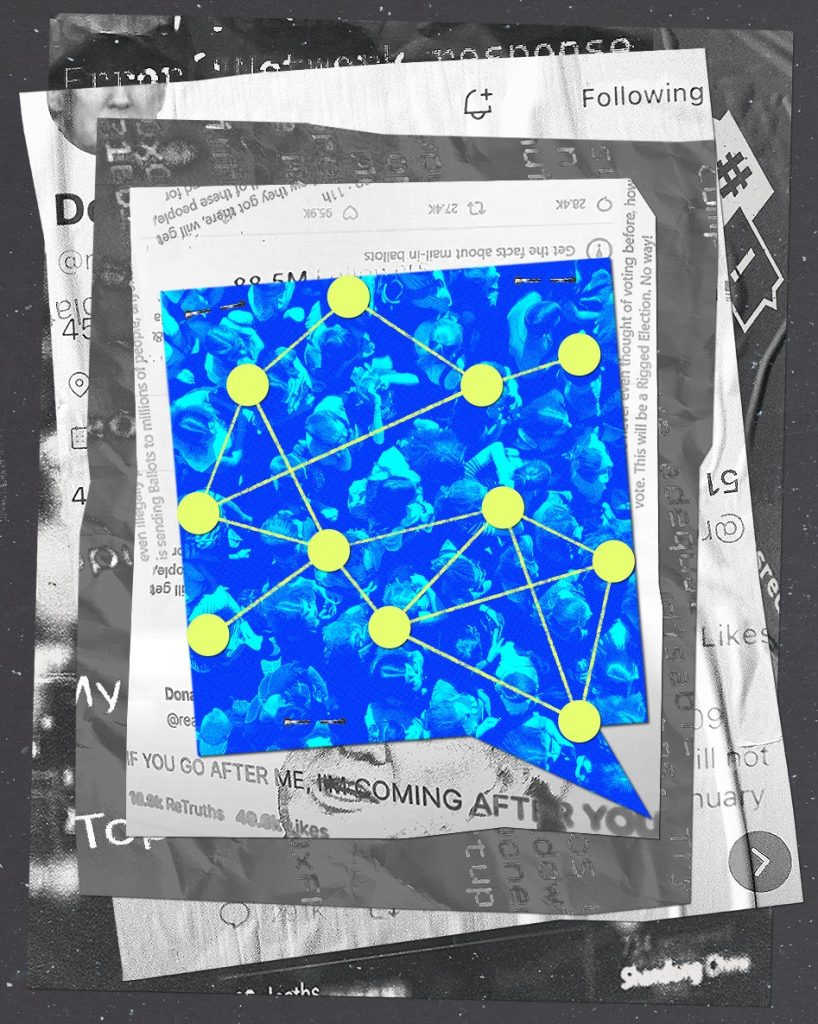

After nearly a decade of concentrated efforts to combat misinformation, researchers and experts are questioning whether the approach has been effective at all. Despite significant investment by platforms, researchers, and civil society groups, the spread of false information continues largely unabated, with polarization and distrust in institutions reaching new heights.

“The early months of Donald Trump’s second administration have, much like his first four years, been defined by lies: strange lies, self-serving lies and inhumane lies,” note Zeve Sanderson and Scott Babwah Brennen, who lead technology policy centers at NYU.

Since 2016, misinformation has dominated public discourse. Oxford Dictionaries selected “post-truth” as its word of the year in 2016; Collins Dictionary followed with “fake news” in 2017 and Dictionary.com with “misinformation” in 2018. World leaders surveyed by the World Economic Forum have ranked misinformation and disinformation as the highest short-term risk for two consecutive years, ahead of climate events, inflation, and war.

Despite this recognition, a fundamental question remains: has the fight against misinformation achieved anything meaningful?

“Given all this, it’s hard to feel confident that the work of the last decade has made measurable progress in curing our so-called ‘information disorder,’” the researchers note. “Did we even understand the problem to begin with?”

The Rise of the Misinformation Paradigm

The dominant approach to misinformation emerged as social media platforms grew in influence, allowing falsehoods to spread at unprecedented speed and scale. The paradigm centered on a binary premise: information is either true or false. False information was seen as dangerous primarily because it could persuade people to believe untrue things.

This framework led to predictable solutions. Fact-checking exploded from a niche practice to a mainstream journalistic function. Technology platforms invested billions in content moderation systems. Facebook alone reportedly spent $13 billion on safety and security efforts over a five-year period, with substantial resources dedicated to identifying and limiting misinformation.

Researchers focused on measuring the effectiveness of corrections and debunking efforts, debating strategies like prebunking versus debunking, and whether repeating false claims while debunking them might inadvertently reinforce them.

A Failed Approach

Despite these investments, misinformation remains rampant. Movements like “Stop the Steal,” climate denial, and vaccine skepticism persist. According to a 2021 survey by the Cato Institute and YouGov, most Americans do not trust social media platforms to moderate content fairly. Many companies have scaled back their misinformation policies and enforcement efforts.

“After years of going all in on the misinformation paradigm, we’re arguably worse off,” the researchers write.

Critics have identified three main problems with the dominant approach. First, the definitional critique points to the difficulty of categorizing information as true or false in fast-changing situations. The COVID-19 lab leak theory exemplifies this problem: initially dismissed as misinformation and moderated across platforms, it is now considered a credible possibility by some intelligence officials.

Second, the prevalence critique challenges assumptions about how much misinformation people actually encounter. Research suggests fake news represents just 0.15% of Americans’ daily media diet. Even when definitions are expanded to include low-quality news sources, the proportion remains relatively small at 5-10%.

Third, the causal critique questions whether online misinformation significantly impacts political attitudes and behaviors. People generally aren’t easily persuaded by new information; instead, they tend to interpret information through the lens of their existing worldview. Digital misinformation largely “preaches to the choir” rather than converting new believers.

Beyond True and False

The researchers argue that the fundamental problem lies in treating all falsehoods as the same type of communicative act. This approach fails to account for how communication actually functions in society.

Take Donald Trump’s claim during the 2024 campaign that Haitian immigrants were eating pets in Springfield, Ohio. Though thoroughly debunked, the false claim wasn’t primarily about facts. It aimed to draw attention to immigration and to communicate visceral disgust for immigrants. Fact-checking did little to address the claim’s actual purpose.

“Information can be communicated to shape identities, influence culture, strategically impact the media environment and more,” the researchers note. “The truth, of course, matters, but it also clearly does not define the myriad effects of information.”

This dynamic may explain why feared scenarios about AI-generated misinformation disrupting the 2024 election didn’t materialize. Most AI-generated content that circulated was “cartoons and agitprop” that reinforced existing beliefs rather than changing minds.

A Path Forward

Rather than abandoning efforts to combat harmful false information, the researchers call for a more holistic approach that recognizes how communication functions in society and addresses broader challenges like trust, identity, and polarization.

Several promising efforts point in this direction. Some newsrooms are experimenting with direct communication between journalists and readers to meet community information needs. For example, a partnership between the Information Futures Lab, Factchequeado, and We Are Más created a bilingual WhatsApp group to answer questions from Hispanic communities in South Florida.

Technologists and policymakers are developing “middleware”—third-party software that sits between platforms and users—to give people more control over their social media experience. Internet scholar Ethan Zuckerman recently filed a lawsuit against Meta to establish legal protections for such tools.

Researchers are also broadening their focus beyond truth and falsehood to examine how information relates to identity, community, and power. This work explores the “deep memetic frames” through which people interpret the world and how these frames shape politics and relationships.

“Our challenge now is to expand the scope of how we defend democracy in the digital age while preserving the institutional momentum of the past decade,” the researchers conclude. “The misinformation paradigm, for all its limitations, has shown that rapid, large-scale coordination around democratic challenges is possible.”

The task ahead is to maintain that coordination while developing more effective approaches to strengthen democracy—not just by correcting falsehoods, but by building healthier information environments from the ground up.

