Social media users can be trained to combat misinformation directly, according to new research from Carnegie Mellon University that challenges traditional approaches to media literacy.

While most research has focused on training people to identify false information, a recent study suggests that recognizing misinformation doesn’t necessarily prevent its spread. Many social media users simply scroll past dubious content without taking action, allowing falsehoods to spread unchallenged.

The Carnegie Mellon team explored a fundamentally different approach: teaching people to actively counter misinformation rather than passively ignore it. Their research examined both direct “social corrections” (such as posting comments with accurate information) and simpler actions like reporting misleading content to platform moderators.

“The problem isn’t just about identifying misinformation anymore,” said a researcher involved in the study. “It’s about empowering users to take meaningful action when they encounter it, creating a community response to false information.”

The experiment involved government analysts who had enrolled in a social cybersecurity training program called OMEN. Participants completed an interactive session specifically designed to teach them how and why to counter misinformation when they encounter it online.

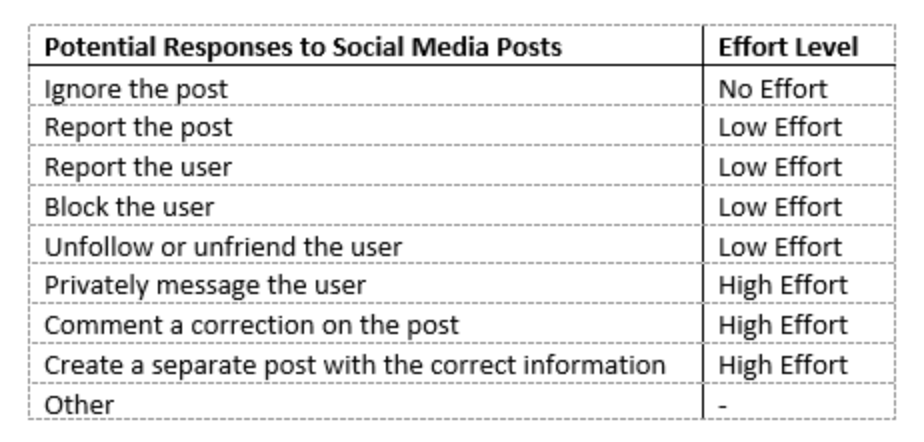

To measure the training’s effectiveness, researchers presented participants with social media posts explicitly labeled as false both before and after the training. Participants were asked whether they would respond to these posts and what actions they might take from a menu of options.

These potential interventions ranged from high-effort responses, such as commenting with corrections or messaging the original poster privately, to lower-effort actions like reporting the content or simply downvoting it. Participants could select multiple responses and were also questioned about how the identity of the poster or the specific platform might influence their chosen approach.

The study’s methodology is noteworthy for its focus on behavioral change rather than knowledge acquisition alone. Instead of testing participants’ ability to identify false information (a skill these government analysts likely already possessed), the researchers measured their willingness to intervene when they encountered it.

This approach acknowledges the complex social dynamics at play in online environments. Many users hesitate to correct misinformation publicly because they worry about sparking conflict or appearing confrontational, or because they feel their intervention won’t make a difference in the broader information ecosystem.

The research comes at a critical time when misinformation on social platforms continues to present significant challenges to public discourse, electoral integrity, and public health initiatives. Major platforms like Facebook, Twitter (now X), and YouTube have implemented various measures to combat false information, but user-generated corrections remain an important supplementary approach.

Social media companies have shown varying levels of receptivity to this community-based approach to content moderation. Some platforms have integrated features that make it easier for users to report misinformation or add context to misleading posts, while others have been criticized for not doing enough to empower their user base.

Experts in digital media literacy suggest this study represents an important evolution in how we think about combating online falsehoods. Traditional media literacy efforts have emphasized critical thinking skills for evaluating content, but this newer approach recognizes that identifying misinformation is only half the battle; taking action is equally important.

The research team plans to expand its study to include a more diverse group of participants beyond government analysts, potentially offering insights into how different demographic groups respond to misinformation and how training might be tailored accordingly.

For everyday social media users, the study suggests that even small actions like reporting misleading content can contribute to a healthier information environment. As platforms continue to refine their approaches to content moderation, the collective actions of informed users may prove increasingly valuable in stemming the tide of misinformation online.
