A groundbreaking international study published last month in Science has raised concerns about the potential for “AI swarms” to manipulate public opinion and disrupt future democratic processes at an unprecedented scale, though researchers believe smaller local elections may not face immediate threats.

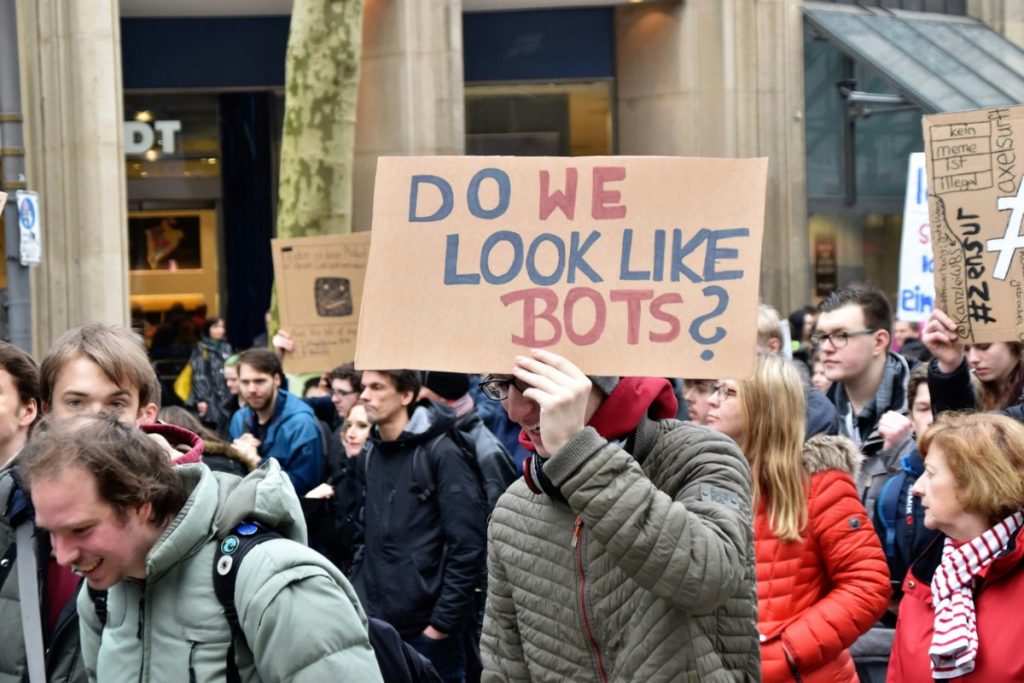

The study details how developments in autonomous large language models (LLMs) and multi-agent systems could enable bad actors to deploy thousands of AI-controlled personas that work in coordination to shape online discourse and create artificial consensus on divisive issues.

“These systems are capable of coordinating autonomously, infiltrating communities and fabricating consensus efficiently,” the researchers warned, highlighting how these technologies could further strain democratic systems already challenged by declining public trust.

Unlike traditional botnets that operate on repetitive scripts, these advanced AI swarms could generate unique, personalized content and adapt in real time to public reactions, potentially creating illusions of grassroots support for specific candidates or positions where none naturally exists.

While the technology remains largely experimental, early signs of its building blocks are already visible in the digital landscape. During British Columbia’s 2025 wildfire season, officials identified AI-generated images falsely depicting firefighting operations circulating online, causing confusion during emergency response efforts. Additionally, research examining Canada’s most recent federal election detected AI-manipulated political images influencing online conversations.

Kevin Leyton-Brown, a computer scientist at the University of British Columbia and co-author of the paper, emphasized that the threat remains primarily theoretical for now.

“We don’t think this is a huge-scale thing that is happening in the world today,” Leyton-Brown said in an interview. “I wouldn’t say that I would be enormously worried that this is even happening in a Canadian election federally today.”

Leyton-Brown anticipates that major geopolitical contests, particularly U.S. congressional elections, will likely serve as the first proving grounds for these technologies. The paper already notes that deepfakes appeared during the 2024 elections in India, Taiwan, Indonesia, and the United States.

Smaller municipal elections, particularly in tight-knit communities, may be less attractive targets for several reasons. Local issues tend to be less polarized along ideological lines, and face-to-face relationships often carry more weight than online rhetoric in shaping voter opinions.

“I don’t think [smaller communities] are where they’re going to cut their teeth on it,” Leyton-Brown noted. “But I do think in the longer term, it’s coming.”

The researchers warn that AI swarms could gradually shape broader narratives, contaminate online information sources, and erode institutional trust, even if individual effects appear subtle. According to Leyton-Brown, the most immediate impact may not be successful manipulation but growing public skepticism about online content.

“I think the bigger thing is that it changes the extent to which we believe anything,” he said. “We’re going to end up in a world where we don’t listen online to random people we don’t know, because we don’t believe that they’re people.”

This shift could elevate the importance of trusted information sources, particularly those operating at the community level. Local newspapers, independent journalists, and established media outlets may become even more crucial as safeguards against misinformation.

“I think unbiased, trusted sources of information are going to be yet more important as we go forward,” Leyton-Brown added. “Those reliable kind of watchdogs at the municipal level are really critical.”

For now, smaller communities may benefit from a simple advantage: familiarity. Leyton-Brown suggested that everyday habits like relying on trusted local media and maintaining real-world connections could serve as natural defenses against digital manipulation.

“It’s a small mountain town of people who mostly know each other,” he said, referring to communities like Whistler. “Maybe go to the bar with your friends and talk to them in the real world. Maybe this just emphasizes the importance of connections with physical people in the world.”

As these technologies continue to evolve, the research underscores the need for both technical safeguards and community resilience to protect democratic processes at all levels from increasingly sophisticated digital threats.

8 Comments

This study highlights the complex challenges we face as AI capabilities advance. While the technology holds great potential, bad actors could exploit it to undermine democratic processes. Strengthening community bonds may be an important part of the solution.

Absolutely. Maintaining vibrant local communities and grassroots civic engagement could be crucial in the fight against coordinated AI-driven disinformation campaigns.

Fascinating study on the risks of AI-driven misinformation. Coordinated ‘AI swarms’ could indeed be a major threat to democratic discourse and institutions. Curious to see if stronger local communities can help mitigate these challenges.

Agreed, the potential for AI to manufacture artificial consensus is quite concerning. Local social ties may be a helpful buffer, but vigilance and robust fact-checking will be crucial.

Fascinating and concerning research. The threat of ‘AI swarms’ generating artificial consensus is very real. While local community ties may help, we’ll need robust fact-checking and media literacy efforts to combat this challenge.

This is a worrying trend, but I’m glad researchers are studying it. AI-generated content can be so convincing and hard to detect. Strengthening community-level connections could be an effective defense against such coordinated influence campaigns.

You make a good point. Grassroots, human-to-human interactions may be a powerful antidote to AI-fueled disinformation. Building trust and civic engagement at the local level could be key.

Scary stuff. The ability of AI systems to infiltrate online communities and manufacture consensus is alarming. But I’m hopeful that strong local social ties can serve as a bulwark against these manipulative tactics.