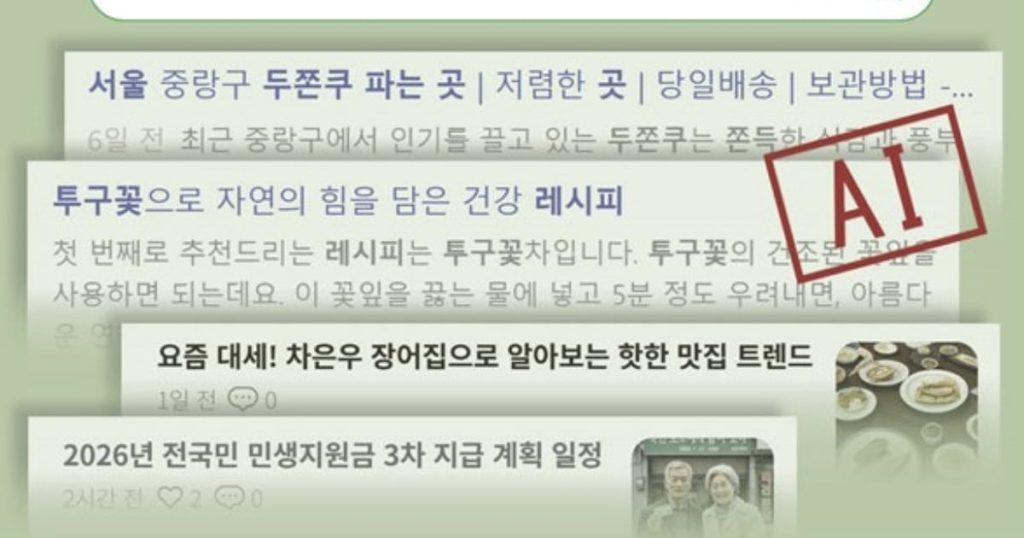

As the artificial intelligence revolution reshapes digital landscapes, search engines are facing mounting criticism for delivering misleading or entirely fabricated information through their AI-powered features. Users across major platforms report growing frustration with the quality and reliability of search results, raising serious concerns about the technology’s readiness for mainstream implementation.

A recent surge of complaints has emerged from users encountering inaccuracies in AI-generated search summaries and responses. One widely circulated example involved Google’s AI Summary feature, which incorrectly suggested that consuming small amounts of silica gel—the desiccant commonly found in product packaging—would be harmless. This directly contradicted medical guidance about the substance, which can cause significant discomfort and potentially serious health issues if ingested.

Such incidents aren’t isolated. Microsoft’s Bing AI has similarly faced backlash for generating factually incorrect information across various topics. The problem stems from how these systems operate—large language models synthesize information from vast datasets but lack true understanding, creating plausible-sounding but sometimes entirely fabricated responses.

“These AI systems aren’t actually retrieving facts—they’re predicting what words should come next based on statistical patterns,” explains Dr. Emily Chen, a digital ethics researcher at Northwestern University. “When they lack sufficient training data on specific topics or encounter ambiguous questions, they can confidently present misinformation as fact.”
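The mechanism Chen describes can be illustrated with a deliberately tiny sketch. The code below is a toy bigram model, not how Google's or Bing's systems are built: production language models are large neural networks, and the corpus, function names, and greedy word choice here are all assumptions made for illustration. It only counts which word tends to follow which, then extends a prompt with the most frequent continuation.

```python
from collections import Counter, defaultdict

# Tiny made-up corpus (an assumption for illustration, not real training data).
CORPUS = (
    "silica gel is a desiccant . "
    "silica gel is not food . "
    "table salt is safe to eat . "
    "table sugar is safe to eat ."
)


def train_bigrams(text):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    words = text.split()
    for prev, nxt in zip(words, words[1:]):
        counts[prev][nxt] += 1
    return counts


def complete(counts, prompt, max_words=4):
    """Greedily append the most frequent next word, stopping at a period."""
    words = prompt.split()
    for _ in range(max_words):
        followers = counts.get(words[-1])
        if not followers:
            break
        nxt = followers.most_common(1)[0][0]
        if nxt == ".":
            break
        words.append(nxt)
    return " ".join(words)


model = train_bigrams(CORPUS)
print(complete(model, "silica gel is"))  # prints: silica gel is safe to eat
```

Nothing in the toy corpus says silica gel is safe to eat, yet the model produces that sentence because "safe" is the most common word after "is". The output is fluent and statistically likely, but it is not a retrieved fact.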

The consequences extend beyond mere annoyance. In fields where accuracy is paramount—healthcare, finance, academic research, and journalism—the proliferation of AI-generated misinformation poses substantial risks. Medical professionals have voiced particular concern about patients receiving incorrect health information that appears authoritative due to its presentation in search results.

Search engine companies acknowledge these challenges while defending their ongoing AI integration. Google spokesperson Michael Harrison stated that the company is “continuously improving our systems” and emphasized that AI features remain experimental. “We’re implementing more rigorous fact-checking protocols and making the limitations of these systems more transparent to users,” Harrison noted.

Microsoft has similarly pledged enhancements to Bing’s AI reliability, announcing expanded partnerships with fact-checking organizations and improved citation capabilities to help users verify information sources. However, critics argue these measures are insufficient given the fundamental limitations of current AI technology.

The timing of these issues coincides with intensifying competition in the search market. Since ChatGPT’s breakthrough popularity in late 2022, major tech companies have rushed to incorporate similar AI capabilities into their products, potentially prioritizing speed to market over reliability.

“We’re witnessing the consequences of a technological arms race,” says Professor David Kim, who studies digital information systems at Stanford University. “Companies fear being left behind, so they’re deploying these systems before they’re truly ready for critical information retrieval tasks.”

Industry analysts suggest the problems could have lasting implications for user trust. A recent survey by the Digital Information Literacy Institute found that 62% of regular internet users expressed declining confidence in search engine accuracy over the past year, with 47% specifically citing concerns about AI-generated content.

For users seeking reliable information, experts recommend cross-checking important facts across multiple sources, looking for citation links within AI responses, and maintaining healthy skepticism toward definitive-sounding answers, especially on complex or specialized topics.

Some technology advocates remain optimistic about long-term improvements. “We’re in the awkward adolescence of AI search technology,” notes Samantha Park, technology director at the Center for Responsible Computing. “These systems will likely become significantly more reliable as companies implement better guardrails and verification mechanisms.”

Until then, the situation leaves users in a precarious position: navigating an information ecosystem where cutting-edge technology sometimes delivers not enlightenment but convincingly presented falsehoods, all behind the familiar interface of trusted search tools.

8 Comments

This issue with Bing AI is really concerning. Search engines must do better at verifying the accuracy of their AI-powered features before releasing them publicly. Reputations and public trust are at stake here.

Agree completely. Deploying AI systems without rigorous testing and oversight is a recipe for disaster. Search engines should prioritize quality control to maintain credibility.

Ingesting silica gel? That’s a scary example of how dangerous AI-generated misinformation can be. Search engines have a responsibility to ensure accuracy, especially on health-related topics. Consumers deserve reliable, fact-based information.

Worrying to see AI-generated misinformation creeping into search results. Quality and reliability should be the top priorities for these platforms. Hope they can quickly address these issues before more users are misled.

Agreed, it’s critical that search engines maintain high standards of accuracy, especially as AI continues to advance. Relying on machine-generated information without proper safeguards could have serious consequences.

As the use of AI continues to grow, it’s crucial that search engines maintain stringent standards. Delivering misinformation, even inadvertently, can have serious consequences. Transparency and accountability will be essential moving forward.

As someone who relies heavily on search engines for information, this is quite concerning. Generating factually incorrect content, even inadvertently, undermines trust in these platforms. Rigorous testing and oversight seem necessary.

Absolutely. Search engines need to be extremely cautious about deploying AI features that could disseminate misinformation. Transparency and accountability will be key going forward.