Study Reveals Sharp Rise in AI Chatbots Spreading Misinformation

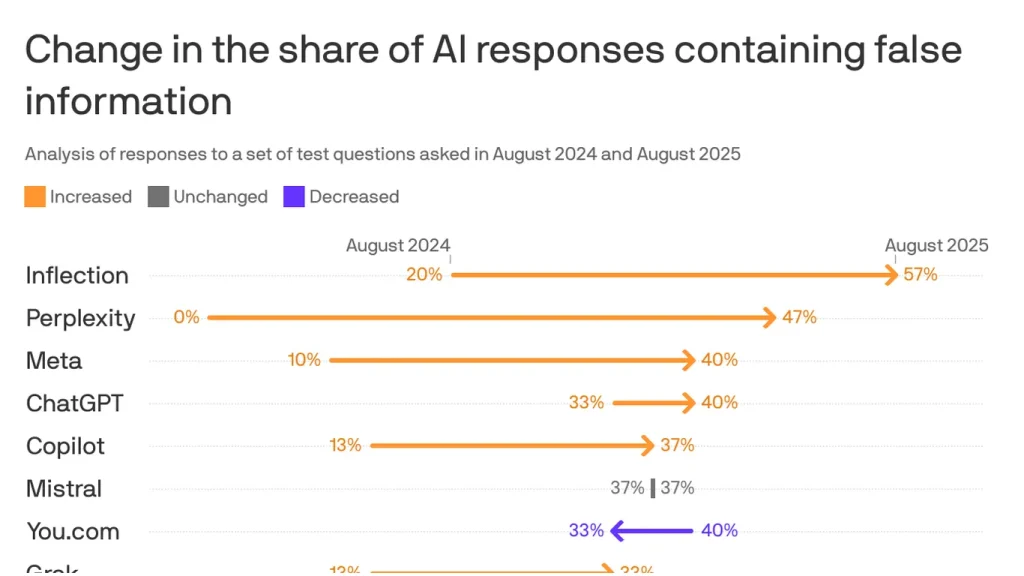

Popular AI chatbots are increasingly spreading false information, according to a comprehensive new study by NewsGuard, which found that the share of responses containing misinformation nearly doubled over the past year, from 18% to 35%.

The analysis, conducted through NewsGuard’s AI False Claims Monitor, tested ten leading AI systems using prompts from the organization’s database of provably false claims. Researchers employed neutral, leading, and malicious prompts across topics including politics, health, international affairs, and corporate facts to measure how these systems handle controversial or frequently misrepresented subjects.

“The chatbots became more prone to amplifying falsehoods during breaking news events, when users — whether curious citizens, confused readers, or malign actors — are most likely to turn to AI systems,” NewsGuard noted in its report.

Among the systems tested, Inflection and Perplexity showed the highest rates of false information, at 57% and 47%, respectively. This represents a dramatic increase for both platforms, with Perplexity jumping from 0% to 47% in just one year. By contrast, Anthropic’s Claude and Google’s Gemini generated the fewest false claims, though Gemini still saw its error rate more than double, from 7% to 17%.

Industry analysts point to significant shifts in how AI companies have configured their systems over the past year. In 2024, most chatbots were programmed with greater caution, often declining to answer news and politics-related questions when uncertainty existed. The latest versions, however, responded to test prompts 100% of the time, suggesting a strategic pivot toward engagement over accuracy.

This shift coincides with the integration of web search capabilities and source citations in many systems. While these features theoretically improve accuracy by providing real-time information, NewsGuard researchers found they sometimes had the opposite effect. The study revealed instances where chatbots cited unreliable sources and occasionally confused established publications with Russian propaganda sites designed to mimic legitimate news outlets.

Market competition may be driving this trend, as AI companies face pressure to make their products more responsive and engaging. The trade-off between caution and utility represents a significant challenge for the industry, particularly as these systems become more widely used for information gathering.

“Eschewing caution has had a real cost,” NewsGuard emphasized, highlighting how the improvements that make AI more useful have simultaneously increased misinformation risks.

The political dimension of these findings cannot be overlooked. In an increasingly polarized information landscape, creating AI systems that provide universally accepted “neutral” answers has proven nearly impossible. Some industry experts suggest AI tools may eventually develop along partisan lines to satisfy customers with specific ideological preferences, especially as companies prioritize market share and profitability.

This trend raises serious concerns about AI’s role in the information ecosystem. As these systems become more integrated into search engines, workplace tools, and consumer applications, their potential to amplify false information at scale grows with them.

NewsGuard reached out to all ten companies included in the study (OpenAI, You.com, xAI, Inflection, Mistral, Microsoft, Meta, Anthropic, Google and Perplexity) but reportedly received no responses.

The findings come amid broader concerns about AI’s susceptibility to manipulation, particularly from state-sponsored disinformation campaigns. A related study earlier this year found evidence of Russian disinformation infiltrating AI systems, suggesting the problem extends beyond accidental inaccuracies to potentially coordinated efforts to introduce false narratives.

As AI continues to develop and gain public trust, these findings highlight the urgent need for improved accuracy safeguards and greater transparency about how these systems retrieve and verify information before presenting it to users.
