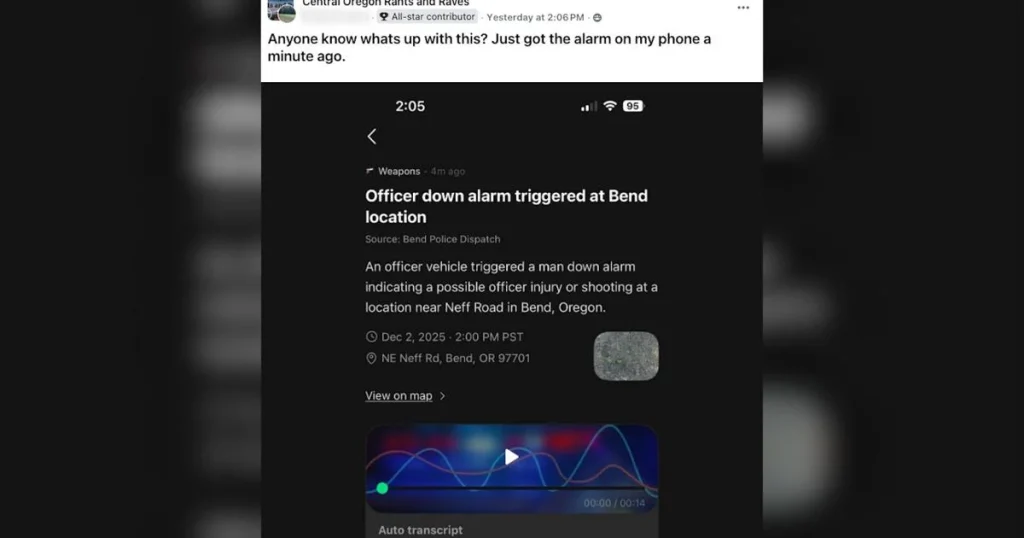

In a growing trend that concerns law enforcement, artificial intelligence is being used to generate blog posts from police scanner traffic, spreading misinformation that can confuse the public and complicate emergency situations.

The Bend Police Department in Oregon recently issued a warning about this emerging problem, noting that AI-generated blog posts created from monitored police radio communications often contain significant inaccuracies. These posts, which appear to report on local incidents and emergencies, frequently misrepresent what’s actually happening on the ground.

“These AI-generated posts are often based solely on scanner traffic without any verification of facts or context,” said a Bend Police spokesperson. “The result is content that appears legitimate but may contain completely false information or serious distortions of actual events.”

Police scanners have long been used by news organizations and concerned citizens to monitor emergency responses. Traditionally, responsible news outlets use scanner information only as a starting point, following up with official sources to confirm details before reporting. The new trend of using AI to automatically generate content from scanner traffic eliminates this crucial verification step.

Law enforcement officials explain that radio communications capture only fragments of developing situations. Initial reports frequently change as more information becomes available, and code words or technical terminology can be easily misinterpreted, especially by artificial intelligence lacking human judgment or contextual understanding.

In one recent example, an AI-generated blog post reported a major incident with multiple casualties based on preliminary scanner traffic, when the actual situation was far less severe. The false information spread quickly through social media, causing unnecessary panic in the community before officials could issue corrections.

The problem extends beyond Central Oregon, with police departments across the country reporting similar issues. In larger metropolitan areas, where scanner traffic is heavier, the volume of misleading AI-generated content has grown sharply over the past year.

Media literacy experts note that these posts often appear on websites designed to look like legitimate local news sources, making it difficult for readers to distinguish between verified reporting and AI-generated speculation.

“The sites typically have local-sounding names and may even mimic the design of established news outlets,” said Dr. Emma Roberts, a digital media professor at Pacific Northwest University. “Many readers don’t check the ‘about’ section where they might discover these sites are automated aggregators rather than newsrooms with journalists.”

The financial motivation behind these sites appears to be advertising revenue. By generating large volumes of sensational content that attracts clicks, the sites can monetize traffic regardless of accuracy.

Law enforcement agencies are encouraging residents to rely on official channels for emergency information. Many departments now use social media platforms and alert systems to provide verified information during critical incidents.

“We understand the public’s desire for immediate information during emergencies,” the Bend Police spokesperson added. “But accuracy is far more important than speed when public safety is concerned.”

The National Association of Police Organizations has begun developing guidelines for communities dealing with AI-generated misinformation. Its recommendations include creating dedicated verification pages where residents can check the authenticity of circulating reports.

For consumers of local news, experts recommend several strategies to avoid being misled: verify information through multiple sources, check whether stories are attributed to actual reporters, be wary of sites with excessive ads and minimal contact information, and follow official emergency service accounts for reliable updates.

This emerging challenge sits at the intersection of technology, media, and public safety, highlighting how artificial intelligence tools, while innovative, can create new problems when deployed without proper oversight or ethical considerations.

As AI technology continues to evolve, the struggle to maintain accurate public information during emergencies will likely require coordinated efforts from technology companies, media organizations, and government agencies to develop appropriate safeguards and standards.

12 Comments

I’m curious to learn more about the specific tactics these AI systems are using to generate content from police scanner traffic. Is there a way to detect and flag these AI-generated posts as potentially unreliable? Developing robust verification methods seems crucial to combat this issue.

That’s a good question. Understanding the underlying AI techniques and developing effective detection methods would be an important step. Collaboration between technology providers, law enforcement, and media outlets could help identify solutions to this growing problem.

While the use of AI to generate content from public data sources may seem efficient, the lack of human verification and fact-checking is clearly a major flaw that can lead to the spread of misinformation. This underscores the importance of maintaining human oversight and accountability in the age of AI.

Absolutely. Automation alone is not enough when it comes to sensitive topics like public safety and emergency response. Striking the right balance between AI capabilities and human validation is crucial to ensure the integrity and reliability of the information being shared.

This issue highlights the need for clear guidelines and regulations around the use of AI in news and media production. Without proper safeguards, the potential for harm from the dissemination of inaccurate information is significant. Policymakers and industry stakeholders should work together to address this challenge.

Well said. Proactive policy development and industry collaboration will be essential to mitigate the risks of AI-generated content and maintain public trust in the information ecosystem. Responsible innovation and ethical AI practices should be the priority.

Concerning to hear about the spread of misinformation from AI-generated blog posts based on police scanner traffic. These posts could potentially cause real confusion and disrupt emergency response efforts. It’s crucial that news sources verify facts before reporting, rather than just relying on unverified scanner chatter.

I agree, responsible journalism is essential to avoid amplifying false information, especially around public safety issues. Proper verification and fact-checking should be the standard, not sensationalized AI-driven content.

This highlights the risks of AI being used irresponsibly. While leveraging new technologies can be useful, there need to be safeguards in place to prevent the spread of misinformation, especially when it comes to matters of public safety and emergency response. Responsible oversight is key.

Agreed, AI has great potential but also risks if not deployed carefully. Proper regulation and accountability measures are needed to prevent misuse and the dissemination of false information, which could have serious real-world consequences.

It’s concerning to see AI being used to create content that could potentially confuse the public and hinder emergency response efforts. While the technology has many beneficial applications, the lack of human verification in this case is clearly problematic. Stronger safeguards and accountability measures are needed.

I agree, the potential for harm from the spread of misinformation is too high in this context. Responsible development and deployment of AI systems, with appropriate human oversight, should be the goal to ensure public safety and trust.