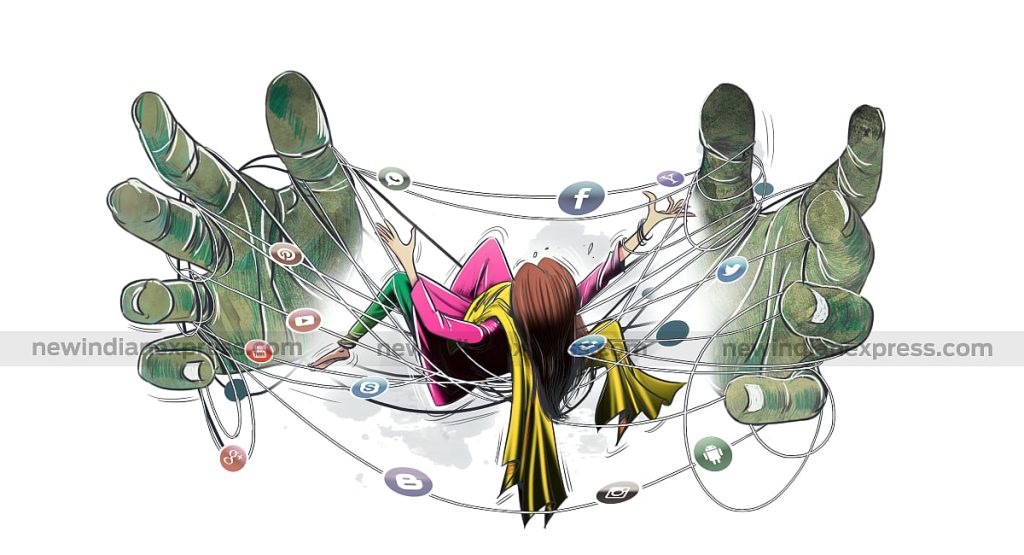

In an era of digital overload, the battle against false information has reached unprecedented levels, challenging our ability to discern truth from fiction. As content floods across social media platforms and news sites, the line between accurate reporting and manipulated narratives continues to blur, creating what experts now consider a crisis of information integrity.

Misinformation, defined as inaccurate or false information, has evolved into more dangerous forms. When deliberately weaponized, it becomes disinformation, designed specifically to deceive audiences. Malinformation, similarly, takes factual content out of context with malicious intent, particularly in interpersonal communications.

Disinformation, the more sinister of these forms, targets entire populations and seeks to manipulate public opinion and behavior. Its impact extends far beyond mere confusion, potentially swaying election outcomes and influencing perceptions of armed conflicts. Critical global discussions around climate change and public health have been particularly vulnerable to such manipulation.

“The digital landscape has created perfect conditions for false information to thrive,” says Dr. Maria Ressa, journalist and Nobel Peace Prize laureate. “What makes today’s environment different is the speed, scale, and precision with which disinformation can target specific audiences.”

Recent years have witnessed disinformation campaigns gaining unprecedented momentum, creating alternate versions of reality for different audience segments. Professional operations known as “troll farms” and automated “bot farms” systematically push tailored narratives that hijack public discourse, often drowning out fact-based conversations.

While rumors and propaganda have historically emerged during societal crises, their current reach and resilience represent a troubling development. Traditional fact-checking and logical argumentation once provided effective countermeasures, but these approaches struggle against today’s sophisticated disinformation ecosystem.

Technological acceleration has created a perfect storm. Real-time communication platforms, democratized broadcasting tools, artificial intelligence applications, and algorithmic content distribution have collectively transformed the digital media environment into an echo chamber where misleading information thrives and multiplies.

Social media platforms have attempted to implement safeguards, but critics argue these measures remain insufficient. “The business model of most platforms inherently rewards engagement, and unfortunately, provocative or misleading content often generates the most interaction,” explains digital ethicist Tristan Harris, co-founder of the Center for Humane Technology.

The World Economic Forum has recognized this threat in stark terms. Its Global Risks Report 2025 specifically highlights misinformation and disinformation as “persistent threats to societal cohesion and governance,” warning that these phenomena actively erode trust within and across societies worldwide.

The consequences extend beyond individual confusion. Communities increasingly exist in separate information ecosystems, diminishing shared reality and complicating collective problem-solving on crucial issues from pandemic response to climate action.

Media literacy initiatives have gained traction as one response to this crisis. Educational programs teaching critical evaluation of sources, recognition of emotional manipulation tactics, and understanding of how algorithms shape information exposure have shown promise in building public resilience.

Technological solutions also continue to develop, with artificial intelligence being deployed to detect synthetic media and flag potentially misleading content. However, the same technologies that help create the problem often face limitations in solving it.

As governments worldwide consider regulatory approaches, debates continue about balancing free speech protections with safeguards against harmful disinformation. The European Union’s Digital Services Act represents one of the most comprehensive attempts to create accountability for platforms regarding illegal and harmful content.

For individuals navigating this complex landscape, experts recommend a multi-source approach to news consumption, conscious consideration of emotional reactions to content, and recognition that absolute certainty rarely exists in complex issues.

The information integrity challenge represents one of the defining issues of our digital age, requiring coordinated responses from technology companies, governments, educators, and citizens to preserve the factual foundation necessary for functioning democracies.
