
AI Experts Fear Misinformation More Than Job Displacement, Pew Research Shows

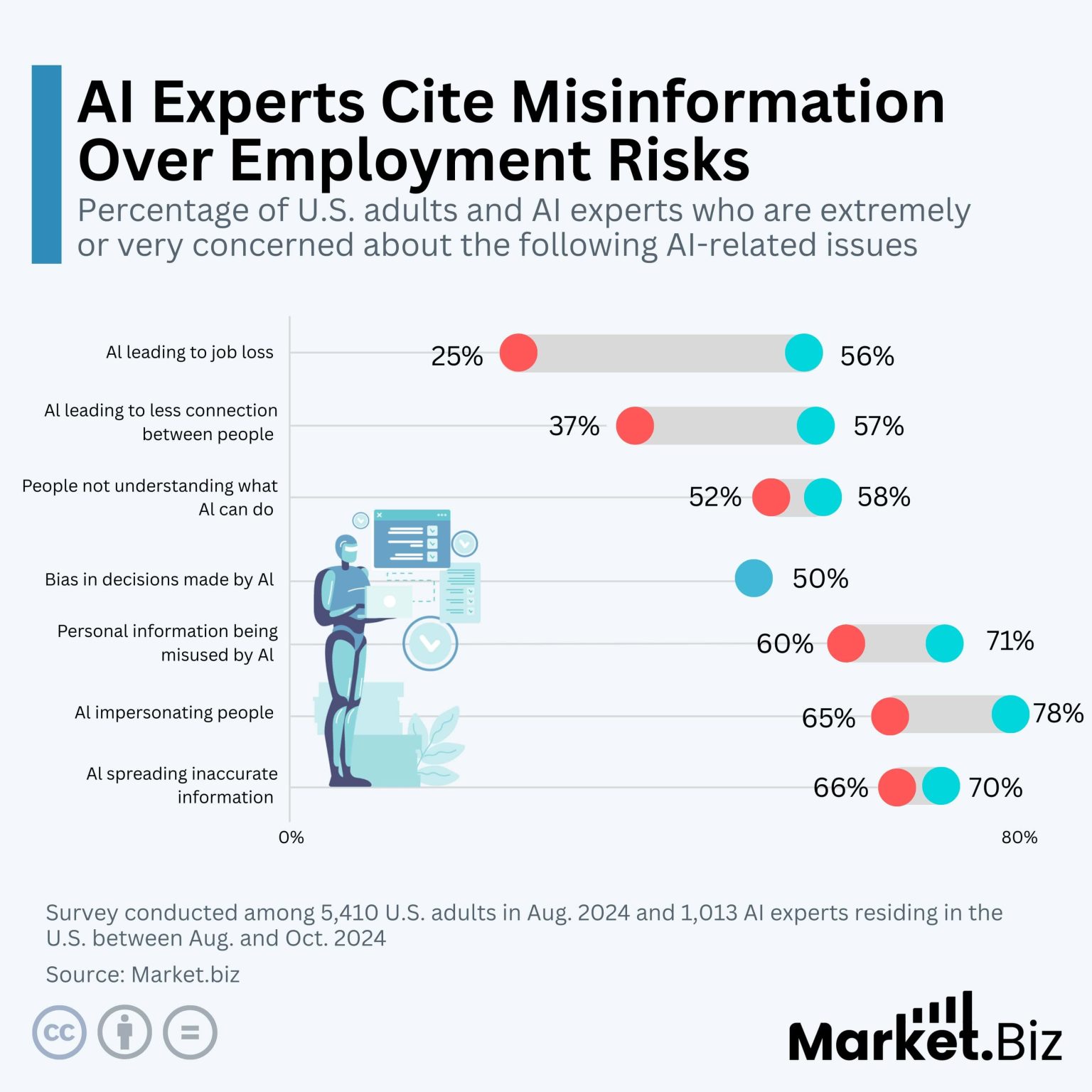

A significant divide exists between AI experts and the general public regarding artificial intelligence’s greatest risks, according to recent data from Pew Research Center. While most Americans worry about widespread job losses, specialists in the field are far more concerned about AI’s potential to spread misinformation and create convincing deepfakes.

The survey, conducted in late 2024, found that 56% of U.S. adults were extremely or very concerned about AI leading to job losses. By contrast, only 25% of AI experts shared that level of concern.

“The gap in perception highlights a fundamental misalignment in how we’re approaching AI regulation and development,” said Dr. Amara Wilson, technology policy researcher at Stanford University. “The public fears job losses while experts are raising red flags about threats to information integrity that could undermine democratic institutions.”

Experts appear more optimistic overall: 47% say they are more excited than concerned about AI advancements. The public leans the other way, with 51% saying they are more concerned than excited about the technology's impact.

The survey revealed areas of shared concern between experts and the public, including algorithmic bias and mishandling of personal data. Both groups also flagged deepfakes and AI impersonation as significant threats.

Looking ahead, the expert community maintains a positive outlook, with 56% predicting AI will have a beneficial impact on U.S. society over the next two decades. Only 17% of the general public shares this optimism.

Rising Alarm Over AI-Generated Misinformation

Recent developments have reinforced expert concerns about AI-powered misinformation. The 2026 Allianz Risk Barometer now ranks AI-generated disinformation among its top emerging threats, citing the spread of inexpensive generative tools whose output can sway elections and worsen crises.

Research from Harvard University in 2025 found that AI-synthesized images significantly increase people's susceptibility to misinformation. A February 2026 University of Melbourne analysis of "AI anxiety" linked public concern to information overload and to difficulty distinguishing authentic content from synthetic material.

Healthcare professionals have been particularly vocal, with 61% expressing high concern about medical misinformation that could affect patient care and public health messaging.

“The ability to create convincing medical misinformation at scale represents an unprecedented challenge to public health,” noted Dr. Eliza Ramirez, chief digital officer at Memorial Healthcare System. “We’re seeing fabricated clinical trial results and counterfeit medical advice that appears credible even to trained professionals.”

Technical Challenges and Market Implications

The technical sophistication of today’s AI systems presents formidable challenges for detecting synthetic content. Modern diffusion-based image generators can produce images with over 90% realism that often bypass current detection methods. This technology threatens multiple sectors, including electoral integrity, financial markets, and healthcare.

Industry experts suggest a multi-layered approach to these challenges, including content watermarking, blockchain-based provenance tracking, and adversarial training for detection systems. However, deployment of these safeguards currently lags behind the rollout of new generative AI systems in 2026.

The financial implications are also becoming clearer. Allianz reports indicate that unchecked AI misinformation risks could raise cyber insurance premiums by 20-30%, adding pressure on insurtech companies and their clients.

Regulatory Response Gaining Momentum

The regulatory landscape is evolving in response to these concerns. The EU AI Act sets transparency rules requiring AI-generated content such as deepfakes to be labeled, while various U.S. legislative efforts target deepfakes specifically. Leading AI developers such as OpenAI and Google are investing heavily in safety measures, with combined spending estimated to exceed $2 billion annually.

Companies that fail to implement adequate safeguards face potential fines of up to 7% of global revenue under some regulatory frameworks. This has accelerated consolidation in the AI safety tools market as organizations scramble to mitigate risks.

The financial stakes remain considerable. Industry forecasts suggest the AI market could reach a $1 trillion valuation if public trust is maintained, but could stagnate if misinformation incidents trigger a widespread backlash.

For businesses navigating this landscape, particularly in sensitive sectors such as finance and healthcare, robust AI auditing procedures and investment in verification technologies appear increasingly essential, not only for compliance but also for maintaining consumer trust as synthetic media proliferates.

