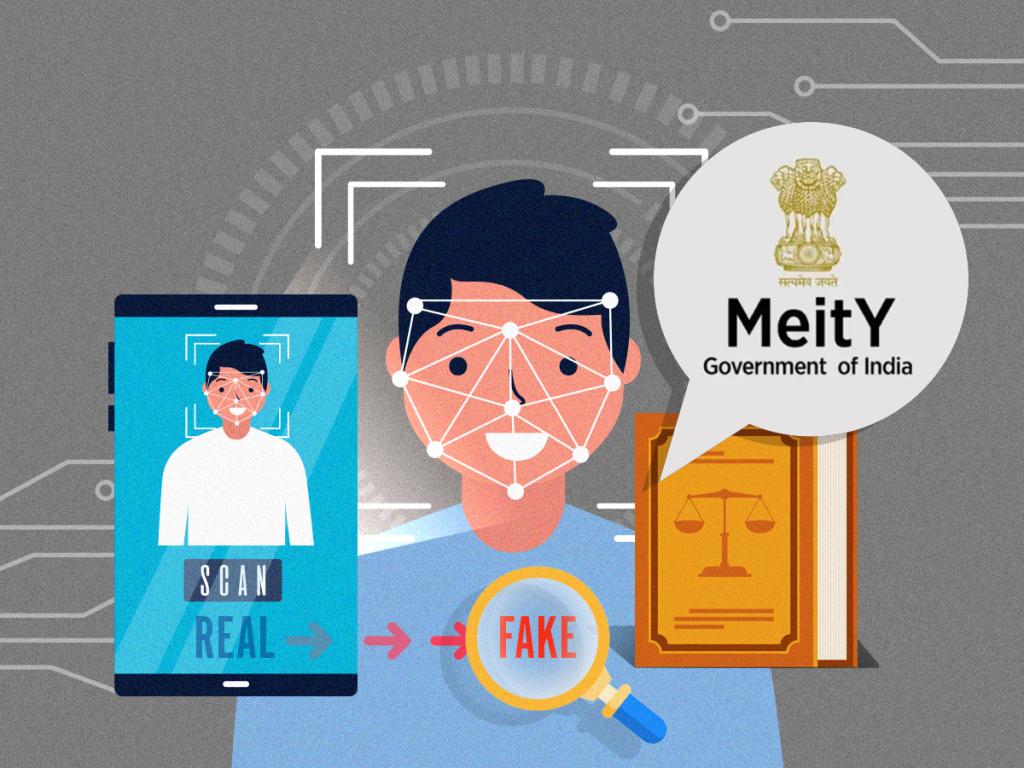

India Proposes Mandatory Labeling for AI-Generated Content on Social Media

The Indian government has unveiled draft amendments to its IT rules that would require social media users to clearly declare when posting AI-generated or modified content, as part of a broader effort to combat the proliferation of deepfakes online.

The proposed regulations, announced Wednesday by the Ministry of Electronics and Information Technology (MeitY), would require all synthetically generated content to carry a visible label covering at least 10% of the visual display area or, for audio, the first 10% of the clip's duration. Such content would also have to carry permanently embedded identifying metadata so that it can be recognized as synthetic instantly.

“This initiative was necessitated by a deluge of complaints over dangerous deepfakes by citizens, including Parliamentarians,” said Electronics and IT Minister Ashwini Vaishnaw during the announcement.

Stakeholders have until November 6 to submit feedback on the proposal, though officials have not specified when the rules might take effect. The changes build upon previous IT rule amendments from 2022 and 2023.

The draft introduces a formal definition of “synthetically generated information” as content that is “artificially or algorithmically created, modified, or altered using computer resources in a manner that appears authentic.” Because this definition would be written into existing provisions governing unlawful information, synthetic content would fall under the same regulatory framework as other harmful material.

These regulations would affect all significant social media intermediaries (SSMIs) operating in India, meaning platforms with 5 million or more registered users. They include major players such as YouTube, Facebook, Instagram, WhatsApp, X (formerly Twitter), Snap, LinkedIn, and ShareChat, which would be required to deploy automated tools to verify user declarations and ensure that synthetic content is properly labeled.

Industry experts have expressed concerns about the broad scope of the proposed regulations. Aman Taneja, partner at Ikigai Law, noted, “The definition of synthetically generated information is very broad and would cover even things like filters, or even a cropped image. The mandatory visual/audio watermarks on all content generated by AI tools ignores several beneficial use cases.”

Some legal observers worry the rules could fundamentally alter the liability framework for platforms. Sachin Dhawan, deputy director at tech policy think tank The Dialogue, suggested the amendments might dilute safe harbor protections for intermediaries, potentially subjecting them to criminal liability for user content even without government or court notification.

Akash Karmakar, partner at law firm Panag & Babu, characterized the changes as “a shift of liability for third-party content unto the intermediaries,” making them “responsible for taking down AI-generated content.”

The proposal appears to mirror labeling approaches already taken by China and the European Union, as India grapples with the rapid evolution of AI technology and its potential for misuse.

In a related development, MeitY also amended the IT rules to limit who can order platforms to remove content. Starting November 15, only senior government officials of joint secretary rank or above, or police officers of deputy inspector general (DIG) rank or above, will be permitted to issue such orders.

The restriction is meant to address concerns that lower-level officials were issuing takedown requests indiscriminately, creating complications for platforms. In addition, all such notices will be reviewed monthly by the secretaries of the Home Ministry and MeitY, along with the secretaries of state IT departments.

“The accountability of the government increases with this change, and will be giving a reasoned intimation whenever any such order is passed,” Vaishnaw explained.

As of publication, major platforms including Google, Meta, X, Snap, and ShareChat have not responded to requests for comment on the proposed regulations.
