
Silicon Valley Venture Firm Backs Controversial AI Bot Network for Social Media

A new Silicon Valley startup backed by prominent venture capital firm Andreessen Horowitz (a16z) is raising eyebrows with technology designed to create networks of AI-powered social media accounts that mimic human behavior – a practice that appears to violate the policies of major platforms.

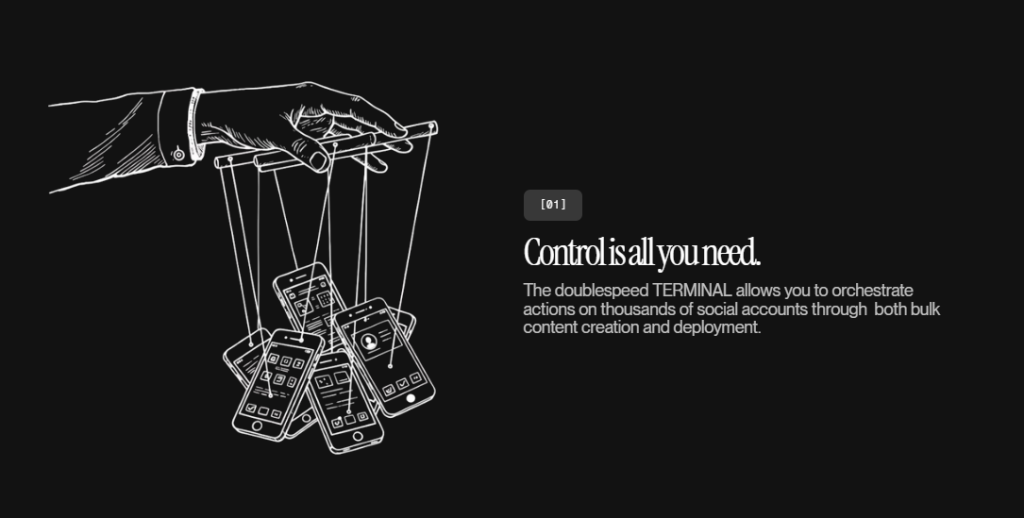

Doublespeed, which has reportedly secured $1 million in funding through a16z’s “Speedrun” accelerator program, offers clients the ability to “orchestrate actions on thousands of social accounts through both bulk content creation and deployment,” according to the company’s website.

The service essentially provides automated astroturfing – creating the illusion of widespread grassroots support or interest – by using artificial intelligence to generate content across multiple accounts simultaneously. What distinguishes Doublespeed’s approach is its focus on evading detection systems meant to identify inauthentic behavior.

“Our deployment layer mimics natural user interaction on physical devices to get our content to appear human to the algorithms,” the company states on its website. While Doublespeed did not respond to requests for comment, its marketing materials suggest sophisticated methods for circumventing platform safeguards.

In a recent podcast appearance, Doublespeed co-founder Zuhair Lakhani revealed that the company employs a “phone farm” – similar to the “click farms” that use hundreds of physical mobile devices to generate fake engagement – to operate its AI-generated TikTok accounts. Lakhani claimed one client generated 4.7 million views in less than four weeks using just 15 of these AI accounts.

The company’s platform reportedly automates 95% of content creation, with humans performing minimal “touch up” work at the end of the process. Doublespeed’s system also analyzes performance metrics to optimize future content, using successful posts as “training data for what comes next.”

Pricing for access to Doublespeed’s dashboard ranges from $1,500 to $7,500 per month, with the premium tier allowing clients to generate up to 3,000 posts monthly. The dashboard offers tools for creating videos and “carousels” – slideshows common on Instagram and TikTok – across various themes, from lifestyle content to religious messaging.

On the company’s Discord server, staff explained that accounts are “warmed up” on both iOS and Android devices before deployment, creating the appearance of established users rather than new or bot accounts. While currently focused on TikTok, Lakhani mentioned internal demos for Instagram and Reddit, though he stated the service doesn’t support “political efforts.”

When contacted, a Reddit spokesperson confirmed that Doublespeed’s service would violate the platform’s terms of service. TikTok, Meta, and X did not respond to requests for comment.

The backing from Andreessen Horowitz raises additional questions about potential conflicts of interest, as Marc Andreessen, the firm’s co-founder, sits on Meta’s board of directors. Meta’s policies explicitly prohibit “inauthentic identity representation,” the very practice that appears central to Doublespeed’s business model.

While similar AI-generated content tools exist, industry observers note that Doublespeed's approach represents a more overt attempt to manipulate social media at scale. What further sets it apart is its backing from one of Silicon Valley's most influential venture capital firms, which could lend legitimacy to practices that platforms have long fought against.

The emergence of such technology highlights the escalating challenge social media companies face in maintaining authentic user environments as AI tools become more sophisticated at mimicking human behavior. It also raises questions about the responsibility of investors in funding technologies designed specifically to circumvent platform integrity measures.

As artificial intelligence continues to advance, the line between authentic and manufactured social media engagement becomes increasingly blurred, presenting new challenges for platforms, users, and regulators alike.

