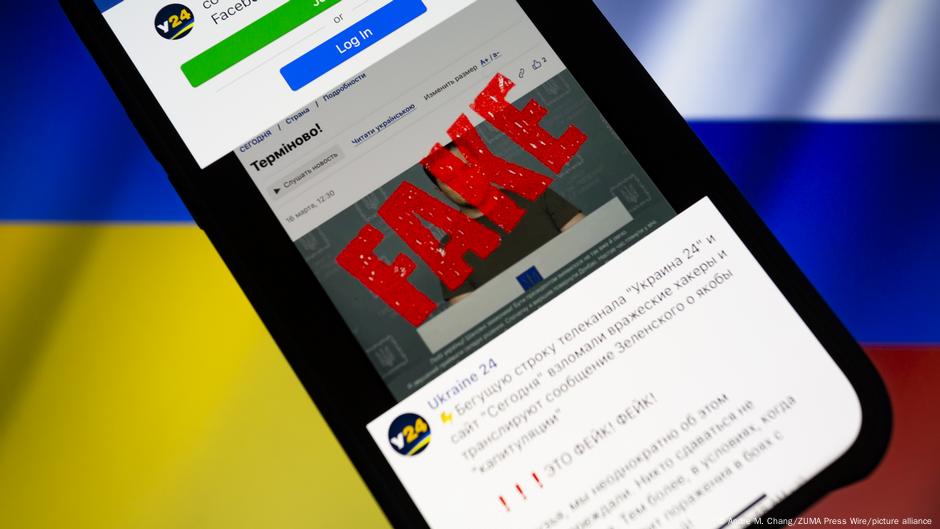

Russian propaganda continues to infiltrate social media platforms through increasingly sophisticated methods, according to recent findings by the U.S. Justice Department and German media investigations.

The Kremlin has developed subtle techniques to embed propaganda in everyday social media feeds, often in ways that users wouldn’t recognize as foreign influence operations. Rather than relying solely on obvious state-sponsored content, Russian operatives now boost and amplify seemingly independent content that aligns with Moscow’s geopolitical objectives.

Tech giants have taken steps to combat the problem. Meta, the parent company of Facebook, Instagram, and Threads, has banned Russian state media outlets such as RT (formerly Russia Today), but these measures appear insufficient against evolving tactics.

“What we’re seeing now is far more sophisticated than the relatively crude influence operations from 2016,” explains Dr. Samantha Bennett, a disinformation researcher at the Atlantic Council’s Digital Forensic Research Lab. “Today’s operations focus on amplifying existing divisions and promoting content that appears organic but serves Russian interests.”

The Justice Department investigation revealed networks of fake accounts and shell companies operating across multiple platforms to disseminate pro-Russian narratives regarding the Ukraine war, NATO expansion, and Western political divisions. These networks often laundered Kremlin talking points through seemingly legitimate news sites before promoting them across social media.

German media outlets, including Der Spiegel and Süddeutsche Zeitung, uncovered similar patterns targeting German audiences with narratives designed to undermine support for Ukraine and create domestic political tension within Germany.

“The content doesn’t always explicitly promote Russia,” says Thomas Müller, a cybersecurity analyst who contributed to the German investigations. “Instead, it focuses on amplifying legitimate grievances—energy costs, inflation, immigration concerns—and subtly connecting them to Western support for Ukraine or sanctions against Russia.”

Social media algorithms inadvertently assist these operations by promoting content that generates strong engagement. Content that triggers emotional responses—particularly anger or fear—receives greater distribution, creating a landscape where inflammatory content thrives regardless of accuracy.

“The platform incentives haven’t fundamentally changed,” notes media scholar Dr. Rebecca Collins. “Content that generates strong reactions gets amplified. Russian operators understand this ecosystem perfectly and exploit it systematically.”

Tech companies face significant challenges identifying these operations. Unlike previous disinformation campaigns that relied on fake news stories with fabricated quotes or events, today’s influence operations often manipulate real information by removing context, selectively emphasizing certain facts, or connecting unrelated events to suggest false patterns.

Recent investigations found networks targeting specific demographic groups with tailored content. For example, politically conservative Americans might see content emphasizing the financial cost of supporting Ukraine, while progressives might see content suggesting the conflict diverts resources from domestic social programs.

Some experts warn that the problem extends beyond Russia. “The playbook is being adopted by various actors globally,” says cybersecurity expert Martin Chen. “Once these techniques prove effective, they spread quickly to other state and non-state actors with similar objectives.”

The persistence of these influence operations despite platform bans highlights the limitations of current countermeasures. While removing obvious state-sponsored outlets like RT addresses the most visible forms of propaganda, it fails to counter the more insidious amplification networks that push the same narratives through seemingly independent channels.

Media literacy advocates emphasize the importance of critical consumption habits. “Users need to question why certain content appears in their feeds,” says media educator Julia Fernandez. “Who benefits from this narrative? What might be missing from this story? These are questions we all need to ask regularly.”

As these tactics continue to evolve, the line between legitimate debate and manufactured consensus becomes increasingly blurred, presenting a significant challenge to democratic discourse in the digital age.
