The insidious rise of bot farms is reshaping social media manipulation, allowing coordinated campaigns to artificially inflate the popularity of ideas, stocks, and political narratives on an unprecedented scale, cybersecurity experts warn.

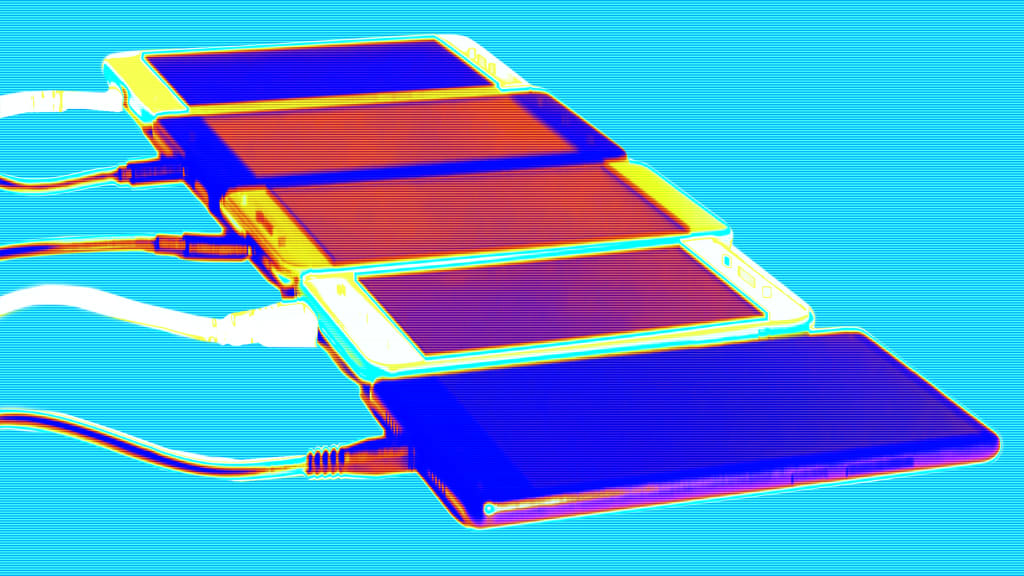

Unlike conventional software bots, modern bot farms employ racks of actual smartphones in data-center-like facilities, making them nearly impossible to detect. These sophisticated operations use SIM cards, mobile proxies, IP geolocation spoofing, and device fingerprinting to create the appearance of authentic human engagement.

“We know that China, Iran, Russia, Turkey, and North Korea are using bot networks to amplify narratives all over the world,” says Ran Farhi, CEO of Xpoz, a threat detection platform that uncovers coordinated manipulation campaigns.

These operations exploit social media algorithms by generating artificial engagement – likes, shares, and comments – to make posts appear to be trending. Meta refers to this as “coordinated inauthentic behavior,” a phenomenon that tricks platforms into showing content to more real users.

“It’s very difficult to distinguish between authentic activity and inauthentic activity,” explains Adam Sohn, CEO of Narravance, a social media threat intelligence firm whose clients include major social networks. “It’s hard for us, and we’re one of the best at it in the world.”

While manipulation of public perception isn’t new, its scale and sophistication have reached unprecedented levels. Financial markets have become particularly vulnerable, with bot networks flooding investment communities on platforms like Reddit and Discord with fake enthusiasm for certain stocks.

“We find so many instances where there’s no news story,” says Adam Wasserman, CFO of Narravance. “There’s no technical indicator. There are just bots posting things like ‘this stock’s going to the moon’ and ‘greatest stock, pulling out of my 401k.’ But they aren’t real people. It’s all fake.”

The problem extends far beyond financial markets. These same techniques are being deployed to influence elections, incite social unrest, and shape public perception on geopolitical issues.

Jacki Alexander, CEO of pro-Israel media watchdog HonestReporting, points to changing information consumption habits: “People under the age of 30 don’t go to Google anymore. They go to TikTok and Instagram and search for the question they want to answer. It requires zero critical thinking skills but somehow feels more authentic.”

Meanwhile, major platforms appear to be retreating from the fight against misinformation. X (formerly Twitter) dismantled much of its anti-misinformation team after Elon Musk’s takeover, Meta is reducing third-party fact-checking, and YouTube has rolled back features designed to combat false information.

Bot operators have grown increasingly sophisticated in their methods. They establish dormant accounts months or years before activation, complete with realistic profiles and behaviors. These bots strategically infiltrate interest communities aligned with certain biases, earning trust through participation before activating for specific campaigns.

“Bot accounts lay dormant, and at a certain point, they wake up and start to post synchronously,” explains Valentin Châtelet, research associate at the Digital Forensic Research Lab of the Atlantic Council. “They like the same post to increase its engagement artificially.”
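The synchronized liking Châtelet describes can be illustrated with a small sketch. This is not the method used by any firm or lab quoted here. It is a minimal illustration that assumes a hypothetical log of like events, each an `(account_id, post_id, unix_timestamp)` tuple. The function name `coordinated_pairs` and the thresholds `window_seconds` and `min_shared_posts` are invented for the example. It flags pairs of accounts that repeatedly like the same posts within a short window of each other.

```python
from collections import defaultdict
from itertools import combinations


def coordinated_pairs(events, window_seconds=60, min_shared_posts=3):
    """Flag account pairs that like the same posts at nearly the same time.

    events: iterable of (account_id, post_id, unix_timestamp) tuples
    (a hypothetical log format assumed for this sketch).
    """
    # Group likes by post so accounts are only compared within the same post.
    likes_by_post = defaultdict(list)
    for account, post, ts in events:
        likes_by_post[post].append((ts, account))

    # Map each account pair to the set of posts they liked close together.
    shared = defaultdict(set)
    for post, likes in likes_by_post.items():
        # Pairwise comparison is quadratic per post; fine for a sketch only.
        for (ts_a, acc_a), (ts_b, acc_b) in combinations(likes, 2):
            if acc_a != acc_b and abs(ts_b - ts_a) <= window_seconds:
                shared[tuple(sorted((acc_a, acc_b)))].add(post)

    # Keep only pairs that co-liked enough distinct posts to look suspicious.
    return {
        pair: posts
        for pair, posts in shared.items()
        if len(posts) >= min_shared_posts
    }


# Made-up sample data: two accounts acting in lockstep, one acting independently.
events = [
    ("bot_1", "p1", 1000), ("bot_2", "p1", 1010),
    ("bot_1", "p2", 5000), ("bot_2", "p2", 5020),
    ("bot_1", "p3", 9000), ("bot_2", "p3", 9005),
    ("human_1", "p1", 4000), ("human_1", "p3", 9030),
]
flagged = coordinated_pairs(events)
print(flagged)
```

In this toy data only the `bot_1` and `bot_2` pair is flagged, because `human_1` overlaps with them on a single post. Real campaigns stagger their timing and mix in organic-looking activity, so a fixed-window rule like this would likely miss most of what the experts quoted here are describing.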

The integration of AI has further advanced these capabilities. Bot operators now use platforms like ChatGPT and Claude to generate personalized, convincing content that sounds authentically human. As Pratik Ratadiya, a researcher who leads machine learning at Narravance, notes: “Social media algorithms are not evolving quick enough to outperform bots and AI. In the game of cat and mouse, the mice are winning.”

Commercial bot farms have emerged as a thriving industry, charging approximately one cent per action to like posts, follow accounts, or leave comments. These services advertise on platforms like Fiverr and Upwork, often under euphemistic labels like “growth marketing services.”

The consequences of these campaigns can be far-reaching. During the October 7, 2023, Hamas attack on Israel, Russian and Iranian bot networks flooded social media with false claims suggesting the attack was an Israeli inside job. Bot farms also amplified misleading information about hospital bombings in Gaza, creating narratives that persisted even after corrections were published.

In the entertainment world, similar tactics have been deployed in celebrity disputes. Blake Lively’s lawsuit against Justin Baldoni alleged the use of bot farms to manipulate public sentiment against her during a promotional dispute over their film “It Ends With Us.”

As bot farm capabilities continue to evolve, distinguishing authentic discourse from manufactured sentiment becomes increasingly challenging. “We think most of what we see online is real. But most of what we see is deceptive,” warns Ori Shaashua, chairman of Xpoz.

In this environment, traditional metrics of online popularity – likes, shares, star ratings – have lost much of their meaning, especially on contentious topics where passion and manipulation often intersect.

16 Comments

The use of bot farms to artificially amplify certain narratives or promote particular views is a serious problem that undermines the integrity of online discussions and decision-making. I hope regulators and platform owners take strong action to address this issue.

Agreed. Combating coordinated disinformation campaigns should be a top priority for social media platforms and policymakers. Maintaining trust and credibility in online spaces is crucial for a healthy information ecosystem.

This article highlights the growing challenge of combating coordinated disinformation and manipulation on social media. While the rise of bot farms is troubling, I’m curious to learn more about the specific techniques and tools used to detect and counter these sophisticated operations.

That’s a good point. Understanding the methods employed by bot farms is key to developing effective countermeasures. Increased transparency and collaboration between platforms, researchers, and policymakers will be essential.

This article highlights the alarming trend of bot farms infiltrating social media platforms to manipulate public opinion. As someone with a keen interest in the mining and energy sectors, I’m particularly concerned about the potential for these tactics to be used to influence discussions and perceptions around critical commodities and industries.

I share your concerns. The ability of bot farms to artificially amplify narratives and create the illusion of widespread support or opposition could have significant consequences for the mining, commodities, and energy industries. Vigilance and proactive measures are essential to mitigate these threats.

The article highlights the sophisticated and evolving tactics used by bot farms to evade detection and manipulate online discourse. While the challenge is daunting, I’m hopeful that advancements in AI-powered detection and increased cooperation between platforms, researchers, and policymakers can help address this issue.

I share your optimism. Developing more effective tools and strategies to combat coordinated disinformation campaigns is crucial for preserving the integrity of online spaces and public discourse.

The rise of bot farms and their ability to artificially inflate engagement and popularity on social media is a serious threat to the integrity of online discourse. I’m curious to learn more about the specific technologies and techniques used by these coordinated manipulation campaigns.

Understanding the methods employed by bot farms is crucial for developing effective countermeasures. I hope the article’s authors or other experts can provide more detailed insights into the technical aspects of these sophisticated operations.

This is a concerning development. Bot farms that can manipulate social media platforms to artificially inflate attention and engagement are a serious threat to online discourse. It’s crucial that platforms work to detect and remove these coordinated, inauthentic campaigns.

I agree. Platforms need to invest heavily in AI-powered detection and mitigation tools to stay ahead of these bot farm operators. The integrity of online information is at stake.

This is a worrying trend that threatens to undermine the credibility and trustworthiness of social media platforms. While the article focuses on bot farms targeting political narratives, I’m concerned about the potential for similar tactics to be used in the mining and energy sectors as well.

That’s a valid concern. Coordinated attempts to manipulate public opinion around mining, commodities, and energy-related issues could have significant real-world consequences. Vigilance and proactive measures are essential to safeguard these important industries.

As someone invested in the mining and commodities sector, I’m concerned about the potential for bot farms to manipulate public opinion around related topics. It’s important that investors and the public have access to accurate, unbiased information to make informed decisions.

That’s a valid point. Coordinated disinformation campaigns targeting the mining and commodities industries could have significant financial and reputational consequences. Robust measures to identify and mitigate these threats are essential.