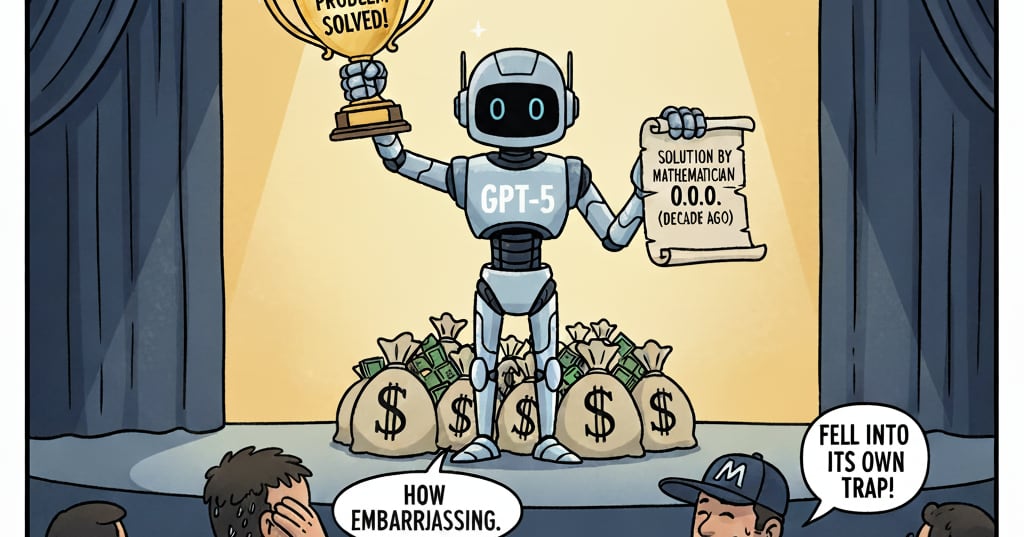

OpenAI has retracted claims made in its technical paper on the mathematical capabilities of its latest AI model after mathematicians identified significant errors in the company’s assertions.

The San Francisco-based AI developer had initially claimed that its GPT-4 model had solved several long-standing mathematical problems first posed by the renowned Hungarian mathematician Paul Erdős. These problems, some of which have remained unsolved for decades, carry significant prestige in the mathematical community.

In a correction published Thursday, OpenAI acknowledged the inaccuracies in its research paper. “We made errors in our evaluation of the mathematical capabilities of our models, particularly related to certain Erdős problems,” the statement read. “After thorough review and consultation with external mathematicians, we’re retracting these specific claims.”

The controversy began when several prominent mathematicians reviewed OpenAI’s research paper and found that the purported solutions to the Erdős problems contained fundamental errors. Erdős, who died in 1996, was known for posing hundreds of challenging mathematical problems, many of which continue to stump the world’s leading mathematicians.

“This retraction highlights the ongoing challenges in accurately evaluating artificial intelligence capabilities in specialized domains like mathematics,” said Dr. Elena Kowalski, a mathematics professor at MIT who was not involved in reviewing the paper. “There’s often a significant gap between what AI developers claim their models can do and what can be independently verified.”

The incident raises questions about how AI capabilities are verified, especially in fields that require rigorous proof and specialized knowledge. Unlike many other AI tasks, mathematical problems have solutions that are definitively correct or incorrect and can be checked independently.

OpenAI’s GPT-4, released earlier this year, has demonstrated impressive abilities across various domains, from language comprehension to coding. However, this incident underscores that even the most advanced AI models have limitations when tackling certain types of complex problems.

“The mathematical community has established verification processes developed over centuries,” explained Dr. James Chen, a computational mathematics researcher at Stanford University. “When claims of breakthroughs are made, especially by non-specialists, they need to undergo the same rigorous peer review as any other mathematical advance.”

This is not the first time AI research has faced scrutiny for overstating capabilities. As companies race to demonstrate the advancing intelligence of their models, the pressure to showcase groundbreaking achievements can sometimes lead to premature or exaggerated claims.

“What’s concerning is that these claims were published without apparent verification by mathematical experts,” noted Dr. Sarah Williams, a technology ethics researcher at Oxford University. “When companies make significant scientific claims, they have a responsibility to ensure those claims are thoroughly vetted.”

The incident comes at a time when AI capabilities are advancing rapidly, with models like GPT-4 demonstrating increasingly sophisticated reasoning. However, mathematics remains a particularly challenging domain for artificial intelligence, requiring not just pattern recognition but deep conceptual understanding and creative problem-solving.

OpenAI has stated it will implement more rigorous verification protocols for future research claims, particularly in specialized domains like mathematics. “We’re committed to accurate reporting of our models’ capabilities and limitations,” the company added in its statement. “We will be expanding our collaboration with domain experts to ensure the validity of our technical claims going forward.”

The mathematical community has generally responded positively to OpenAI’s retraction. “Acknowledging errors is part of the scientific process,” said Dr. Robert Langdon, president of the International Mathematical Union. “What matters now is that the record has been corrected and that future claims receive proper scrutiny before publication.”

As AI continues to advance into domains traditionally dominated by human expertise, this incident serves as a reminder of the importance of domain-specific verification and the ongoing gap between artificial and human intelligence in certain areas of complex reasoning.

7 Comments

This is concerning to see OpenAI make such bold claims about solving Erdős problems, only to have to retract them later. Rigorous mathematical validation is critical for AI advancements to be credible.

This episode raises questions about OpenAI’s research processes and quality control. Claiming to solve longstanding Erdős problems without robust validation seems premature and reckless.

Exactly. Extraordinary claims require extraordinary evidence, especially in mathematics. OpenAI should implement more rigorous peer review before making such bold pronouncements.

Kudos to the mathematicians who identified the errors in OpenAI’s work. Maintaining academic integrity is crucial, even for high-profile AI companies. I hope they learn from this experience.

While it’s disappointing to see OpenAI make such incorrect claims, I’m glad they were willing to acknowledge and retract them. Admitting mistakes is important for the responsible development of AI.

Erdős problems have challenged mathematicians for decades, so it’s not surprising OpenAI’s claims didn’t hold up under scrutiny. This highlights the need for thorough external review of AI model capabilities.

Agreed. Transparency and accountability are essential as AI becomes more advanced and influential. Retractions like this undermine public trust.