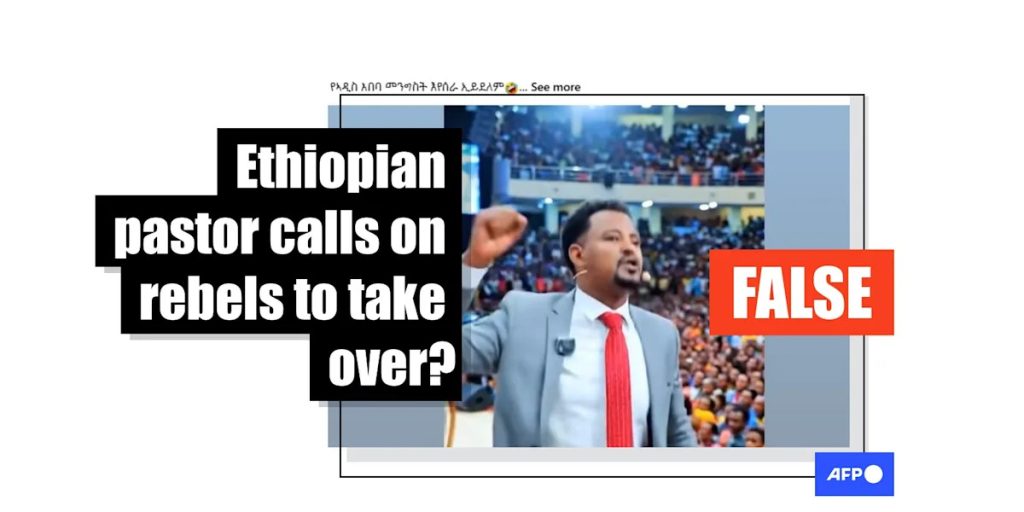

AI-Generated Video Falsely Shows Ethiopian Pastor Calling for Rebel Takeover

A fabricated video circulating on social media falsely depicts prominent Ethiopian pastor Tizitaw Samuel calling for Amhara rebels to overthrow the government, as tensions between federal forces and militia groups continue to escalate across the northern region.

The AI-generated clip, which has been shared more than 200 times, appears to show the Protestant pastor and former Ethiopian Orthodox Church singer addressing a large crowd, stating: “The Addis Ababa government is not able to work for the people. Today, we want the Amhara Fano to come and overtake the government.”

In the video, audience members respond with synchronized shouts of “Amen” while raising their hands in unison, and two individuals even appear to perform handstands. The Amharic text accompanying the post claims, “Watch what this religious leader is saying, ‘The Addis Ababa government is not working for the people’.”

The spread of this fabricated content comes at a particularly volatile moment in Ethiopia’s ongoing internal conflict. On October 10, 2025, the International Committee of the Red Cross (ICRC) reported significant casualties in Ethiopia’s Amhara region, where government forces have been engaged in combat with Fano militia since April 2023.

Despite the lifting of a state of emergency in June 2024, violent confrontations between federal troops and Fano forces have continued unabated, contributing to a deteriorating humanitarian situation in the region.

Multiple technical elements reveal the video’s artificial origin. Close examination shows numerous women in the audience with nearly identical facial features, while crowd movements appear unnaturally synchronized. Most tellingly, the supposed handstands performed by two men toward the end of the clip show physically impossible body contortions, a common anomaly in AI-generated video.

Further confirming the video’s artificial nature is a visible “Veo” watermark in the bottom-right corner. Veo, Google’s recently launched AI video generation tool, lets users create realistic-looking videos up to eight seconds long, which precisely matches the length of this fabricated clip.

Analysis with Google DeepMind’s SynthID Detector, a tool that checks for the imperceptible SynthID watermark embedded in content generated by Google’s AI models, confirmed that both the video footage and portions of the audio were generated with Veo.

Reactions to the video highlight Ethiopia’s deep societal divisions, with commenters expressing sharply polarized views. “This is crazy. How come all these people say Amen to such evil calls? When will our people become aware of evil missions?” wrote one user, while another commented: “Amen! Amen!! Fano will come soon.”

Tizitaw Samuel, who currently lives in the United States, has previously been the subject of misinformation. Two months ago, he held a large spiritual music conference in Addis Ababa that sparked social media debate over his adaptation of his earlier Orthodox songs to reflect Protestant beliefs.

There is no credible evidence that Tizitaw has ever publicly called for Fano rebels to overthrow the Ethiopian government.

The emergence of this sophisticated fake video underscores the growing challenge of AI-generated disinformation in conflict zones, where fabricated content can inflame tensions and contribute to real-world violence. As AI video generation tools become more accessible and their outputs more convincing, distinguishing fact from fiction in politically volatile regions becomes increasingly difficult.
