Advanced Transfer Learning Framework Enhances Fake News Detection in Limited Data Settings

A computational framework built on transfer learning has shown improvements in fake news detection, particularly when only limited training data is available. The approach combines conventional text preprocessing with word embedding methods and a novel multi-stage fine-tuning process for pre-trained language models.

At the core of the system is a text preprocessing pipeline that cleans and standardizes textual inputs. It applies tokenization, case normalization, stop-word removal, and stemming. Part-of-speech (POS) tagging also plays a central role: by labeling the grammatical role of each word, it lets the model pick up syntactic patterns that are often characteristic of deceptive content.
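
The four normalization steps can be sketched in plain Python. This is a minimal illustration, not the researchers' code: the stop-word list and the suffix-stripping `stem` function are hypothetical stand-ins (a real pipeline would use a curated stop-word list and a proper stemmer such as Porter's), and POS tagging is left out because it needs a trained tagger.

```python
import re

# Hypothetical, deliberately tiny stop-word list; real pipelines use a curated list.
STOP_WORDS = {"a", "an", "the", "is", "are", "was", "were", "of", "to", "and", "in", "on", "by"}

# Toy suffixes standing in for a real stemmer such as Porter's (assumption).
SUFFIXES = ("ingly", "edly", "ing", "ed", "ly", "es", "s")


def tokenize(text: str) -> list[str]:
    """Split text into word tokens, keeping apostrophe contractions like "Don't" whole."""
    return re.findall(r"[A-Za-z0-9]+(?:'[A-Za-z]+)?", text)


def stem(token: str) -> str:
    """Strip the first matching suffix, but only if at least 3 characters remain."""
    for suffix in SUFFIXES:
        if token.endswith(suffix) and len(token) - len(suffix) >= 3:
            return token[: -len(suffix)]
    return token


def preprocess(text: str) -> list[str]:
    tokens = tokenize(text)                              # 1. tokenization
    tokens = [t.lower() for t in tokens]                 # 2. case normalization
    tokens = [t for t in tokens if t not in STOP_WORDS]  # 3. stop-word removal
    return [stem(t) for t in tokens]                     # 4. stemming
```

For example, `preprocess("The Senator was SHOCKINGLY accused of lying")` returns `["senator", "shock", "accus", "lying"]`. Because taggers rely on sentence context and original word forms, POS tagging is usually run on the tokenized text before stop words are removed and words are stemmed.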

“Fake news frequently exhibits distinctive syntactic patterns, such as exaggerated adjectives or passive constructions, which POS tagging helps capture,” explained researchers familiar with the work. “These steps are especially essential when working with small datasets, where every linguistic cue becomes valuable.”
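
To make the quoted cues concrete, the sketch below turns an already tagged sentence into two features: the share of adjectives and a count of likely passive constructions. It assumes Penn Treebank tags (as produced, for example, by NLTK's `pos_tag`); the feature names and the "form of *be* followed by a past participle" heuristic are illustrative assumptions, not the framework's documented features.

```python
# Penn Treebank adjective tags: base, comparative, superlative.
ADJECTIVE_TAGS = {"JJ", "JJR", "JJS"}
# Forms of "to be" that can introduce a passive construction.
BE_FORMS = {"am", "is", "are", "was", "were", "be", "been", "being"}


def pos_features(tagged: list[tuple[str, str]]) -> dict[str, float]:
    """Compute illustrative syntactic features from (word, tag) pairs."""
    n = len(tagged)
    if n == 0:
        return {"adjective_ratio": 0.0, "passive_count": 0.0}

    adjectives = sum(1 for _, tag in tagged if tag in ADJECTIVE_TAGS)

    passive = 0
    # Heuristic: a form of "be" immediately followed by a past participle (VBN).
    for (word, _), (_, next_tag) in zip(tagged, tagged[1:]):
        if word.lower() in BE_FORMS and next_tag == "VBN":
            passive += 1

    return {"adjective_ratio": adjectives / n, "passive_count": float(passive)}


sentence = [("Outrageous", "JJ"), ("claims", "NNS"), ("were", "VBD"), ("denied", "VBN")]
features = pos_features(sentence)  # {"adjective_ratio": 0.25, "passive_count": 1.0}
```

The passive heuristic misses constructions with intervening words, such as "was quickly denied", so it should be read as a rough signal rather than a parser-grade detector.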

The framework uses several word representation techniques to turn text into numerical vectors that algorithms can process. One-hot encoding maps each word to a sparse vector with a single nonzero entry, while Word2Vec's two architectures, Continuous Bag of Words (CBOW) and Skip-Gram, learn dense vectors from the contexts in which words co-occur.
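
The difference between the two representations is easiest to see in code. The sketch below builds one-hot vectors and generates the training examples that Word2Vec learns from: Skip-Gram predicts each context word from the center word, while CBOW predicts the center word from its context. It stops short of training; Word2Vec then fits dense vectors with a shallow network, typically using negative sampling or hierarchical softmax. Function names and the window size are illustrative choices.

```python
def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an index in order of first appearance."""
    vocab: dict[str, int] = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def one_hot(token: str, vocab: dict[str, int]) -> list[int]:
    """Sparse representation: all zeros except a 1 at the token's index."""
    vec = [0] * len(vocab)
    vec[vocab[token]] = 1
    return vec


def skip_gram_pairs(tokens: list[str], window: int) -> list[tuple[str, str]]:
    """(center, context) pairs: Skip-Gram predicts each context word from the center."""
    pairs = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_examples(tokens: list[str], window: int) -> list[tuple[list[str], str]]:
    """(context, center) examples: CBOW predicts the center word from its context."""
    examples = []
    for i, center in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        context = [tokens[j] for j in range(lo, hi) if j != i]
        examples.append((context, center))
    return examples


tokens = ["fake", "news", "spreads", "fast"]
vocab = build_vocab(tokens)
print(one_hot("spreads", vocab))
print(skip_gram_pairs(tokens, window=1))
print(cbow_examples(tokens, window=1))
```

A one-hot vector grows with the vocabulary and says nothing about similarity, whereas the dense vectors learned from these pairs place words that share contexts close together.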

“Different embedding approaches capture distinct linguistic properties,” noted an expert in natural language processing. “Word2Vec focuses on semantic similarity based on co-occurrence patterns, while other methods like FastText improve generalization by incorporating subword information.”
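
The subword idea behind FastText can be illustrated by enumerating a word's character n-grams. This sketch follows FastText's published scheme of wrapping the word in `<` and `>` boundary markers and taking n-grams of length 3 to 6. The actual library additionally keeps a vector for the whole word, hashes n-grams into a fixed number of buckets, and represents a word by combining the vectors of its n-grams.

```python
def char_ngrams(word: str, minn: int = 3, maxn: int = 6) -> list[str]:
    """Character n-grams of `word`, wrapped in FastText-style boundary markers."""
    wrapped = f"<{word}>"
    grams = []
    for n in range(minn, maxn + 1):
        # Slide a window of width n across the wrapped word.
        for i in range(len(wrapped) - n + 1):
            grams.append(wrapped[i : i + n])
    return grams


print(char_ngrams("where", minn=3, maxn=3))  # ['<wh', 'whe', 'her', 'ere', 're>']
```

Because an unseen or run-together token such as "fakenews" still shares n-grams like "fak" and "new" with known words, it can receive a meaningful vector instead of none.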

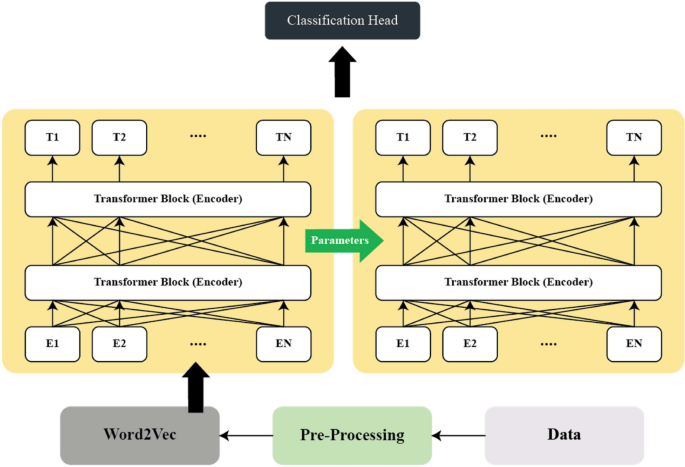

The most innovative aspect of the framework involves a multi-stage transfer learning strategy using RoBERTa (Robustly Optimized BERT Pretraining Approach). RoBERTa, a transformer-based language model, undergoes an initial pre-training phase on extensive text corpora, allowing it to capture nuanced language patterns across diverse contexts.
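
The article does not describe RoBERTa's pre-training objective. For context, RoBERTa is pre-trained with masked language modeling and dynamic masking: roughly 15% of tokens are selected for prediction, of which 80% are replaced with a mask token, 10% with a random token, and 10% left unchanged, with a fresh mask drawn each time a sequence is seen. The sketch below illustrates that scheme on token IDs; `mask_id` and `vocab_size` are placeholders, and a real implementation would also exclude special tokens such as `<s>` and `</s>`.

```python
import random

# Label for positions that should not contribute to the loss.
IGNORE_INDEX = -100


def mask_tokens(
    token_ids: list[int],
    mask_id: int,
    vocab_size: int,
    rng: random.Random,
    mask_prob: float = 0.15,
) -> tuple[list[int], list[int]]:
    """Return (model inputs, prediction labels) for one masked-LM example."""
    inputs = list(token_ids)
    labels = [IGNORE_INDEX] * len(token_ids)
    for i, tok in enumerate(token_ids):
        if rng.random() >= mask_prob:
            continue  # not selected: no prediction target at this position
        labels[i] = tok  # the model must recover the original token here
        roll = rng.random()
        if roll < 0.8:
            inputs[i] = mask_id                    # 80%: replace with the mask token
        elif roll < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


# Dynamic masking: calling this again on the same sequence draws a new mask.
rng = random.Random(0)
inputs, labels = mask_tokens(list(range(10, 30)), mask_id=99, vocab_size=100, rng=rng)
```

`-100` is used for unselected positions because it is the default `ignore_index` of PyTorch's `CrossEntropyLoss`.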

The researchers implemented a two-stage fine-tuning process specifically designed for fake news detection in limited-data scenarios. Initially, the model undergoes fine-tuning on a related large corpus to learn domain-specific features. Subsequently, a second fine-tuning phase targets smaller datasets like PolitiFact and GossipCop, with carefully controlled learning rates and selective layer freezing to prevent overfitting.
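
The freezing side of the two-stage schedule can be expressed as a small plan. In this sketch, which is not the researchers' configuration, each stage records how many of the 12 encoder layers of `roberta-base` stay frozen; the stage names and the choice of freezing 8 layers in the second stage are hypothetical, since the article gives no layer counts.

```python
from dataclasses import dataclass

NUM_LAYERS = 12  # roberta-base has 12 transformer encoder layers


@dataclass(frozen=True)
class Stage:
    name: str
    dataset: str
    frozen_layers: int  # the lowest `frozen_layers` encoder layers receive no updates


def trainable_layers(stage: Stage, num_layers: int = NUM_LAYERS) -> list[int]:
    """Indices of the encoder layers that are updated during this stage."""
    if not 0 <= stage.frozen_layers <= num_layers:
        raise ValueError("frozen_layers must be between 0 and num_layers")
    return list(range(stage.frozen_layers, num_layers))


# Hypothetical schedule: the article does not specify how many layers were frozen.
STAGES = [
    Stage("stage 1: related large corpus", "large related corpus", frozen_layers=0),
    Stage("stage 2: small target set", "PolitiFact or GossipCop", frozen_layers=8),
]

for stage in STAGES:
    print(stage.name, "->", trainable_layers(stage))
```

In a PyTorch implementation, freezing a layer amounts to setting `requires_grad = False` on its parameters so the optimizer leaves them untouched; the classification head stays trainable in every stage. Freezing the lower layers in the small-data stage limits how far the general-purpose representations can drift toward a few hundred or thousand examples.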

Internally, RoBERTa processes text through attention. The model maps input tokens to vector embeddings and adds learned positional embeddings so that word order is preserved. Multi-head self-attention then lets the model weigh the relationship between every pair of tokens, with each attention head free to focus on different aspects of the text.
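
The attention computation described here, `softmax(Q Kᵀ / sqrt(d_k)) V` for each head, can be sketched with plain Python lists. This is a toy version under stated assumptions: it uses tiny hand-written weight matrices and omits the biases, attention masking, residual connections, layer normalization, and feed-forward sublayers of a real RoBERTa layer.

```python
import math

Matrix = list[list[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Matrix product of a (n x k) and b (k x m)."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(m: Matrix) -> Matrix:
    return [list(col) for col in zip(*m)]


def softmax(row: list[float]) -> list[float]:
    top = max(row)  # subtract the max for numerical stability
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def embed(token_ids: list[int], token_table: Matrix, position_table: Matrix) -> Matrix:
    """Token embedding plus a learned embedding for each position."""
    return [
        [t + p for t, p in zip(token_table[tok], position_table[pos])]
        for pos, tok in enumerate(token_ids)
    ]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> tuple[Matrix, Matrix]:
    """softmax(Q K^T / sqrt(d_k)) V; also returns the attention weights."""
    d_k = len(k[0])
    scores = matmul(q, transpose(k))
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights


def multi_head_attention(
    x: Matrix, heads: list[tuple[Matrix, Matrix, Matrix]], w_o: Matrix
) -> Matrix:
    """Each head projects x with its own (W_q, W_k, W_v); outputs are concatenated, then projected by W_o."""
    head_outputs = []
    for w_q, w_k, w_v in heads:
        out, _ = scaled_dot_product_attention(matmul(x, w_q), matmul(x, w_k), matmul(x, w_v))
        head_outputs.append(out)
    concat = [[val for out in head_outputs for val in out[i]] for i in range(len(x))]
    return matmul(concat, w_o)
```

Each row of the attention weights sums to 1, so every output vector is a weighted average of the value vectors; giving each head its own projections is what allows different heads to attend to different relationships.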

During fine-tuning, the researchers used the Adam optimizer with a learning rate of 0.001 and implemented early stopping based on validation loss to prevent overfitting. They also experimented with freezing initial transformer layers to evaluate impacts on model generalization.
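
Both training controls mentioned here can be stated compactly in code. The sketch below implements the standard Adam update with the learning rate of 0.001 reported in the article and the commonly used defaults β1 = 0.9, β2 = 0.999, ε = 1e-8 (also PyTorch's defaults), plus a patience-based early-stopping check on validation loss. The patience value and class names are illustrative assumptions; the article does not say how many non-improving epochs triggered stopping.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Adam:
    """Adam update rule for a flat list of parameters."""
    lr: float = 0.001
    beta1: float = 0.9
    beta2: float = 0.999
    eps: float = 1e-8
    t: int = 0
    m: list[float] = field(default_factory=list)
    v: list[float] = field(default_factory=list)

    def step(self, params: list[float], grads: list[float]) -> list[float]:
        if not self.m:
            self.m = [0.0] * len(params)
            self.v = [0.0] * len(params)
        self.t += 1
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.m[i] = self.beta1 * self.m[i] + (1 - self.beta1) * g      # first moment
            self.v[i] = self.beta2 * self.v[i] + (1 - self.beta2) * g * g  # second moment
            m_hat = self.m[i] / (1 - self.beta1 ** self.t)                 # bias correction
            v_hat = self.v[i] / (1 - self.beta2 ** self.t)
            updated.append(p - self.lr * m_hat / (math.sqrt(v_hat) + self.eps))
        return updated


class EarlyStopping:
    """Stop once validation loss fails to improve for `patience` consecutive epochs."""

    def __init__(self, patience: int = 2, min_delta: float = 0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best = math.inf
        self.bad_epochs = 0

    def should_stop(self, val_loss: float) -> bool:
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

A training loop would call `should_stop` once per epoch after evaluating on the validation set, and typically restore the checkpoint with the lowest validation loss when it returns `True`.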

Market analysts suggest this research has significant implications for social media platforms and news organizations struggling to combat misinformation. The ability to detect fake news with higher accuracy using smaller datasets could lead to more efficient content moderation systems and improved information integrity.

“The combination of sophisticated preprocessing, multiple embedding techniques, and multi-stage transfer learning creates a robust framework that outperforms conventional approaches,” concluded one industry observer. “This represents a meaningful step forward in addressing one of the most pressing challenges in our information ecosystem.”

As misinformation continues to proliferate online, these detection methods offer a promising way to identify deceptive content before it spreads widely, potentially reducing its harmful societal impact.
