
Convolutional Neural Networks Revolutionize Text Processing in Fake News Detection

Convolutional Neural Networks (CNNs) have established themselves as powerful tools in image processing, and their application to natural language processing is opening new frontiers in text analysis. CNNs have proven effective in text classification, sentiment analysis, and topic recognition because they capture local features, such as short runs of words, within text.

Applying CNNs to text requires some architectural adaptations. The input is typically a two-dimensional matrix built from word (or character) embeddings, with each row holding the embedding vector of one token. Convolutional kernels span the full width of this matrix and slide along the token dimension, which lets CNNs recognize patterns in word sequences much as they detect local features in images.
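To make the matrix layout concrete, here is a minimal pure-Python sketch. The vocabulary, the 4-dimensional vectors, and the function name `sentence_matrix` are invented for illustration. Unknown words and padding both map to a zero vector, which is one common convention rather than a requirement.

```python
# Toy 4-dimensional embeddings, invented for illustration only.
# Real systems learn these or load pretrained vectors such as word2vec or GloVe.
DIM = 4
EMBEDDINGS = {
    "doctors": [0.1, 0.7, 0.2, 0.0],
    "shocked": [0.6, 0.0, 0.9, 0.2],
    "miracle": [0.9, 0.1, 0.0, 0.3],
    "cure":    [0.8, 0.2, 0.1, 0.4],
}
PAD = [0.0] * DIM  # used for padding and, in this sketch, for unknown words


def sentence_matrix(tokens, embeddings=EMBEDDINGS, max_len=None):
    """Return one embedding row per token, i.e. an (n_tokens x DIM) matrix."""
    rows = [list(embeddings.get(tok, PAD)) for tok in tokens]
    if max_len is not None:
        # Truncate long inputs and zero-pad short ones so that every
        # sentence in a batch yields a matrix with the same number of rows.
        rows = rows[:max_len]
        rows += [list(PAD) for _ in range(max_len - len(rows))]
    return rows


m = sentence_matrix(["doctors", "shocked", "by", "miracle", "cure"])
print(len(m), len(m[0]))  # 5 4: five token rows, four embedding dimensions
```

Fixing the number of rows with `max_len` is what lets sentences of different lengths be stacked into one batch.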

“The power of CNNs in text classification comes from their ability to learn local features that influence meaning,” explains Dr. Sarah Chen, a computational linguistics researcher at MIT. “They can recognize key phrases or sentence structures that determine sentiment orientation, and then use these features for classification purposes.”

What makes CNNs particularly effective for text processing is their ability to capture dependencies over spans of different lengths. A kernel that covers k consecutive tokens acts as a detector for k-word phrases, so running kernels of several widths in parallel captures patterns ranging from single words to multi-word phrases. Stacking convolutional layers and pooling over the whole sequence extends this to sentence-level features.
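The effect of kernel width can be sketched in plain Python. This minimal example assumes the common design in which each kernel spans the full embedding width, slides one token at a time, passes through a ReLU, and is then max-pooled over the whole sentence ("max-over-time" pooling). All function names are hypothetical, and the kernels are random rather than trained, so the numbers only illustrate the shapes involved.

```python
import random


def conv1d_text(matrix, kernel, bias=0.0):
    """Slide a (width x dim) kernel down the sentence matrix one token at a time.

    Each output value scores one window of `width` consecutive tokens,
    so a sentence of n tokens yields n - width + 1 values.
    """
    width = len(kernel)
    feature_map = []
    for start in range(len(matrix) - width + 1):
        s = bias
        for offset in range(width):
            row, krow = matrix[start + offset], kernel[offset]
            s += sum(a * b for a, b in zip(row, krow))
        feature_map.append(max(0.0, s))  # ReLU nonlinearity
    return feature_map


def max_over_time(feature_map):
    """Keep the strongest response anywhere in the sentence (0.0 if none)."""
    return max(feature_map) if feature_map else 0.0


def multi_width_features(matrix, kernels_by_width):
    """One pooled feature per kernel; widths 2, 3, 4 act as bigram, trigram, 4-gram detectors."""
    features = []
    for width in sorted(kernels_by_width):
        for kernel in kernels_by_width[width]:
            features.append(max_over_time(conv1d_text(matrix, kernel)))
    return features


# Usage with random (untrained) kernels: two kernels for each width.
random.seed(0)
dim = 4
sentence = [[random.uniform(-1, 1) for _ in range(dim)] for _ in range(6)]  # 6 tokens
kernels = {
    w: [[[random.uniform(-1, 1) for _ in range(dim)] for _ in range(w)] for _ in range(2)]
    for w in (2, 3, 4)
}
print(multi_width_features(sentence, kernels))  # six non-negative features
```

Max-over-time pooling turns a variable-length sentence into a fixed-length vector (one value per kernel) that a classifier layer can consume, and it lets a kernel respond to a key phrase wherever that phrase appears.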

This versatility has led to widespread adoption in practical applications including news classification, sentiment analysis of user comments, and social media monitoring. CNN-based models let organizations process large volumes of text automatically, which speeds up analysis and can support better-informed decisions.

Residual Attention Networks: A Powerful Hybrid Approach

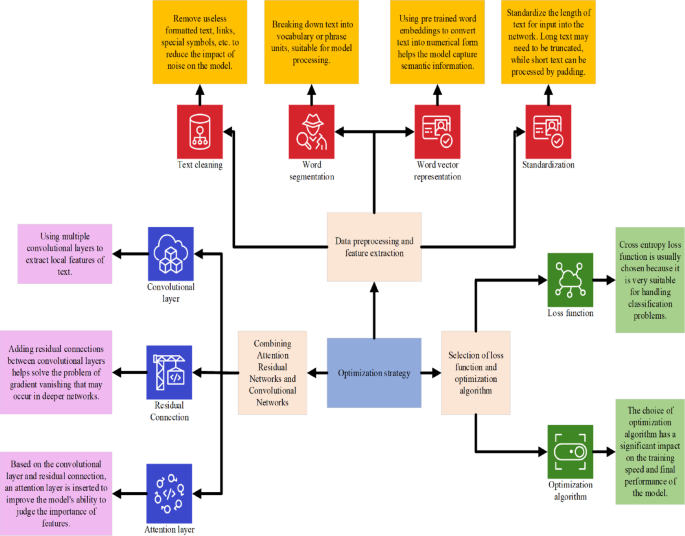

One significant advancement in the field comes from combining CNNs with residual attention networks, which pair ResNet’s robust feature propagation with attention mechanisms that filter information. The combined structure improves both training efficiency and accuracy.

The hybrid architecture has several components working in tandem. In each residual unit, attention modules sit between convolutional layers and dynamically adjust feature weights, so that before information moves on to the next layer, key features are emphasized while noise and redundant features are suppressed.
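A single residual attention unit can be sketched as follows. This is a simplified, assumed formulation, not the specific architecture the article describes: the convolutional layers are replaced by one linear map with a ReLU, the attention module is a sigmoid mask computed from those features, and the unit returns `x + mask * F(x)`. The names `residual_attention_unit` and `matvec` are hypothetical.

```python
import math


def matvec(W, v):
    """Multiply matrix W (list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def residual_attention_unit(x, W_conv, W_att):
    """y = x + M * F(x), computed element by element.

    F stands in for the unit's convolutional layers (here a linear map plus ReLU).
    M is a sigmoid attention mask in (0, 1) computed from F(x): values near 1 keep
    a feature, values near 0 suppress it. The skip connection adds x back unchanged.
    Both weight matrices are assumed square (dim x dim) so the shapes line up.
    """
    f = [max(0.0, a) for a in matvec(W_conv, x)]
    mask = [1.0 / (1.0 + math.exp(-a)) for a in matvec(W_att, f)]
    return [xi + mi * fi for xi, mi, fi in zip(x, mask, f)]


identity = [[1.0, 0.0], [0.0, 1.0]]
zeros = [[0.0, 0.0], [0.0, 0.0]]
print(residual_attention_unit([1.0, -2.0], identity, zeros))  # [1.5, -2.0]
```

With all-zero attention weights the mask is 0.5 everywhere, so half of each transformed feature is added to the input; strongly negative attention weights push the mask toward 0 and leave only the skip connection.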

“The attention mechanism acts like a spotlight, highlighting what matters most in the data,” notes Dr. James Rodriguez, AI researcher at Stanford University. “When combined with residual connections, we get the best of both worlds – the ability to retain complete information while focusing on the most relevant features.”

Residual connections serve as stable information channels that keep features from being lost in deep networks. Each residual unit adds its input back to the output of its transformation, so original features pass directly to deeper layers through an identity shortcut that bypasses the nonlinear layers. Because gradients flow back through the same shortcut during training, residual connections mitigate the vanishing gradient problem and keep learning stable as the network deepens.
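The gradient argument can be made precise with a short derivation. It assumes identity shortcuts with nothing applied after the addition, writes the residual branch of layer l as F(x_l, W_l) and the training loss as E, and treats features as scalars for readability (for vector features, the 1 becomes an identity matrix).

```latex
\begin{aligned}
x_{l+1} &= x_l + \mathcal{F}(x_l, W_l) \\
x_L &= x_l + \sum_{i=l}^{L-1} \mathcal{F}(x_i, W_i) \\
\frac{\partial \mathcal{E}}{\partial x_l}
  &= \frac{\partial \mathcal{E}}{\partial x_L}\,\frac{\partial x_L}{\partial x_l}
   = \frac{\partial \mathcal{E}}{\partial x_L}
     \left(1 + \frac{\partial}{\partial x_l}\sum_{i=l}^{L-1} \mathcal{F}(x_i, W_i)\right)
\end{aligned}
```

Because of the constant 1, part of the gradient reaches layer l unchanged no matter how many layers lie above it. In a plain stack without shortcuts, the same gradient would be a product of per-layer derivatives, which can shrink toward zero as depth grows.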

Together, attention mechanisms and residual connections improve the model’s understanding of complex data, enhancing both prediction performance and generalization in real-world scenarios.

Fighting Fake News with Advanced Neural Networks

Building on these foundations, researchers have developed fake news detection models that process multimodal data: text, images, and videos.

The approach begins with data preprocessing. Text is cleaned to remove noise such as extra spaces and special characters and is then tokenized; stopwords are removed to highlight key information, and the remaining words are stemmed to their base forms.
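The preprocessing steps can be sketched in a few lines of standard-library Python. This is an illustrative stand-in, not the researchers’ pipeline: it assumes English text, uses a small hand-written stopword list, and replaces a real stemmer (such as Porter’s) with crude suffix stripping. The function names are hypothetical. Chinese text, as in the Weibo dataset, would need a word segmenter instead of whitespace tokenization.

```python
import re

# Small illustrative stopword list; real pipelines use a fuller one,
# such as the English list distributed with NLTK.
STOPWORDS = {"a", "an", "the", "is", "are", "was", "were", "be", "of", "to",
             "in", "on", "for", "and", "or", "by", "that", "this", "it"}

# Crude suffix stripping, a stand-in for a real stemmer such as Porter's.
SUFFIXES = ("ing", "ed", "s")


def clean(text):
    text = text.lower()
    text = re.sub(r"[^a-z0-9\s]", " ", text)  # replace special characters with spaces
    return re.sub(r"\s+", " ", text).strip()  # collapse runs of whitespace


def stem(word):
    for suffix in SUFFIXES:
        # Only strip if at least three characters remain, to avoid mangling short words.
        if word.endswith(suffix) and len(word) - len(suffix) >= 3:
            return word[: -len(suffix)]
    return word


def preprocess(text):
    tokens = clean(text).split()  # whitespace tokenization
    return [stem(t) for t in tokens if t not in STOPWORDS]


print(preprocess("BREAKING!!  Officials are covering up the cures for diseases..."))
# ['break', 'official', 'cover', 'up', 'cure', 'disease']
```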

Feature extraction follows, using techniques such as word vector representations, part-of-speech tagging, and named entity recognition to capture semantic and syntactic structure. For the other modalities, the system extracts features such as color and texture from images and motion information from videos.
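The article does not say how its color, texture, and motion features are computed, and the full model described later in the article relies on learned ResNet and SlowFast features. As a rough illustration only, here are two simple handcrafted stand-ins: a joint RGB color histogram for an image and an average frame-difference "motion energy" for a video. Both function names are hypothetical, and texture is not covered.

```python
def color_histogram(pixels, bins=4):
    """Joint RGB histogram: quantize each channel into `bins` levels and count colors.

    pixels: iterable of (r, g, b) tuples with values 0-255.
    Returns bins**3 frequencies that sum to 1 (all zeros for an empty image).
    """
    hist = [0.0] * (bins ** 3)
    count = 0
    for r, g, b in pixels:
        qr, qg, qb = r * bins // 256, g * bins // 256, b * bins // 256
        hist[(qr * bins + qg) * bins + qb] += 1
        count += 1
    return [h / count for h in hist] if count else hist


def motion_energy(frames):
    """Average per-pixel absolute change between consecutive grayscale frames.

    frames: list of equally sized 2-D lists (height x width) of intensities.
    Returns 0.0 when there are fewer than two frames.
    """
    if len(frames) < 2:
        return 0.0
    per_step = []
    for prev, cur in zip(frames, frames[1:]):
        total = sum(abs(a - b) for row_p, row_c in zip(prev, cur) for a, b in zip(row_p, row_c))
        n_pixels = sum(len(row) for row in cur)
        per_step.append(total / n_pixels)
    return sum(per_step) / len(per_step)


print(color_histogram([(0, 0, 0), (255, 255, 255)], bins=2))
# [0.5, 0.0, 0.0, 0.0, 0.0, 0.0, 0.0, 0.5]
still, bright = [[0, 0], [0, 0]], [[10, 10], [10, 10]]
print(motion_energy([still, bright, bright]))  # 5.0
```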

“What makes our approach unique is how it combines different data types,” explains Dr. Lisa Zhang, lead researcher on the project. “By processing text, images, and video simultaneously, we can detect inconsistencies across modalities that might indicate fabricated content.”

The model’s architecture uses BERT for text, ResNet for images, and SlowFast networks for video. Before fusion, dimensional normalization and semantic projection map the features of each modality into a shared space. A weighted attention mechanism then computes similarities among the text, image, and video features and uses them to assign fusion weights dynamically.
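The article does not give the fusion equations, so the following is only one plausible reading of similarity-based fusion weights, with invented names and toy numbers. Each modality’s encoder output is linearly projected into a shared space and L2-normalized (one interpretation of "dimensional normalization and semantic projection"), each modality is scored by its mean cosine similarity to the others, and a softmax turns those scores into fusion weights.

```python
import math


def project(W, v):
    """Linear map into the shared space; W has shape (d_shared x d_modality)."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def l2_normalize(v):
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v] if norm > 0 else list(v)


def cosine(u, v):
    # Inputs are unit length, so the dot product equals the cosine similarity.
    return sum(a * b for a, b in zip(u, v))


def softmax(scores, temperature=1.0):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp((s - m) / temperature) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def similarity_weighted_fusion(features, projections, temperature=1.0):
    """features, projections: dicts keyed by modality, e.g. 'text', 'image', 'video'.

    Needs at least two modalities. Each modality is scored by its mean cosine
    similarity to the others, so a modality that disagrees with the rest gets
    a smaller fusion weight. Returns (weights by modality, fused vector).
    """
    names = sorted(features)
    z = {n: l2_normalize(project(projections[n], features[n])) for n in names}
    scores = [sum(cosine(z[n], z[o]) for o in names if o != n) / (len(names) - 1)
              for n in names]
    weights = softmax(scores, temperature)
    dim = len(z[names[0]])
    fused = [sum(w * z[n][i] for w, n in zip(weights, names)) for i in range(dim)]
    return dict(zip(names, weights)), fused


# Toy 3-d "encoder outputs" projected into a 2-d shared space (all values invented).
feats = {"text": [1.0, 0.0, 0.0], "image": [0.9, 0.1, 0.0], "video": [0.0, 0.0, 1.0]}
W = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]  # same toy projection reused for every modality
weights, fused = similarity_weighted_fusion(feats, {m: W for m in feats})
print(weights)  # video, which disagrees with text and image, gets the smallest weight
```

The raw pairwise similarities are also a natural place to look for the cross-modal inconsistencies Dr. Zhang describes, though the article does not say whether the model uses them that way.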

Extensive testing across multiple datasets, including Liar, FakeNewsNet, and Weibo, demonstrates the model’s effectiveness across different languages and cultural contexts. The system consistently outperforms standalone models such as BERT, RoBERTa, XLNet, ERNIE, and GPT-3.5 in accuracy, precision, recall, and F1 score.
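For readers less familiar with the four metrics, here is how they are computed for a binary fake/real task. The labels below are invented, and `binary_metrics` is a hypothetical helper. Liar originally has six truthfulness labels, so results on it are often reported either after binarizing the labels or with per-class scores averaged (macro or weighted); the article does not say which convention was used.

```python
def binary_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall, and F1 for a binary task.

    `positive` is the label treated as the positive class (here: fake).
    Precision, recall, and F1 fall back to 0.0 when their denominators are zero.
    """
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty label lists of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 1, 1, 0, 0, 0, 0, 1]  # 1 = fake, 0 = real (invented labels)
y_pred = [1, 0, 1, 0, 1, 0, 0, 1]
print(binary_metrics(y_true, y_pred))
# {'accuracy': 0.75, 'precision': 0.75, 'recall': 0.75, 'f1': 0.75}
```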

As fake news continues to challenge information integrity globally, these advanced neural network approaches represent a significant step forward in automated detection capabilities, offering promising tools for media organizations, social platforms, and fact-checkers worldwide.
