
New Framework for Detecting Arabic Fake News Shows Promise

A team of researchers has developed a systematic framework for detecting Arabic fake news. It combines multiple deep learning models and word embedding techniques, and the researchers report high accuracy in identifying false information.

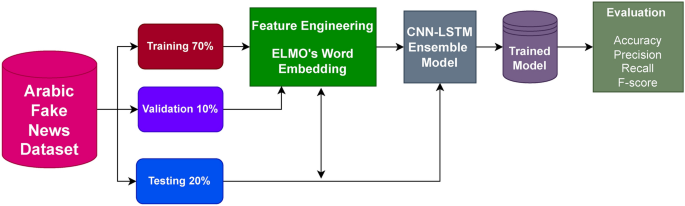

The methodology first implements and evaluates a range of deep learning models, including Convolutional Neural Networks (CNN), Long Short-Term Memory networks (LSTM), ConvLSTM, EfficientNetB4, Inception, Xception, and ResNet. In this first stage, every model uses ELMo embeddings to build contextual representations of the Arabic text.

The researchers then took the four best-performing models and re-evaluated each with several word embedding techniques: ELMo, GloVe, BERT, FastText, Subword, and FastText Subword. This comparison let them determine which embedding method works best for Arabic fake news detection.
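The two-stage selection described above can be sketched as a small search routine. This is a hypothetical illustration, not the authors' code: `evaluate` stands in for whatever training-and-scoring run the study used (for example, validation F1), and the function and parameter names are invented here.

```python
from typing import Callable, Dict, List, Sequence, Tuple


def two_stage_selection(
    models: Sequence[str],
    embeddings: Sequence[str],
    evaluate: Callable[[str, str], float],
    k: int = 4,
    base_embedding: str = "ELMo",
) -> Tuple[List[str], Tuple[str, str]]:
    """Hypothetical sketch of a two-stage model/embedding search.

    Stage 1 scores every model with a single base embedding (ELMo, as in the
    article). Stage 2 re-scores only the top-k models with every embedding
    and returns the best (model, embedding) pair.
    """
    # Stage 1: one score per model, all using the base embedding.
    stage1: Dict[str, float] = {m: evaluate(m, base_embedding) for m in models}
    top_k = sorted(models, key=lambda m: stage1[m], reverse=True)[:k]

    # Stage 2: every surviving model paired with every embedding.
    stage2: Dict[Tuple[str, str], float] = {
        (m, e): evaluate(m, e) for m in top_k for e in embeddings
    }
    best_pair = max(stage2, key=lambda pair: stage2[pair])
    return top_k, best_pair
```

Restricting the embedding sweep to the top four models keeps the number of training runs at roughly 7 + 4 × 6 instead of 7 × 6, at the cost of possibly missing a model that ranks poorly with ELMo but well with another embedding.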

The study uses the Arabic Fake News Dataset (AFND), a publicly available Kaggle collection of news articles from 134 websites. To protect source identities, the dataset files replace URLs with anonymized labels such as “source_1” and “source_2.” Each source is labeled by credibility as “credible,” “not credible,” or “undecided.”
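Because AFND labels sources rather than individual articles, one straightforward way to train a classifier on it is to let each article inherit its source's label. The sketch below assumes the labels have already been loaded into a Python dict; the function name, the data layout, and the `keep_undecided` flag are hypothetical, and the article does not say how the study handled “undecided” sources.

```python
from typing import Dict, List, Tuple


def label_articles(
    source_labels: Dict[str, str],
    articles: List[Tuple[str, str]],
    keep_undecided: bool = False,
) -> List[Tuple[str, str]]:
    """Give each article the credibility label of its (anonymized) source.

    source_labels maps a source ID such as "source_1" to "credible",
    "not credible", or "undecided". articles holds (source_id, text) pairs.
    The in-memory layout is an assumption, not the verified AFND file format.
    """
    labeled: List[Tuple[str, str]] = []
    for source_id, text in articles:
        label = source_labels[source_id]
        if label == "undecided" and not keep_undecided:
            continue  # hypothetical choice: drop articles from undecided sources
        labeled.append((text, label))
    return labeled


# Toy data invented for illustration.
sources = {"source_1": "credible", "source_2": "not credible", "source_3": "undecided"}
articles = [("source_1", "article A"), ("source_2", "article B"), ("source_3", "article C")]
print(label_articles(sources, articles))
# [('article A', 'credible'), ('article B', 'not credible')]
```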

Word embedding techniques, which map words to dense numerical vectors, play a central role in how the framework represents Arabic text. Traditional encodings such as one-hot or bag-of-words vectors treat words as isolated symbols; embeddings instead place related words near one another in vector space, so the system can capture context and meaning.
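To make “preserving semantic relationships” concrete: closeness between embeddings is usually measured with cosine similarity. In the sketch below, the three-dimensional vectors are invented for illustration (real embeddings have hundreds of dimensions and are learned from data), and the one-hot comparison shows why isolated-symbol encodings cannot express relatedness.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction, 0.0 orthogonal."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


# Invented dense vectors: related words point in similar directions.
embeddings = {
    "news": [0.9, 0.1, 0.3],
    "report": [0.8, 0.2, 0.35],
    "banana": [0.1, 0.9, 0.0],
}
print(round(cosine_similarity(embeddings["news"], embeddings["report"]), 2))  # 0.99
print(round(cosine_similarity(embeddings["news"], embeddings["banana"]), 2))  # 0.21

# One-hot vectors: every pair of distinct words is orthogonal, however related.
print(cosine_similarity([1, 0, 0], [0, 1, 0]))  # 0.0
```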

The study employed several embedding methods, each with distinct strengths. GloVe learns word vectors from global word co-occurrence statistics gathered over large text corpora. BERT represents a word according to its surrounding sentence, using a bidirectional Transformer encoder that attends to context on both the left and the right. FastText enriches word vectors with subword (character n-gram) information, which lets it build vectors even for out-of-vocabulary words; this is particularly valuable for morphologically rich languages such as Arabic.
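FastText's subword idea can be illustrated by listing the character n-grams it associates with a word. The sketch follows FastText's published scheme of wrapping each word in `<` and `>` and taking n-grams of length 3 to 6, but it omits the hashing and training steps of the real library. The Arabic example pairs كتاب (“book”) with كتابة (“writing”), which share a root.

```python
from typing import List


def char_ngrams(word: str, min_n: int = 3, max_n: int = 6) -> List[str]:
    """Character n-grams in the style of FastText (simplified, no hashing).

    The word is wrapped in '<' and '>' boundary markers so that prefixes and
    suffixes get distinct n-grams; the full wrapped word is kept as well.
    """
    wrapped = f"<{word}>"
    grams = []
    for n in range(min_n, max_n + 1):
        for i in range(len(wrapped) - n + 1):
            grams.append(wrapped[i:i + n])
    if wrapped not in grams:
        grams.append(wrapped)  # whole-word token, as FastText also keeps
    return grams


shared = set(char_ngrams("كتاب")) & set(char_ngrams("كتابة"))
print(len(shared))  # 6
```

Because FastText builds a word's vector from the vectors of its n-grams, a form such as كتابة could still receive a sensible vector from the n-grams it shares with كتاب even if it never appeared in the training vocabulary.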

ELMo, another contextual embedding method, runs a bidirectional LSTM language model over the entire sentence and combines the outputs of its layers, so each word's vector reflects several levels of context. This makes it useful for tasks such as sentiment analysis and machine translation.
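In the original ELMo design, the vector passed to a downstream model is a learned, task-specific weighted sum of the network's layer outputs: for token k, ELMo_k = γ · Σ_j s_j · h_{k,j}, where the weights s_j are softmax-normalized and γ is a scalar. The sketch below computes that combination for one token with toy two-dimensional layer outputs; the numbers are invented, and the article does not say how the study configured ELMo.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def elmo_scalar_mix(layers: List[List[float]], layer_scores: List[float], gamma: float) -> List[float]:
    """Combine one token's per-layer vectors into a single ELMo vector.

    layers[j] is the token's output from layer j (layer 0 is the
    context-independent token layer; higher layers come from the biLSTM).
    layer_scores are unnormalized weights, turned into s_j by softmax.
    """
    weights = softmax(layer_scores)
    dim = len(layers[0])
    return [
        gamma * sum(w * layer[d] for w, layer in zip(weights, layers))
        for d in range(dim)
    ]


# Toy outputs for one token from a 3-layer stack (values invented).
token_layers = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
print(elmo_scalar_mix(token_layers, layer_scores=[0.0, 0.0, 0.0], gamma=1.0))
```

With equal scores every layer gets weight 1/3; training the scores lets each task lean on the lower layers (closer to word form) or the upper layers (more context-dependent).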

The research team experimented with several deep learning architectures. Multilayer Perceptron (MLP) networks apply stacked non-linear transformations to their input, while CNNs excel at extracting local features. LSTM networks capture long-range dependencies in sequential data, and EfficientNetB4 balances accuracy and computational cost by scaling network depth, width, and input resolution together (compound scaling).
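For text, the feature extraction attributed to CNNs usually means sliding a filter over windows of consecutive word vectors and keeping its strongest response (max-over-time pooling), so each filter acts as a detector for one n-gram pattern. The sketch below shows a single filter on toy two-dimensional vectors; it illustrates the general technique and is not the study's architecture.

```python
from typing import List

Vector = List[float]


def conv1d_max_pool(sequence: List[Vector], kernel: List[Vector], bias: float = 0.0) -> float:
    """Apply one text-CNN filter and return its max-pooled activation.

    kernel holds one weight vector per position in the window, so its length
    is the n-gram width the filter looks at. Each window gets a ReLU score;
    max pooling keeps the highest one, signalling whether the pattern occurs
    anywhere in the sequence.
    """
    width = len(kernel)
    if len(sequence) < width:
        raise ValueError("sequence is shorter than the filter width")
    scores = []
    for start in range(len(sequence) - width + 1):
        window = sequence[start:start + width]
        activation = bias + sum(
            w * x
            for weights, vec in zip(kernel, window)
            for w, x in zip(weights, vec)
        )
        scores.append(max(0.0, activation))  # ReLU
    return max(scores)


# Four toy word vectors and a width-2 filter (values invented).
words = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
bigram_filter = [[1.0, 0.0], [0.0, 1.0]]
print(conv1d_max_pool(words, bigram_filter))  # 2.0
```

A real text CNN learns many such filters of several widths in parallel and feeds their pooled outputs to a classifier.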

Other models tested include Inception-v3, which runs convolutions of several sizes in parallel and factorizes large convolutions into smaller ones to keep the parameter count down; Xception, which replaces standard convolutions with depthwise separable convolutions; ResNet, whose residual (skip) connections mitigate the vanishing-gradient problem in deep networks; and ConvLSTM, which combines convolutions with recurrence to capture both short- and long-term dependencies in text.
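Xception's convolutions are depthwise separable: a k × k filter is applied to each input channel separately, and a 1 × 1 convolution then mixes the channels. The parameter arithmetic below shows why this is cheaper than a standard convolution; the layer sizes are arbitrary examples and biases are ignored.

```python
def standard_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Weights in a k x k convolution mapping c_in channels to c_out (no biases)."""
    return k * k * c_in * c_out


def separable_conv_params(k: int, c_in: int, c_out: int) -> int:
    """Depthwise k x k filter per input channel, then a 1 x 1 pointwise mix (no biases)."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise


k, c_in, c_out = 3, 128, 256  # example sizes, not taken from the study
print(standard_conv_params(k, c_in, c_out))   # 294912
print(separable_conv_params(k, c_in, c_out))  # 33920
```

For these sizes the separable layer needs about 11.5% of the weights of the standard one.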

The researchers’ proposed ensemble combines CNN and LSTM networks through a voting classifier. Pairing the CNN's feature extraction with the LSTM's sequence modeling is intended to make the framework more robust for Arabic fake news detection.
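The article does not say whether the voting is hard (majority of predicted labels) or soft (averaged class probabilities). With only two models, hard voting ties whenever they disagree, so the sketch below assumes soft voting; the probabilities are invented and the function names are hypothetical.

```python
from typing import List, Optional, Sequence


def soft_vote(model_probs: Sequence[Sequence[float]], weights: Optional[Sequence[float]] = None) -> List[float]:
    """Weighted average of per-class probabilities from several models."""
    if weights is None:
        weights = [1.0] * len(model_probs)
    total = sum(weights)
    n_classes = len(model_probs[0])
    return [
        sum(w * probs[c] for w, probs in zip(weights, model_probs)) / total
        for c in range(n_classes)
    ]


def ensemble_predict(model_probs: Sequence[Sequence[float]], labels: Sequence[str]) -> str:
    """Pick the label whose averaged probability is highest."""
    averaged = soft_vote(model_probs)
    best = max(range(len(labels)), key=lambda c: averaged[c])
    return labels[best]


labels = ["credible", "not credible"]
cnn_probs = [0.3, 0.7]   # hypothetical CNN output for one article
lstm_probs = [0.6, 0.4]  # hypothetical LSTM output for the same article
print(ensemble_predict([cnn_probs, lstm_probs], labels))  # not credible
```

scikit-learn's `VotingClassifier` exposes the same choice through its `voting="hard"` / `voting="soft"` parameter.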

To evaluate performance, the team used standard metrics: accuracy, precision, recall, and F1 score. They also benchmarked the framework against state-of-the-art transformer-based models such as BERT and RoBERTa to assess its competitiveness.
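For a binary fake/credible task, the four metrics follow from counts of true positives (TP), false positives (FP), and false negatives (FN): precision = TP / (TP + FP), recall = TP / (TP + FN), and F1 is their harmonic mean. The sketch below computes them from toy labels; treating “not credible” as the positive class is an assumption, and the study may have reported macro- or weighted averages instead.

```python
from typing import Dict, Sequence


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int], positive: int = 1) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for a two-class problem."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# 1 = "not credible" (the class to catch), 0 = "credible"; toy labels.
y_true = [1, 1, 1, 0, 0, 0]
y_pred = [1, 1, 0, 1, 0, 0]
print(binary_metrics(y_true, y_pred))
```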

The researchers also applied LIME (Local Interpretable Model-agnostic Explanations) to make individual predictions interpretable, showing how specific features contribute to a fake news classification. This kind of transparency is crucial for building trust in automated detection systems.
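LIME explains one prediction by perturbing the input (for text, removing words), querying the model on the perturbed copies, and fitting a small weighted linear model whose coefficients serve as word importances. The sketch below is a deliberately simpler relative of that idea, leave-one-word-out occlusion, rather than LIME itself; the keyword-based `toy_fake_probability` classifier is invented purely to make the example runnable.

```python
from typing import Callable, List, Tuple


def occlusion_importance(text: str, predict_fake: Callable[[str], float]) -> List[Tuple[str, float]]:
    """Rank words by how much deleting each one lowers P(fake).

    This is leave-one-out occlusion, not LIME: LIME samples many random
    word subsets and fits a weighted linear surrogate, while this removes
    exactly one word at a time.
    """
    words = text.split()
    base = predict_fake(text)
    scores = []
    for i, word in enumerate(words):
        without_word = " ".join(words[:i] + words[i + 1:])
        scores.append((word, base - predict_fake(without_word)))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


def toy_fake_probability(text: str) -> float:
    """Invented stand-in classifier: flags texts containing 'shocking'."""
    return 0.9 if "shocking" in text.split() else 0.2


for word, score in occlusion_importance("shocking claim about officials", toy_fake_probability):
    print(f"{word}: {score:.2f}")
```

The open-source `lime` package implements the full method for text through its `LimeTextExplainer` class.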

As misinformation continues to pose significant challenges globally, this research represents an important step forward in developing reliable tools to combat fake news in Arabic-language media, where automated detection has traditionally lagged behind English-language solutions due to language-specific challenges and limited resources.

