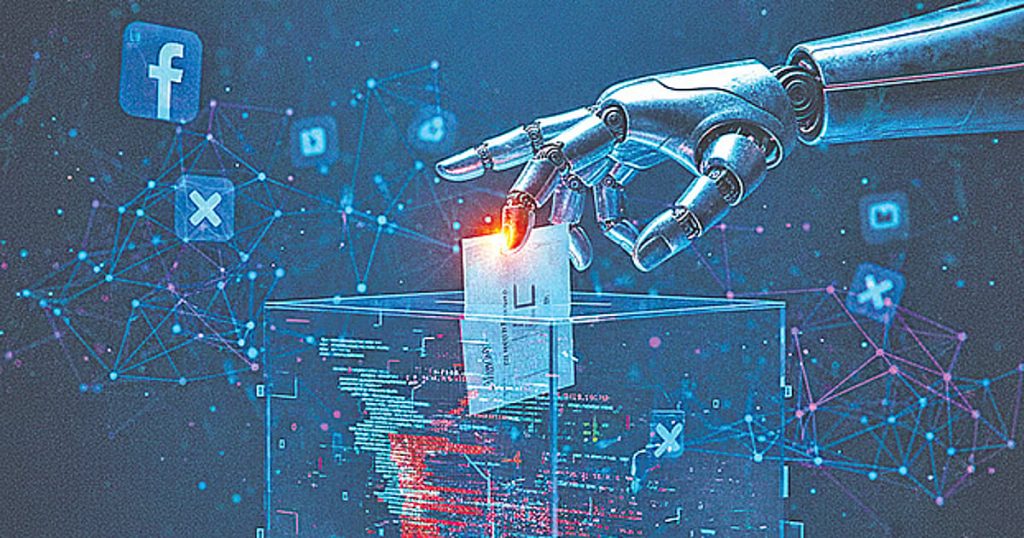

The urgent call for coordinated action against AI misuse has gained momentum as experts warn of growing threats to information integrity and democratic processes. With artificial intelligence technologies becoming more accessible, the potential for sophisticated digital deception has prompted demands for a comprehensive multi-stakeholder approach.

Government authorities and regulatory bodies face mounting pressure to establish clear legal frameworks that specifically address AI-driven misinformation, deepfakes, and digital fraud. Proposals include classifying these activities as criminal offenses with provisions for expedited legal proceedings and significant penalties to serve as deterrents.

Electoral integrity has emerged as a particular concern, with recommendations that election commissions and political authorities develop specific guidelines on AI usage in campaign materials. These guidelines would likely require candidates and political parties to provide written commitments against employing deepfakes or misinformation tactics, establishing accountability for digital content distributed during electoral periods.

“The technological capabilities for creating convincing false content have outpaced our safeguards,” said a cybersecurity expert who requested anonymity due to the sensitivity of their work. “Without clear boundaries and consequences, we risk normalizing digital deception in our political discourse.”

Political organizations themselves bear significant responsibility in this evolving landscape. Recommendations include implementing rigorous internal fact-checking protocols for campaign materials, clearly designating official digital channels to help voters distinguish authentic content, and establishing rapid response teams to address suspicious content that appears to originate from their campaigns.

Law enforcement agencies stand on the front line of these efforts. Security experts advocate creating specialized cyber and AI forensic units equipped with advanced technical capabilities to detect, trace, and remove fabricated content. These units would require extensive training in digital forensics and close collaboration with technology platforms to combat sophisticated AI forgeries effectively.

“The technical complexity of detecting AI-generated content demands specialized expertise,” explained Dr. Maya Rodriguez, director of the Digital Ethics Institute. “What makes this particularly challenging is that detection technologies must continuously evolve as generation capabilities advance.”

Media organizations, technology platforms, and civil society groups represent another critical defense layer. Coordinated fact-checking initiatives have begun to emerge across various countries, combining the reach of major platforms with the credibility of independent verification organizations. These efforts aim to flag misleading content before it achieves widespread circulation.

Digital literacy programs have gained traction as a long-term preventive measure. These initiatives focus on equipping citizens with the critical thinking skills necessary to evaluate online information independently, reducing vulnerability to manipulation tactics.

Industry observers note that technology companies face increasing expectations to implement more robust content authentication systems. Several major platforms have begun testing digital watermarking and content provenance tools that could help users identify AI-generated material.

The multifaceted approach reflects growing recognition that no single entity can address the challenges posed by AI misuse on its own. Rather, durable safeguards require coordination among government regulation, corporate policy, civic education, and individual responsibility.

As artificial intelligence capabilities continue advancing rapidly, the stakes for implementing these protective measures grow higher. Without proactive intervention, experts warn that declining trust in digital information could undermine public discourse, electoral integrity, and ultimately democratic institutions themselves.

“This isn’t just about preventing isolated instances of deception,” emphasized Dr. Rodriguez. “It’s about preserving the foundations of informed citizenship in the digital age.”

10 Comments

Kudos to the experts sounding the alarm on this issue. Safeguarding election integrity in the digital age requires ongoing vigilance and a coordinated, proactive approach across government, tech, and civil society.

Exactly. With the proliferation of AI-powered disinformation tools, maintaining public trust in the democratic process has become an urgent priority that demands immediate and sustained attention.

This is a worrying trend that merits serious concern. The threat of deepfakes and AI-driven misinformation undermining free and fair elections is a grave risk to democracy. Decisive action is clearly needed to address this challenge.

Well said. The stakes are extremely high, and a robust, multi-stakeholder response will be essential to protect the integrity of our elections and safeguard the foundations of democratic governance.

This is a complex challenge with high stakes. While the technology itself is neutral, the potential for bad actors to exploit it for political gain is very concerning. A multi-pronged response is clearly needed.

Agreed. Establishing clear rules, improved detection capabilities, and public education will all be crucial components of an effective strategy to mitigate the risks posed by deepfakes and AI-fueled misinformation.

This is a really concerning issue. The potential for deepfakes and AI-driven misinformation to undermine election integrity is quite alarming. Strict regulations and enforcement will be critical to safeguard the democratic process.

I agree, the threat of digital deception is very serious. Clear legal frameworks and stiff penalties are needed to deter these abuses and protect the integrity of our elections.

As AI and deepfake tech becomes more advanced, the risks of electoral fraud and voter manipulation are growing exponentially. Rigorous authentication and fact-checking measures must be implemented to ensure the credibility of political messaging.

Absolutely. Proactive steps by election officials, tech companies, and the public will all be vital to combat the spread of AI-generated disinformation and maintain trust in the democratic process.