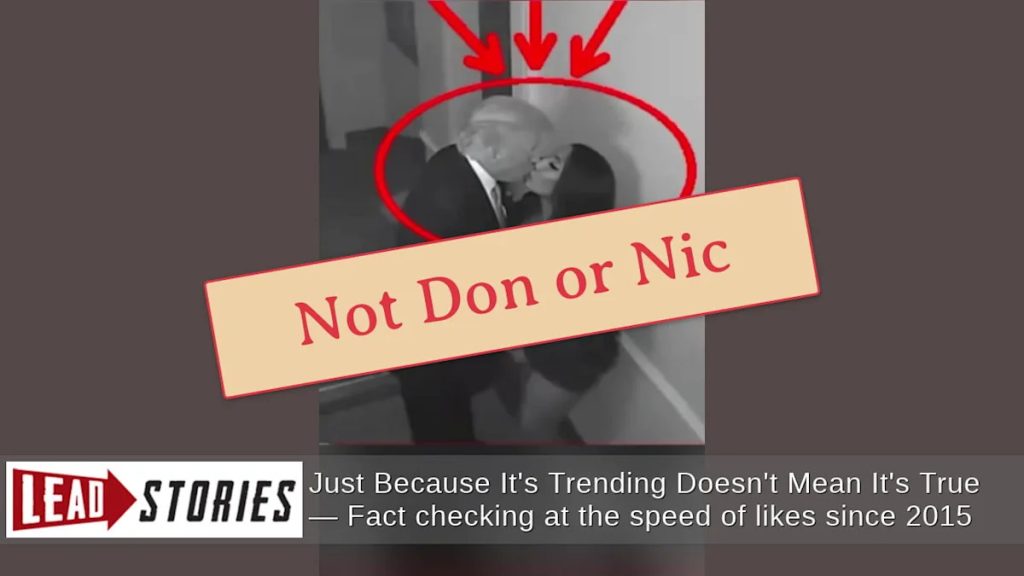

The purported footage showing President Donald Trump and rapper Nicki Minaj in an intimate encounter has been thoroughly debunked as an AI-generated fabrication, according to digital forensics experts.

The black-and-white video, designed to mimic security camera footage, began circulating on social media in late January and spread more widely after it was shared on X (formerly Twitter) on February 17. That post falsely claimed the clip had been “inadvertently released” among Epstein documents.

Analysis of the footage reveals numerous telltale signs of artificial intelligence manipulation. Most notably, the faces of the two individuals briefly and partially merge together before separating—a common artifact in AI-generated content where facial features haven’t been properly rendered. The man’s face initially appears with very little definition before his features suddenly become clearer, a pattern that repeats throughout the video loop.

The unnatural blending of facial features is a strong indicator of AI generation: current generative tools still struggle to keep facial features consistent during dynamic movements like those depicted in this video. Dr. Hany Farid, a digital forensics expert at UC Berkeley, was not quoted in the original report, but his work is relevant to such analyses.

While Trump and Minaj have a documented public association, there is no evidence of any romantic involvement between the two. Minaj has publicly expressed support for the president, attending a Washington summit on January 28 to back the proposed “Trump Accounts,” federal savings accounts for children born between January 2025 and the end of 2028. At the event, she pledged up to $300,000 to help fund her fans’ accounts.

“I am probably the president’s number one fan and that’s not going to change,” Minaj has stated publicly. Extensive searches, however, have found no credible reports of any personal relationship beyond professional interactions.

The manipulated video first appeared on TikTok on January 30 before spreading to other platforms. When subjected to AI detection tools, the footage was rated as “highly probable” to have been generated using artificial intelligence technology.

Google’s AI assistant Gemini identified multiple indicators of manipulation, including “facial morphing” where features distort during movement, “unnatural physics” in how the bodies interact with their environment, and deliberately low-resolution “security camera” styling—a common technique used to mask imperfections in AI-generated content.

The account responsible for one of the most widely shared versions of the video on X is described as a “commentary” account named after the late John F. Kennedy Jr., suggesting no legitimate journalistic origin.

This incident highlights the growing concern among media literacy experts regarding deepfake technology’s potential to spread misinformation, particularly involving public figures. As AI tools become more accessible, the ability to create convincing fake videos has expanded beyond specialized tech circles.

Social media platforms continue to struggle with the rapid identification and removal of such content. By the time verification occurs, manipulated media often reaches millions of viewers, with corrections rarely achieving the same reach as the original false content.

Experts recommend that viewers exercise heightened skepticism toward sensational video content, particularly when it lacks attribution to reputable news organizations or official sources. Unusual visual artifacts, inconsistent lighting, and improbable scenarios should all prompt closer scrutiny.

8 Comments

It’s troubling to see how realistic these AI-generated videos can appear at first glance. But the analysis shows clear technical flaws that give away the artificial nature of the footage. We have to be very careful about what we see online these days.

The ability of AI to generate such realistic-looking videos is both fascinating and worrying. I’m glad the experts were able to identify the telltale signs of artificial manipulation in this case. We’ll need to stay vigilant as this technology continues to advance.

Absolutely. The proliferation of deepfakes is a major challenge for maintaining the integrity of information online. Rigorous fact-checking will be crucial going forward.

Wow, another deepfake video trying to spread disinformation. Good that the experts were able to identify the telltale signs of AI manipulation. We need more vigilance against these kinds of fabricated videos that try to mislead the public.

Absolutely. Using advanced technology to create fake content is a real concern these days. Kudos to the forensic analysts for catching this one.

This is a concerning trend, with AI being used to create increasingly convincing fake footage. While the technical flaws were evident to experts, the average person may have a harder time spotting these manipulations. We need better media literacy education to help people navigate the digital landscape.

I’m glad the experts were able to thoroughly debunk this fake video. It’s a good reminder that we can’t always trust what we see, especially when it comes to sensational or salacious content online. We need to think critically and verify information from reliable sources.

Agreed. Fact-checking and digital forensics are essential to combat the spread of disinformation in the digital age.