
AI-Generated Video Falsely Shows ICE Agent Entering California University Classroom

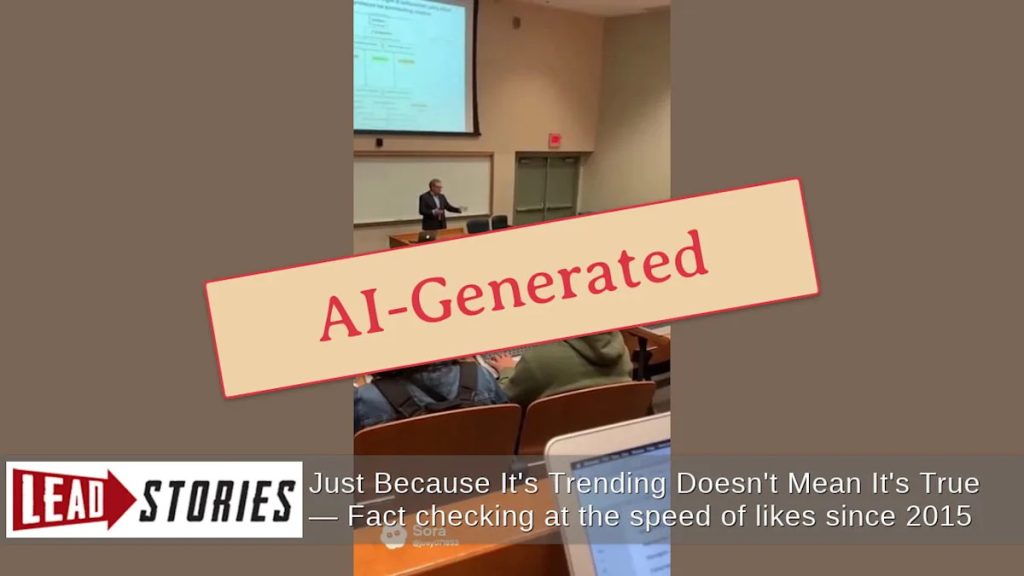

A video circulating on social media purportedly showing an Immigration and Customs Enforcement (ICE) agent entering a classroom at California State University, San Bernardino has been confirmed as fake. The video, which has garnered significant attention online, was created using artificial intelligence technology.

The clip first appeared on TikTok through the account @eye.eat.ai on January 19, 2026, with a caption stating “ICE Enters Class At Cal State University Looking For Student.” Despite its realistic appearance, the footage contains several telltale signs of AI generation, including multiple instances of the Sora watermark throughout the video.

Sora, a generative AI tool developed by OpenAI, has become increasingly sophisticated at creating realistic-looking videos that can be difficult to distinguish from authentic footage. This particular video represents a concerning example of how AI can be used to spread misinformation about sensitive topics like immigration enforcement.

Searches of Google and Yahoo News turn up no credible reports of ICE agents entering classrooms at California State University, San Bernardino, or any other California university in recent months. Such an incident would likely generate significant media coverage and public attention, particularly in California, where immigration policies and enforcement activities are closely scrutinized.

The circulation of this fabricated video comes amid ongoing tensions surrounding immigration enforcement in the United States. California, as a sanctuary state, has laws limiting cooperation between local law enforcement and federal immigration authorities, making the scenario depicted in the video particularly provocative.

Universities across the country, including those in the California State University system, have established policies regarding immigration enforcement on campus. Many institutions have declared themselves “sanctuary campuses” that provide certain protections to undocumented students, which makes claims of enforcement activity on campus especially sensitive.

Dr. Emily Rodriguez, an immigration policy expert at the University of California, commented on the broader implications of such fabricated content: “These AI-generated videos can cause real harm by spreading fear in immigrant communities and on college campuses where many students already feel vulnerable. They undermine trust in information at a time when accurate knowledge about rights and procedures is crucial.”

The emergence of this video highlights the growing challenge of digital literacy in an era where AI tools can create increasingly convincing fake content. Social media platforms continue to grapple with how to identify and flag AI-generated material that could mislead viewers or cause public alarm.

Technology ethics experts point to this incident as evidence of the need for stronger watermarking and disclosure requirements for AI-generated content, particularly when it depicts scenarios involving law enforcement or government agencies.

California State University officials have not yet issued a formal statement about the video, though university systems typically maintain clear protocols for any law enforcement activity on campus, including federal immigration enforcement.

As AI-generated content becomes more sophisticated and widespread, media literacy experts emphasize the importance of verifying information through multiple credible sources before accepting videos at face value, particularly when they depict controversial or emotionally charged scenarios.

