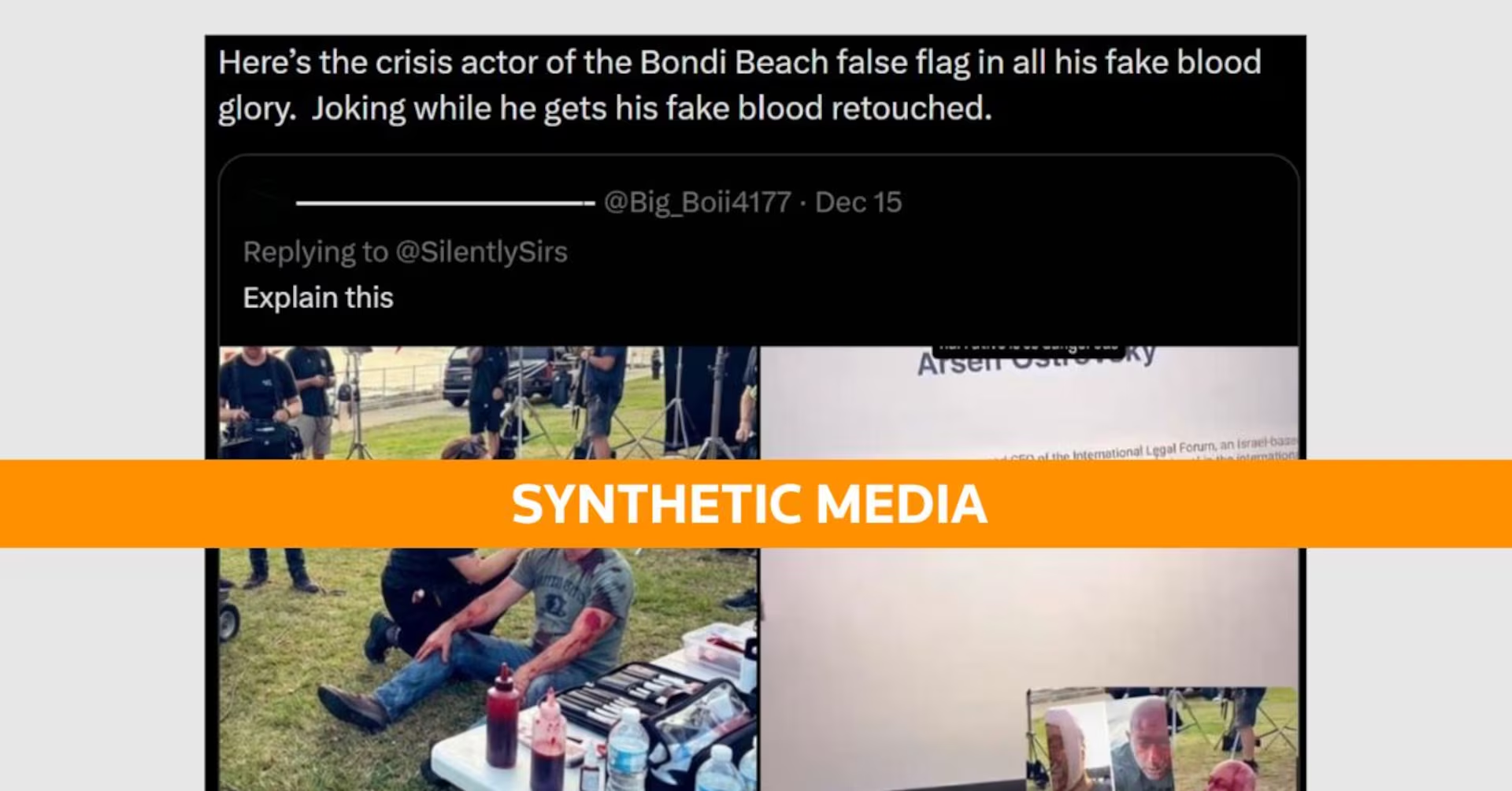

False images claiming to show victims of the Bondi Beach shooting applying fake blood have been identified as AI-generated content, according to an analysis using Google’s detection tools.

Social media posts circulating since December 15 have promoted conspiracy theories that Australia’s worst mass shooting in nearly three decades was staged. These posts included a fabricated image purportedly showing a man cheerfully receiving makeup touch-ups to create fake blood injuries, with makeup brushes and containers of red liquid clearly visible in the frame.

Google’s SynthID detection tool, which identifies hidden watermarks embedded in images created with Google’s AI models, confirmed the image was synthetically produced. The finding shows the image is a fabrication, undercutting claims that the Bondi Beach attack was a “false flag” operation using crisis actors.

Several posts specifically targeted Arsen Ostrovsky, a genuine victim of Sunday’s attack, falsely claiming he was an actor faking his injuries. Ostrovsky, a human rights lawyer, was interviewed by 9 News Australia with a bandage wrapped around his bloodied head following the incident. He told the broadcaster that he was “hit in the head” during the shooting and separately informed the Wall Street Journal that a bullet had grazed his head.

Responding to the disinformation campaign, Ostrovsky wrote on the social media platform X that he was aware of the “twisted fake AI campaign” suggesting he had fabricated his injuries. “I saw these images as I was being prepped to go into surgery today and will not dignify this sick campaign of lies and hate with a response,” he wrote.

The Israeli embassy in Australia later posted photos of its ambassador visiting Ostrovsky in hospital as he recovered from surgery. The images showed him with a sufganiyah, a deep-fried doughnut traditionally eaten during the Jewish festival of Hanukkah.

This incident highlights a growing concern among security and media experts about the sophisticated use of AI to create and spread misinformation following traumatic events. The technology makes it increasingly difficult for the public to distinguish between authentic and fabricated content, particularly during breaking news situations when emotions run high and information is limited.

False claims following mass casualty events are not new, but increasingly sophisticated and believable AI-generated imagery creates additional challenges for fact-checkers and news consumers alike.

Law enforcement and intelligence agencies worldwide have warned about the potential for artificial intelligence tools to be weaponized to sow discord, undermine trust in institutions, and deepen societal divisions following traumatic events.

Social media platforms continue to struggle to moderate such content effectively, particularly when images spread across multiple platforms before fact-checkers can verify their authenticity.

The Bondi Beach shooting, which claimed multiple lives, is Australia’s worst mass shooting in almost 30 years. Misinformation about it is particularly harmful to victims, their families, and the broader community as they process the attack.
