
#

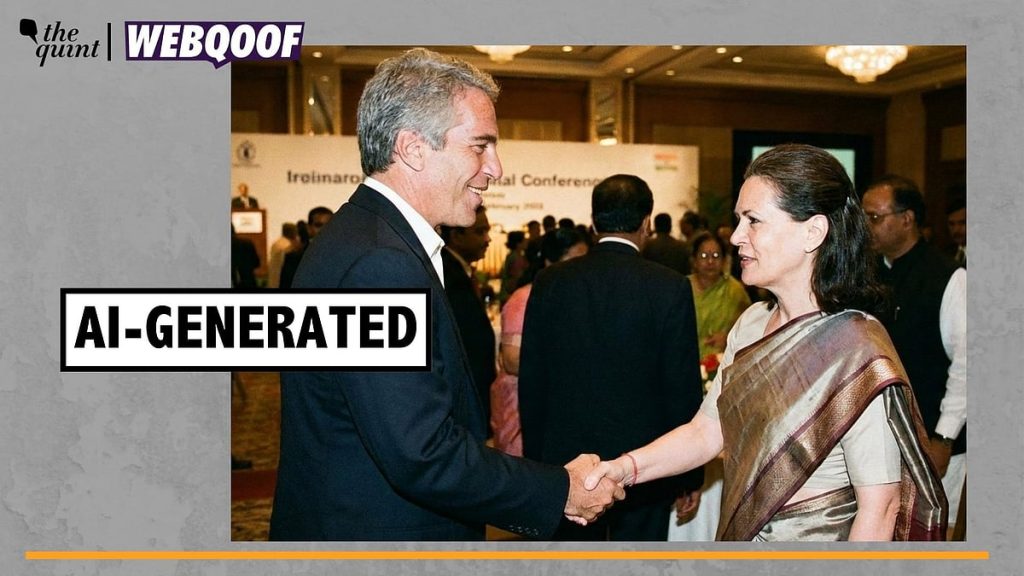

Debunked: Viral Image of Sonia Gandhi with Jeffrey Epstein is AI-Generated

A fabricated image purporting to show Congress leader Sonia Gandhi shaking hands with convicted sex offender Jeffrey Epstein has gained significant traction on social media platforms, misleading thousands of users who believed the interaction was authentic.

The image began circulating widely after X (formerly Twitter) user ‘Avinash Mathankar’ shared it with a provocative caption in Hindi. The caption translates to “NATION WANT TO KNOW @SupriyaShrinate @abbas_nighat. This meeting took place on which land, its entry is there in which file.” The post has amassed over 300,000 views, indicating the broad reach of the misinformation.

Upon investigation, fact-checkers have conclusively determined that the image is entirely fabricated using artificial intelligence technology. There is no record of any meeting between Gandhi and Epstein, who died in 2019 while awaiting trial on sex trafficking charges.

This instance highlights a growing trend of AI-generated deepfakes targeting political figures across the spectrum. As artificial intelligence tools become more accessible, such fabrications have become a significant concern during India’s ongoing election season, when digitally manipulated content is often designed to damage reputations.

Digital forensic experts point to several telltale signs of AI manipulation in the image, including inconsistent lighting, unnatural hand positioning, and subtle distortions around the faces—characteristics commonly associated with current AI image generation technologies.

“What makes these deepfakes particularly dangerous is their increasing sophistication,” said Dr. Avinash Kumar, a digital misinformation researcher at the Centre for Media Studies in New Delhi. “Even a year ago, the average person could spot most AI-generated images. Today’s tools create much more convincing fakes that require careful scrutiny to identify.”

The Congress party has faced numerous instances of digitally manipulated content during this election cycle. Similar fabricated images have targeted politicians across party lines, including Prime Minister Narendra Modi and other prominent leaders.

Social media platforms like X, Facebook, and WhatsApp have become primary vectors for spreading such misinformation. Despite platform policies against manipulated media, enforcement remains challenging due to the volume of content and the speed at which it spreads.

Media literacy experts recommend that users verify images through reverse image searches, check multiple credible news sources, and maintain healthy skepticism toward provocative images, particularly those showing unexpected meetings between controversial figures.

“The political implications of such fabrications cannot be overstated,” noted political analyst Seema Chowdhury. “In a polarized electoral environment, even debunked images leave lingering impressions on voters. The psychological impact often survives long after the factual correction.”

This incident comes amid heightened scrutiny of misinformation in India, where election authorities have established special units to monitor digital content. The Election Commission of India has repeatedly warned against the spread of misleading content that could potentially influence voter behavior.

Jeffrey Epstein, a disgraced American financier who died in 2019, has become a frequent element in conspiracy theories worldwide due to his connections with powerful global figures before his downfall and controversial death.

As elections progress across India, fact-checkers and media organizations have intensified efforts to quickly identify and debunk such manipulated content. However, the challenge remains formidable as AI-generated imagery continues to evolve in sophistication and believability.

The viral spread of this particular false image serves as a sobering reminder of how quickly misinformation can reach hundreds of thousands of viewers before fact-checks can catch up—a digital reality that continues to challenge democratic discourse in the age of artificial intelligence.


8 Comments

This is a good example of the dangers of AI-generated deepfakes. While the technology can be used for benign purposes, it’s also being misused to create misleading and potentially harmful content. We need stronger safeguards to address this growing problem.

I agree, the proliferation of deepfakes is a serious issue that needs to be addressed. Fact-checking and media literacy are crucial to combat the spread of misinformation.

This case highlights the need for increased vigilance and media literacy when it comes to online content. While AI can be a powerful tool, its misuse to create disinformation is a serious concern that requires a multi-pronged approach to address.

The use of AI to create fake images is a worrying trend that undermines public trust. It’s essential that we develop robust strategies to identify and counter such manipulations, and promote media literacy to empower people to think critically about online content.

It’s concerning to see how easily these types of fabricated images can gain traction online. We need to be extra cautious about the sources we trust, especially when it comes to politically charged content.

This is a timely reminder of the importance of verifying the authenticity of online content, particularly when it involves sensitive political information. AI-generated deepfakes pose a real threat to the integrity of our public discourse.

Absolutely. Fact-checking and critical thinking are essential skills in the digital age, as the line between reality and fiction continues to blur.

Interesting, it seems like this image was fabricated using AI technology. It’s concerning how easily misinformation can spread these days, especially around political figures. We need to be vigilant in verifying the authenticity of online content.