
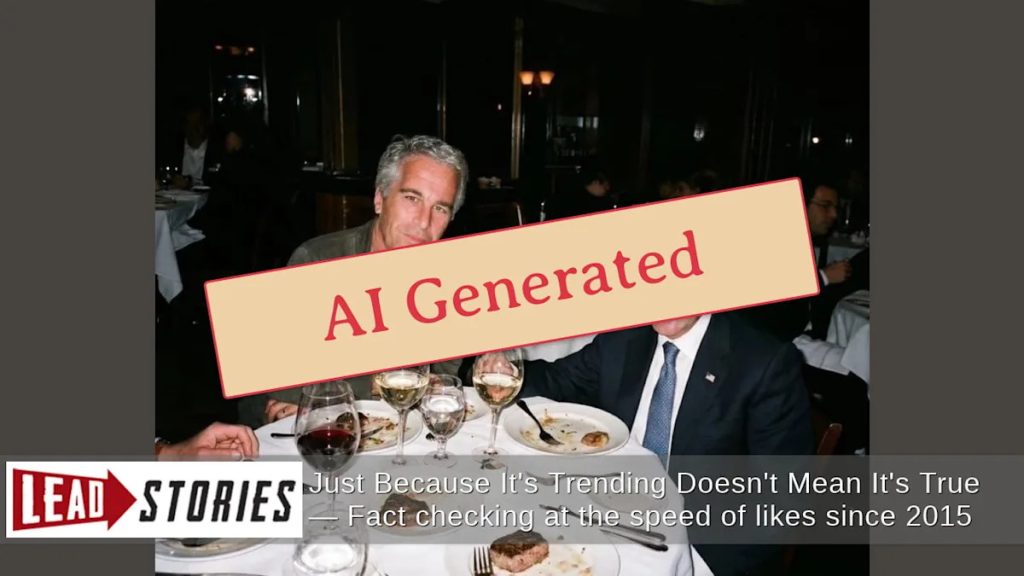

In a digital landscape rife with misinformation, AI technology is increasingly being weaponized to create convincing fake images of public figures. The latest example is a purported photograph of Senate Minority Leader Chuck Schumer dining with convicted sex offender Jeffrey Epstein, an image that has been conclusively identified as artificially generated.

Multiple AI detection tools indicate that the image is fabricated. When it was analyzed with Google's Gemini, the system detected a SynthID watermark embedded in the photograph. SynthID is an imperceptible digital watermark that Google's generative AI tools add to content they create or edit, so its presence marks the image as a product of those tools.

The verification did not stop there. The image was also uploaded to several other detection services, and each flagged it as synthetic. These tools are designed to pick up the telltale signatures that AI-generated images leave behind, even when those images look convincing to the human eye.

“This is a textbook example of how artificial intelligence can be misused to create political disinformation,” said a digital forensics expert who requested anonymity. “The technology has advanced to the point where casual observers might easily believe such images are authentic documentation of events that never occurred.”

The verification also went beyond technical analysis. Researchers reviewed the Department of Justice's Epstein files, examining all 54 mentions of Senator Schumer in the documents available as of February 2026. They found no photographs or other documentation of any meeting between Schumer and Epstein.

Reverse image searches with Google Lens found the image only on the social media platforms where the fake has been circulating. Google News searches using relevant keywords turned up no credible reports of the two men ever dining together, a meeting that would almost certainly have drawn significant media coverage had it actually occurred.

The circulation of this fabricated image highlights the growing challenge facing voters and news consumers in distinguishing between authentic and manipulated content, particularly as the United States approaches another contentious election cycle.

Digital misinformation experts note that politically motivated fake images are becoming increasingly sophisticated and difficult to identify without specialized tools. This evolution represents a significant escalation from earlier, more easily spotted manipulations, presenting new challenges for fact-checkers and news organizations.

“What makes these AI-generated images particularly dangerous is their ability to exploit existing narratives and biases,” explained Dr. Jennifer Kavanagh, a senior fellow at the RAND Corporation who studies truth decay and misinformation. “People are more likely to believe and share content that aligns with their existing political views, regardless of its authenticity.”

The circulation of this fake image comes amid heightened political tensions and increased scrutiny of politicians’ associations following the release of various Epstein-related documents. Public interest in these connections has created fertile ground for misinformation to flourish.

Social media platforms continue to struggle with effectively containing the spread of such manipulated content, despite implementing various detection systems and warning labels. By the time fact-checks occur, false images have often already reached millions of viewers.

As AI technology continues to advance, distinguishing between authentic and fabricated visual content will likely become increasingly challenging for the average person. This developing situation underscores the critical importance of digital literacy and a healthy skepticism toward provocative images that emerge on social media, especially those depicting high-profile political figures in compromising or controversial situations.

