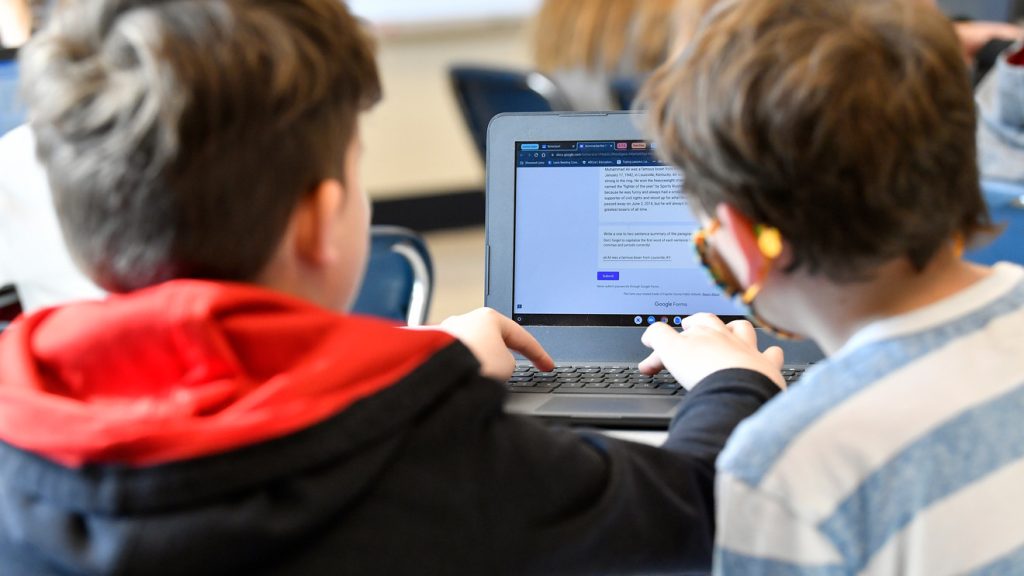

In a landmark move that has drawn global attention, Australia has implemented the world’s first nationwide ban prohibiting anyone under 16 from having social media accounts. The sweeping legislation, which took effect on Wednesday, shifts responsibility away from children and parents, placing the burden of enforcement squarely on technology companies.

The ban was enacted through the Online Safety Amendment (Social Media Minimum Age) Act 2024, which establishes a firm legal minimum age for access to major digital platforms. Under the new rules, parental permission cannot override the restrictions and teenagers cannot self-certify their age. Minors themselves face no penalties for attempting to access these platforms.

“This represents a fundamental shift in how we approach youth online protection,” said a spokesperson from Australia’s eSafety Commissioner’s office. “Rather than asking families to navigate these complex spaces alone, we’re requiring companies to take meaningful action.”

The legislation impacts virtually all major social media platforms, including TikTok, Instagram, YouTube, Snapchat, X (formerly Twitter), Facebook, Reddit, Twitch, Threads, and Kick. A small number of services remain accessible to minors, including YouTube Kids, WhatsApp, Google Classroom, Messenger Kids, and various educational and helpline tools specifically designed for younger users.

According to guidance from Raising Children Australia, platforms must implement age verification methods such as ID-based checks or AI-powered facial age estimation. Companies must also provide alternative verification options for users unable or unwilling to provide identification documents. Enforcement authority falls to the eSafety Commissioner, who can pursue court-imposed penalties against platforms failing to take “reasonable steps” toward compliance.

For the millions of underage Australians currently using these platforms, the change means their accounts must be disabled by the companies themselves. A 14-year-old with an existing TikTok account, for instance, should find their access revoked as companies implement verification systems.

The Australian approach has sparked considerable debate among child safety advocates and digital rights groups. UNICEF Australia, while acknowledging the good intentions behind creating safer online environments, has expressed concerns about the effectiveness of an age-based cutoff.

“A blanket ban doesn’t address the structural issues that make social media potentially harmful,” reads UNICEF’s public statement. “Predatory behavior, harmful content, aggressive algorithms, and inadequate reporting mechanisms remain problems regardless of whether a 15-year-old has an account.”

The organization also criticized the legislative process, noting that young people themselves—the demographic most directly affected by the policy—were minimally consulted during the law’s development.

The Australian legislation comes amid growing global concern about social media’s impact on youth mental health. Research has increasingly linked heavy platform use with anxiety, depression, and body image issues among teenagers, particularly girls. Mental health professionals have pointed to the platforms’ design elements, including endless scrolling feeds and algorithmic content delivery, as potentially addictive and harmful.

The tech industry’s response has been measured. While publicly expressing commitment to user safety, many companies are privately concerned about implementation challenges, particularly regarding reliable age verification methods that balance accuracy with privacy considerations.

In the United States, lawmakers are watching Australia’s implementation closely while pursuing their own regulatory approach. A bipartisan group of senators led by Brian Schatz has introduced the Kids Off Social Media Act, which proposes a more nuanced but still significant set of restrictions.

The U.S. legislation would ban social media accounts for children under 13, reinforcing existing platform policies that are widely circumvented. It would also prohibit algorithmic recommendation feeds for users under 17, effectively eliminating features like TikTok’s “For You Page” and Instagram’s Explore feed for teenagers. The Federal Trade Commission and state attorneys general would have enforcement authority, while schools would be required to limit social media access on their networks.

As Australia begins implementing the policy, technology companies and policymakers worldwide will be assessing both its effectiveness and its unintended consequences. The Australian experiment may well become a template, or a cautionary tale, for other nations grappling with how to protect young people in an increasingly digital world.

12 Comments

While the intent behind this legislation is admirable, I have concerns about how it may impact young people’s ability to access important social and educational resources online. Careful consideration of unintended consequences is warranted.

That’s a fair concern. Policymakers will need to ensure that legitimate uses of social media by minors are not unduly restricted, while still maintaining strong safeguards.

This is a bold move by Australia to prioritize child safety on social media. While parental guidance is important, tech companies should also bear responsibility for protecting minors on their platforms.

I agree. Putting the onus on companies to enforce age restrictions is a sensible approach, rather than relying solely on parents to monitor their children’s online activities.

The social media age ban raises interesting questions about digital rights, privacy, and the role of government in regulating online spaces. It will be fascinating to see how this policy plays out and if other countries follow suit.

Absolutely. This legislation sets a precedent that could have far-reaching implications for how we balance the benefits and risks of social media, especially for young users.

As a parent, I’m cautiously optimistic about this move. While social media can provide valuable connections, the risks for minors are well-documented. Strengthening protections is a step in the right direction.

I share your perspective. Protecting children online should be a top priority, even if it means challenging the business models of social media giants.

This policy seems like a reasonable compromise, holding tech companies more accountable while still allowing parents to make decisions for their own families. It will be interesting to see the long-term impacts.

Agreed. The challenge will be in striking the right balance between protecting minors and preserving digital freedoms. Careful implementation and monitoring will be key.

From a compliance perspective, I wonder how effectively this age ban can be enforced across all social media platforms. Robust verification systems and international cooperation may be necessary for it to be truly effective.

That’s a good point. Enforcement will be critical, and technology companies will need to invest heavily in age-verification tools and processes to comply with the new regulations.