
The rapid evolution of artificial intelligence has fundamentally transformed how people consume information, ushering in what experts increasingly call the “infocalypse” and creating unprecedented challenges for media literacy and fact verification.

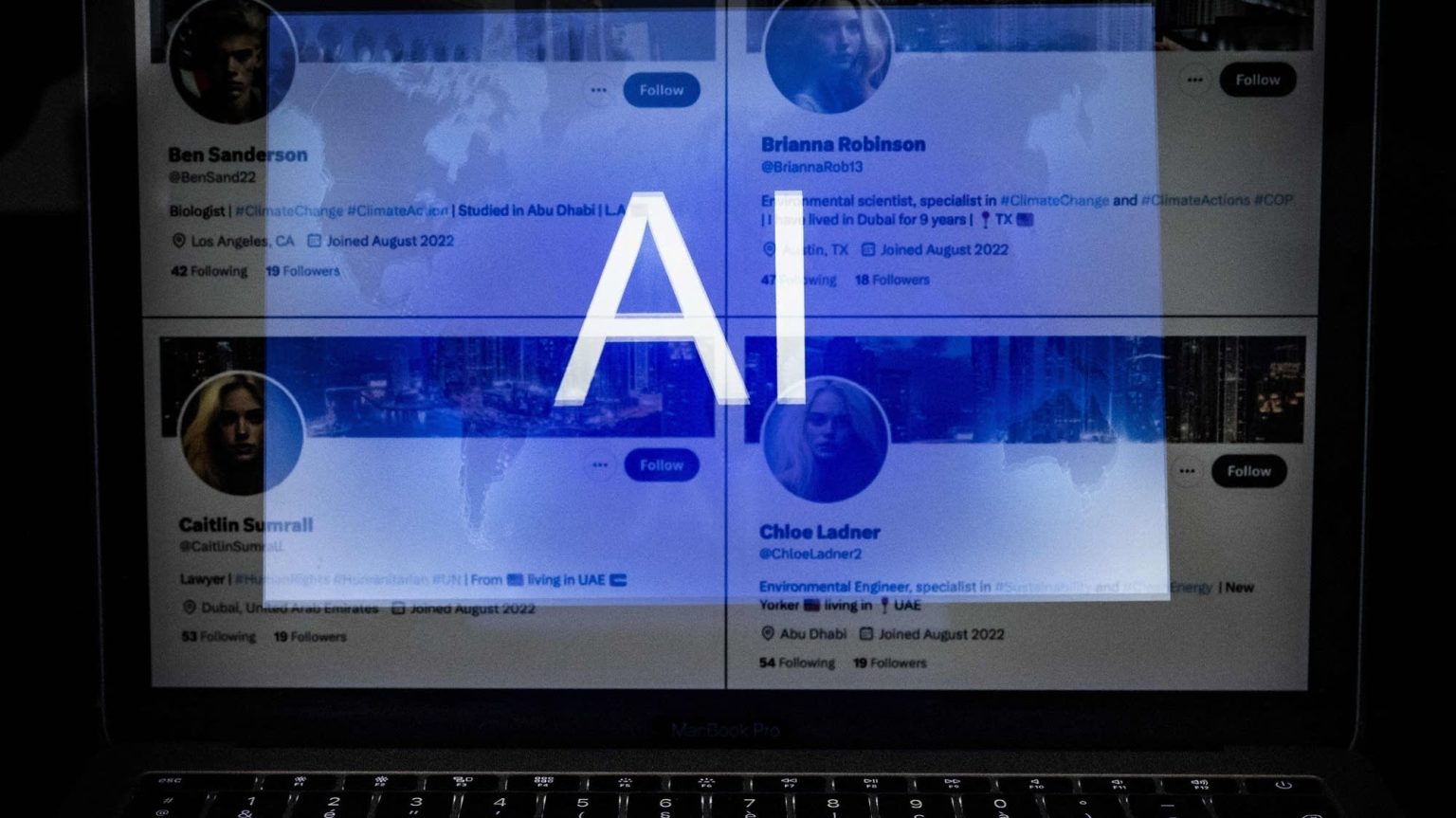

The term, a portmanteau of “information” and “apocalypse,” describes a reality in which distinguishing authentic from fabricated content has become extraordinarily difficult for the average person. As AI technologies advance, the creation of synthetic media, including deepfakes, AI-generated articles, and manipulated imagery, has outpaced both the development of detection tools and public awareness.

“We’re entering an era where seeing is no longer believing,” says Dr. Claire Wardle, a misinformation researcher at Harvard University’s Shorenstein Center. “The sophistication of today’s AI-generated content means that traditional methods of verification are increasingly ineffective for most citizens.”

Recent studies highlight the severity of the problem. According to the Pew Research Center, nearly 65% of Americans report having encountered AI-generated content online, while only 23% feel confident in their ability to identify it. This gap of roughly 40 percentage points between exposure and detection ability represents a significant vulnerability in the public information ecosystem.

The media industry finds itself at a critical inflection point. Newsrooms across the country are implementing new verification protocols and training journalists to recognize synthetic content, but these efforts often lag behind technological developments. The Associated Press recently established a specialized AI content detection unit, while Reuters has invested millions in developing proprietary verification software.

“It’s an arms race,” explains Marcus Collins, chief technology officer at a leading media verification startup. “As detection technologies improve, generation technologies become more sophisticated. This cycle places tremendous pressure on information gatekeepers and individual consumers alike.”

The economic implications extend beyond media organizations. Financial markets have experienced volatility following instances of AI-generated false information about public companies. In March, a convincingly fabricated earnings report for a Fortune 500 technology company briefly caused its stock to drop 8% before the content was identified as fraudulent.

Government agencies have begun responding to these challenges. The Federal Communications Commission introduced draft regulations last quarter addressing AI-generated content in broadcast media, while Congress is considering bipartisan legislation that would require disclosure when AI generates or substantially alters political advertisements.

Educational institutions are also adapting. Several major university systems have integrated AI literacy components into their core curricula, focusing on critical thinking skills tailored to navigating synthetic content. The California State University system announced a system-wide initiative requiring all incoming freshmen to complete an AI media literacy course starting next academic year.

“Education is crucial, but it can’t be the only solution,” says Dr. Renée DiResta, technical research manager at the Stanford Internet Observatory. “We need a multilayered approach involving technology companies, policymakers, educators, and media organizations working in concert.”

Social media platforms have implemented varied responses. Meta has expanded its fact-checking program to specifically target AI-generated content, while Twitter (now X) faced criticism for dismantling similar safeguards. Google recently modified its search algorithm to prioritize content from verified news sources when displaying results related to breaking news events.

For consumers, the practical advice remains difficult to follow. Media literacy experts recommend cross-checking claims against multiple sources, checking publication dates, scrutinizing unusual phrasing, and using dedicated verification tools when available. However, these practices demand time and effort that many people cannot consistently devote to the news they consume.

The business community has recognized potential opportunities amid these challenges. Several startups have secured significant venture capital funding to develop consumer-friendly authentication tools and browser extensions that flag potentially synthetic content in real time.

As society adapts to this transformed information landscape, experts emphasize that the “infocalypse” represents not merely a technological challenge but a fundamental shift in how information functions in democratic societies.

“We’re not just talking about occasional misleading content,” says Dr. Wardle. “We’re witnessing a structural transformation in our information environment that requires reimagining how we produce, distribute, and consume information in the digital age.”

