In the digital era, a rising tide of misinformation threatens to overwhelm traditional knowledge systems. What experts once dubbed “The Age of Information” has transformed into an age of disinformation, marked by unfiltered content and increasingly sophisticated artificial intelligence tools.

The explosion of non-peer-reviewed information sources, such as blogs, opinion websites, and social media platforms, has democratized content creation while eroding the quality-control mechanisms that once guided public discourse. This digital transformation has created an environment where expertise is claimed rather than earned, and where algorithms relentlessly feed users content that reinforces their existing beliefs rather than challenging them.

“Today’s information ecosystem allows anyone to position themselves as an expert on any subject,” explains media literacy expert Dr. Amanda Richardson. “The democratization of publishing platforms means everyone can broadcast opinions widely, while sophisticated digital recommendation systems continually serve content that confirms users’ existing biases.”

Ancient wisdom seems particularly relevant to this modern challenge. Proverbs 18:2 warns against those who would rather "show off opinions" than seek understanding, and Proverbs 18:13 cautions that answering before listening is "foolishness and disgrace." These verses emphasize the ethical dimensions of both creating and consuming information.

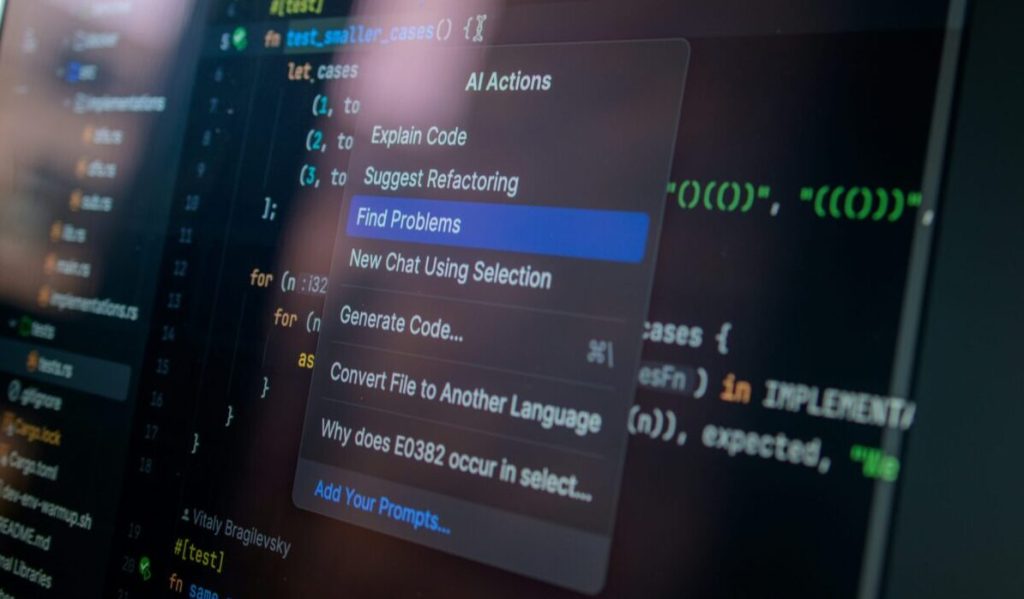

The moral component of information sharing becomes increasingly important as AI systems such as ChatGPT draw on publicly available information to generate responses and summaries. These tools, while potentially beneficial, can amplify existing misinformation or generate plausible-sounding but incorrect content when trained on flawed datasets.

“As with any technology, AI represents a double-edged sword,” notes technology ethicist James Morrison. “The same systems that can democratize knowledge and provide educational opportunities can also spread falsehoods at unprecedented scale when misused or poorly designed.”

The challenge extends beyond technology to human psychology. Confirmation bias—our tendency to accept information that confirms existing beliefs while rejecting contradictory evidence—makes combating misinformation particularly difficult. Studies show that corrections to false information often fail to change minds, especially when the misinformation aligns with deeply held values or identity.

To navigate this complex landscape, media literacy experts recommend several practical strategies. First, approach implausible-sounding claims with healthy skepticism. Second, intentionally seek diverse viewpoints, especially those that challenge your existing beliefs. Third, disengage from sources that consistently manipulate or distort information.

Additional recommendations include consulting primary sources rather than relying on interpretations, reading complete articles rather than just headlines, and evaluating both the tone and content of information. Experts also suggest being particularly careful with emotionally charged topics, as strong feelings can override critical thinking skills.

“The emotional component of misinformation makes it particularly sticky,” explains cognitive psychologist Dr. Sarah Jennings. “When content triggers fear, anger or tribal identity, our ability to evaluate it objectively diminishes significantly.”

Digital platforms have begun implementing fact-checking systems and labeling potentially misleading content, but these efforts remain insufficient against the volume of misinformation being produced. Media literacy education in schools has shown promise, teaching students to evaluate sources critically rather than accepting information at face value.

As society grapples with these challenges, the responsibility falls on both institutions and individuals. Platforms must improve content moderation and transparency, while users need to develop stronger critical thinking skills. The stakes extend beyond personal beliefs to public health, democratic processes, and social cohesion—all of which depend on a shared information environment that prioritizes accuracy over engagement.

In an era where truth seems increasingly elusive, ancient wisdom provides a surprisingly relevant framework: seek understanding rather than validation, listen before speaking, and recognize that both creating and consuming information carries moral weight.