
Russian Disinformation Campaign Uses AI to Create Fake Ukrainian Soldier Videos

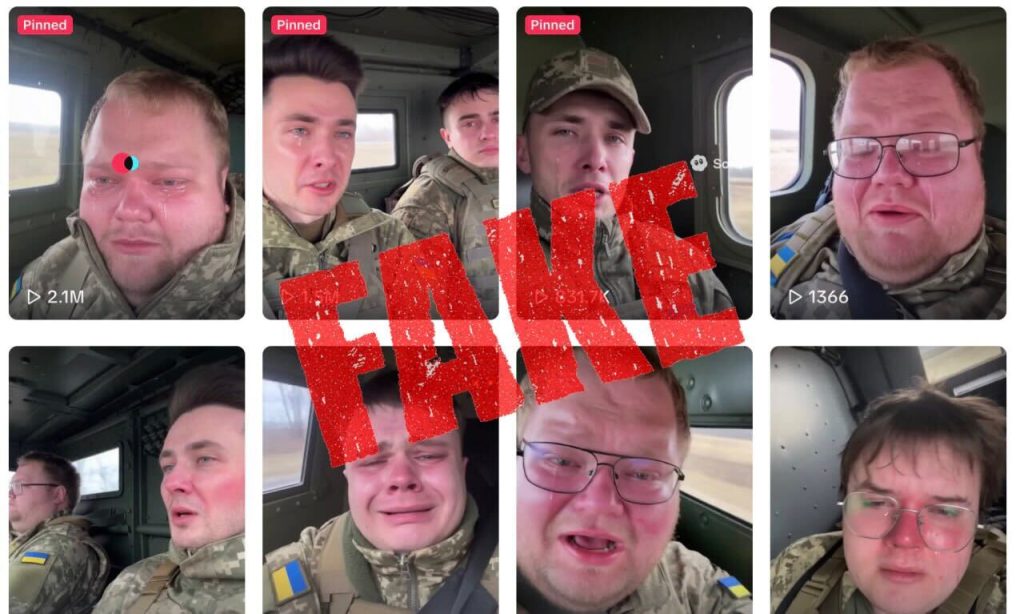

A sophisticated disinformation campaign using artificial intelligence to fabricate videos of distressed Ukrainian soldiers is spreading rapidly across social media platforms, experts warn. The operation, believed to be Kremlin-backed, marks an escalation in Russia’s information warfare tactics against Ukraine.

The campaign centers on AI-generated videos showing what appear to be young Ukrainian men in military uniforms pleading for help and claiming they’ve been forcibly mobilized. These fabricated clips have garnered millions of views on TikTok, X (formerly Twitter), and other platforms.

The videos are built around "tears, panic, and young Ukrainian soldiers pleading for help," and are distributed with subtitles in multiple languages to reach as many audiences as possible.

Analysis by the Kyiv Independent using Hive Moderation, an AI-based content detection tool, confirmed that both the audio and visuals in these videos were generated using Sora, OpenAI’s text-to-video model. Some clips even display the “Sora” watermark, indicating their artificial origin.

One widely shared video features an AI-generated figure claiming to be a 23-year-old who was “mobilized” and sent to Chasiv Yar in eastern Ukraine’s Donetsk Oblast. “Help me, I don’t wanna die, I’m just 23 years old,” the synthetic character pleads.

This directly contradicts Ukrainian law, which sets the minimum age for mandatory mobilization at 25. Ukraine lowered the mobilization age from 27 to 25 in April 2024 and has not lowered it further, a fact the fabricated videos deliberately misrepresent.

Ukraine’s Center for Countering Disinformation has identified additional inaccuracies, noting that one widely circulated video actually uses the face of a Russian citizen rather than a Ukrainian.

Anton Kuchukhidze, an international political scientist and co-founder of the United Ukraine think tank, explains the strategic purpose behind these fabrications: “The information space is a battlefield, and that’s why Russia works tirelessly to push its narratives.”

According to disinformation monitoring experts at LetsData, the campaign aims to portray Ukraine’s mobilization as coercive, featuring false claims of men being “kidnapped” or “hunted” by recruiters. The content is deliberately crafted to foster resentment within Ukrainian society and frame the conflict as “Zelensky’s war.”

The operation targets multiple audiences simultaneously. For Ukrainian citizens, it seeks to “undermine morale in the ranks of the defense and security services” and generate public dissatisfaction, Kuchukhidze noted. The Kremlin also aims to create rifts between Ukraine’s military and political leadership, damaging what Kuchukhidze calls the country’s “unity triad—government, army, and people.”

For international audiences, especially in Western nations supporting Ukraine, the disinformation promotes the narrative that Ukraine “cannot fight and cannot win,” potentially weakening resolve for continued military and financial assistance.

Donald Jensen, a former U.S. diplomat and lecturer in Russian foreign policy at Johns Hopkins University, echoes this assessment: "Russia thinks it's winning the war, but Russia realizes the front is not moving as fast as it should." In his view, the disinformation campaign is an attempt to "demoralize and make the support weaker, especially in Europe," by exploiting existing political divisions among Kyiv's partners.

The use of advanced AI tools like Sora represents a concerning evolution in Russia’s propaganda tactics. While text-to-video technology has legitimate creative applications, its weaponization for political deception poses new challenges for media literacy and content verification efforts.

This campaign was identified as part of the Fighting Against Conspiracy and Trolls (FACT) project, an independent initiative launched in 2025 under the umbrella of the European Digital Media Observatory (EDMO) to combat disinformation.

Despite Russia’s intensified information warfare, Kuchukhidze remains optimistic about Ukraine’s resilience: “Ukraine proved to Europe that Russia can and must be resisted. That undermines a myth Russia spent 20 years spreading—that it was the world’s second-strongest army.”

