In today’s digital age, conflicts have increasingly migrated to online spaces, creating a dangerous ecosystem where disinformation and hate speech flourish. Social media platforms now function simultaneously as battlegrounds and amplifiers of real-world tensions, with profound consequences for societies globally.

While individual users often share content that aligns with their ideological beliefs, state actors and organized groups frequently deploy coordinated campaigns designed specifically to deceive and manipulate. This strategic dissemination of falsehoods—disinformation—aims to mislead populations, damage reputations, and sow division across communities.

Alongside disinformation campaigns, hate speech proliferates across digital platforms, often legitimized by political elites. This toxic content targets individuals or groups based on identity characteristics such as ethnicity, religion, or gender. During conflicts, such rhetoric is weaponized to unify populations against perceived enemies, justify violence, or intimidate those who dissent.

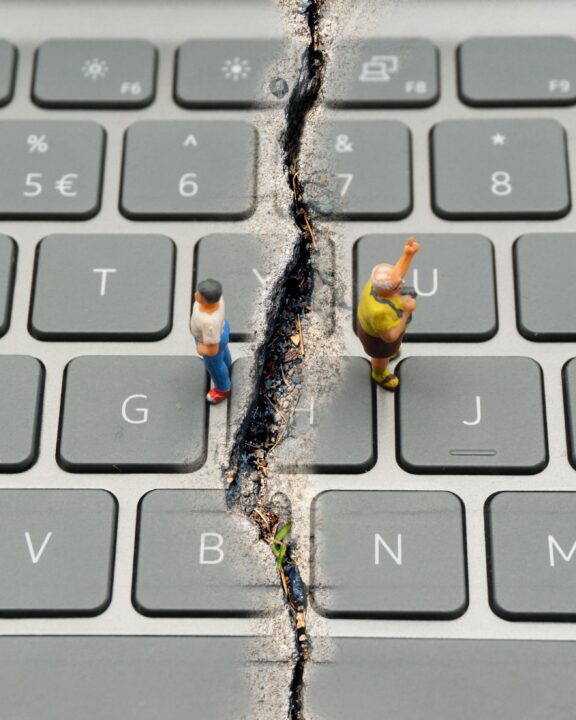

At the center of this digital landscape lies polarization—the fracturing of societies into opposing camps with increasingly rigid worldviews. As individuals become entrenched in supporting one side while dehumanizing the other, disinformation, hate speech, and polarization form a self-reinforcing cycle that deepens societal divisions and sustains conflict.

Empirical research across numerous conflict zones reveals a consistent pattern: disinformation and hate speech surge during political tensions and explode when conflicts turn violent. Warring parties exploit social media to mobilize support, justify military action, and discredit opponents. These digital campaigns have real-world impacts: United Nations investigators concluded that Facebook played a “determining role” in fueling the genocide against the Rohingya in Myanmar, demonstrating how online hate can catalyze physical violence.

In conflict settings, disinformation operates as a sophisticated ecosystem designed to control narratives. It distorts truth, minimizes suffering, and erodes empathy. This weaponization of digital spaces has been evident in multiple ongoing conflicts, including in Palestine, where disinformation campaigns have contributed to the vilification of victims and the denial of documented atrocities.

Narratives portraying children and survivors as “crisis actors” serve to delegitimize real experiences and shield perpetrators from accountability. Through coordinated networks, conflicting parties craft content aimed at engineering consent, shifting blame, and constructing binary worldviews that leave little room for nuance or dialogue.

The Israel-Gaza conflict that erupted in October 2023 showcased digital warfare on an unprecedented scale. Israel reportedly ran a comprehensive disinformation campaign that included a $2 million covert operation using AI-generated content and bot farms to manipulate public opinion. The operation, funded by Israel’s Ministry of Diaspora Affairs and carried out by the Israeli firm STOIC, reportedly targeted U.S. lawmakers with tailored propaganda on platforms such as Facebook, Instagram, and X.

Simultaneously, the Israeli Ministry of Foreign Affairs launched graphic YouTube ad campaigns across Europe and North America designed to generate support for military actions. According to the digital rights group 7amleh, these ads violated platform standards yet remained active. There were also reports of people affiliated with Israel offering payment to influencers for distributing pro-Israel messaging.

Iran has likewise intensified disinformation efforts, particularly during the July 2025 escalation following Israel’s offensive. A coordinated Iranian campaign used over 100 bot accounts on X to post more than 240,000 times, aiming to sway U.S. public opinion and deter potential strikes on Iranian nuclear facilities.

Several factors facilitate the spread of disinformation during conflicts, including widespread lack of verification skills, cognitive biases, and the echo chamber effect. People tend to seek information confirming existing beliefs while avoiding content that challenges them. The emotional nature of conflict-related messaging—marked by fear, anger, or grief—further increases susceptibility to manipulation.

Social media platforms have exacerbated these dynamics through algorithms optimized for engagement, which often amplify divisive content. Platforms have responded to violent conflict mainly through reactive content moderation, an approach that leaves untouched the deeper design issues that fuel conflict dynamics.

Addressing these challenges requires multifaceted approaches. Research suggests that properly structured communication between groups can reduce polarization, though poorly executed contact can worsen divisions. Practical strategies include fostering intergroup dialogue, encouraging empathy through perspective-taking, and highlighting internal disagreements within political groups to disrupt rigid “us versus them” narratives.

Experts recommend that social media platforms shift from reactive moderation to proactive interventions rooted in platform design. This includes moving away from engagement-based content ranking in sensitive contexts, limiting mass dissemination capabilities, and introducing features that foster meaningful interactions.

Comprehensive structural reforms are also essential. The NYU Stern report “Fueling the Fire” advocates for social media platforms to transparently redesign algorithmic systems to reduce inflammatory content, invest in robust content moderation teams, and collaborate with civil society organizations. It also underscores the need for government intervention through legislation mandating transparency and empowering regulatory agencies to enforce standards.

As digital spaces continue to influence real-world conflicts, addressing the interlinked challenges of disinformation, hate speech, and polarization becomes increasingly urgent. Without coordinated action across sectors, these dynamics threaten not just online discourse but democratic stability itself.

19 Comments

The article highlights the complex dynamics between online and offline conflicts. Developing effective policies to address disinformation and hate speech will require a nuanced understanding of these interrelated issues.

This is a concerning trend as digital spaces become battlegrounds for influence and propaganda. Protecting free speech while curbing malicious actors will be a delicate balance to strike.

Absolutely. Disinformation can have real-world consequences, so finding the right policy approaches will be vital to safeguard democracy and social stability.

This is a concerning global challenge that requires a coordinated, multi-pronged response. Strengthening digital literacy, improving platform moderation, and fostering social cohesion will all be important elements of the solution.

Absolutely. Addressing the root causes of polarization and promoting constructive dialogue will be crucial to creating more resilient online communities.

The growth of hate speech and its weaponization during conflicts is deeply troubling. Concerted efforts to promote media literacy and civility online are essential to combat these harmful dynamics.

Agreed. Empowering users to think critically about online content and call out abusive rhetoric is a crucial part of the solution.

Disinformation and hate speech can have devastating real-world impacts, especially during times of conflict. Innovative solutions that empower users and strengthen digital citizenship will be key to tackling these challenges.

Agreed. Promoting media literacy and responsible online behavior will be crucial to building more resilient and cohesive communities.

The article highlights the urgent need to address the proliferation of disinformation and hate speech, which can have devastating real-world consequences. Innovative solutions that empower users and promote digital literacy will be crucial to tackling these challenges.

The article raises important questions about the role of digital platforms and the need for comprehensive policy responses. Balancing free speech with harm mitigation will be a delicate but necessary task.

Polarization fueled by disinformation and hate speech is a major challenge. Strengthening digital citizenship and building bridges across divides could help mitigate these concerning trends.

Combating disinformation and hate speech is crucial, but the solutions must balance freedom of expression with the need for accountability. Careful consideration of unintended consequences will be critical.

Combating disinformation and hate speech during conflicts is a critical challenge that requires a multifaceted approach. Strengthening digital citizenship, improving platform moderation, and fostering social cohesion will all be key elements of the solution.

Well said. Developing effective, holistic policies that address the root causes of these issues will be crucial to safeguarding democratic values and social stability.

The article raises important points about the interplay between online and offline conflicts. Developing comprehensive policy frameworks to address this complex issue will require diverse stakeholder input and innovative approaches.

Agreed. Multistakeholder collaboration and a nuanced understanding of the evolving digital landscape will be key to developing effective solutions.

Combating disinformation and hate speech during conflicts is critical to maintain social cohesion and prevent escalation of violence. Proactive steps by platforms, governments, and civil society are needed to identify and mitigate these toxic online trends.

Agreed. Effective moderation and fact-checking are key to counter the spread of deliberate falsehoods. Transparency and collaboration across stakeholders will be crucial.