In a concerning development ahead of Bangladesh’s elections, artificial intelligence is being weaponized to create deceptive campaign content featuring military personnel, potentially undermining electoral integrity and threatening civil-military relations.

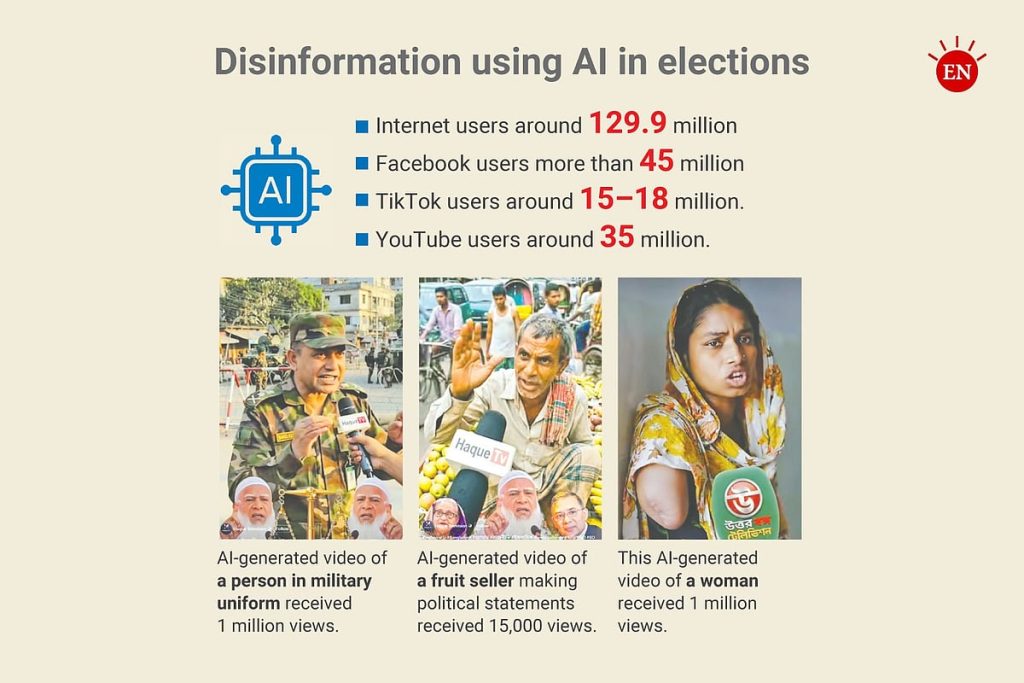

A viral AI-generated video showing a man in Bangladesh Army uniform endorsing a specific political party has gained massive traction on social media. As of yesterday, the deepfake had amassed over one million views, 89,000 reactions, 2,500 comments, and 26,000 shares, according to digital analytics.

Fact-checking organizations have confirmed the video’s fraudulent nature, raising alarms about the growing sophistication of AI-generated content and its potential to mislead voters during the crucial election period. Despite being identified as false, the video continues to circulate widely across social platforms.

The Bangladesh Army addressed these manipulations on January 14, issuing an official warning through its Facebook page. Military officials stated that "vested quarters are spreading AI-generated content and misleading photo cards on social media to damage the army's image," and urged citizens to exercise critical judgment when encountering such content.

Four days after this official warning, the video remains accessible online and may still be shaping public opinion, a sign that platform moderation has proven insufficient. The incident highlights the challenges electoral authorities and social media companies face in managing the rapid spread of AI-generated disinformation.

Political organizations have expressed frustration with the limited response to these sophisticated attacks. Ehsanul Mahbub Jubair, assistant secretary general of Jamaat-e-Islami and head of its publicity department, told Prothom Alo, “Jamaat is being targeted the most through attacks and disinformation using AI. We have raised the issue multiple times in meetings with the Election Commission, but the steps taken are not satisfactory.”

The persistence of this content marks a concerning evolution in digital disinformation tactics. By falsely portraying military endorsements of civilian political parties, these videos risk damaging the traditionally neutral stance of Bangladesh's armed forces and could inflame political tensions in an already polarized electoral landscape.

Digital rights experts point to this case as emblematic of broader challenges facing democracies worldwide. The Bangladesh scenario demonstrates how easy access to increasingly sophisticated AI tools can create convincing fake content that spreads faster than fact-checkers or authorities can respond.

Electoral integrity specialists note that social media platforms are designed to prioritize engagement over accuracy, which makes the viral spread of sensational content, even when false, almost inevitable. Jubair acknowledged this structural challenge, stating, "Social media platforms are open spaces, making it difficult to identify who is doing what."

The Election Commission’s apparent inability to effectively counter these digital threats raises questions about regulatory preparedness for AI-era elections. Traditional electoral safeguards weren’t designed for an environment where convincing digital forgeries can be created and disseminated within minutes.

For Bangladesh, a country with a history of political volatility, the implications extend beyond this specific election. The apparent impersonation of military personnel in campaign materials risks drawing the armed forces into partisan politics, potentially undermining institutional neutrality.

As voting approaches, electoral authorities face mounting pressure to develop more effective responses to AI-generated disinformation. Without robust countermeasures, political actors may increasingly view synthetic media as a viable campaign tactic, further eroding voter trust in electoral information.

This incident is a warning that election security now requires sophisticated digital safeguards alongside traditional protections. As AI tools become more accessible and realistic, the line between fact and fabrication in political discourse continues to blur, presenting novel challenges to democratic processes globally.