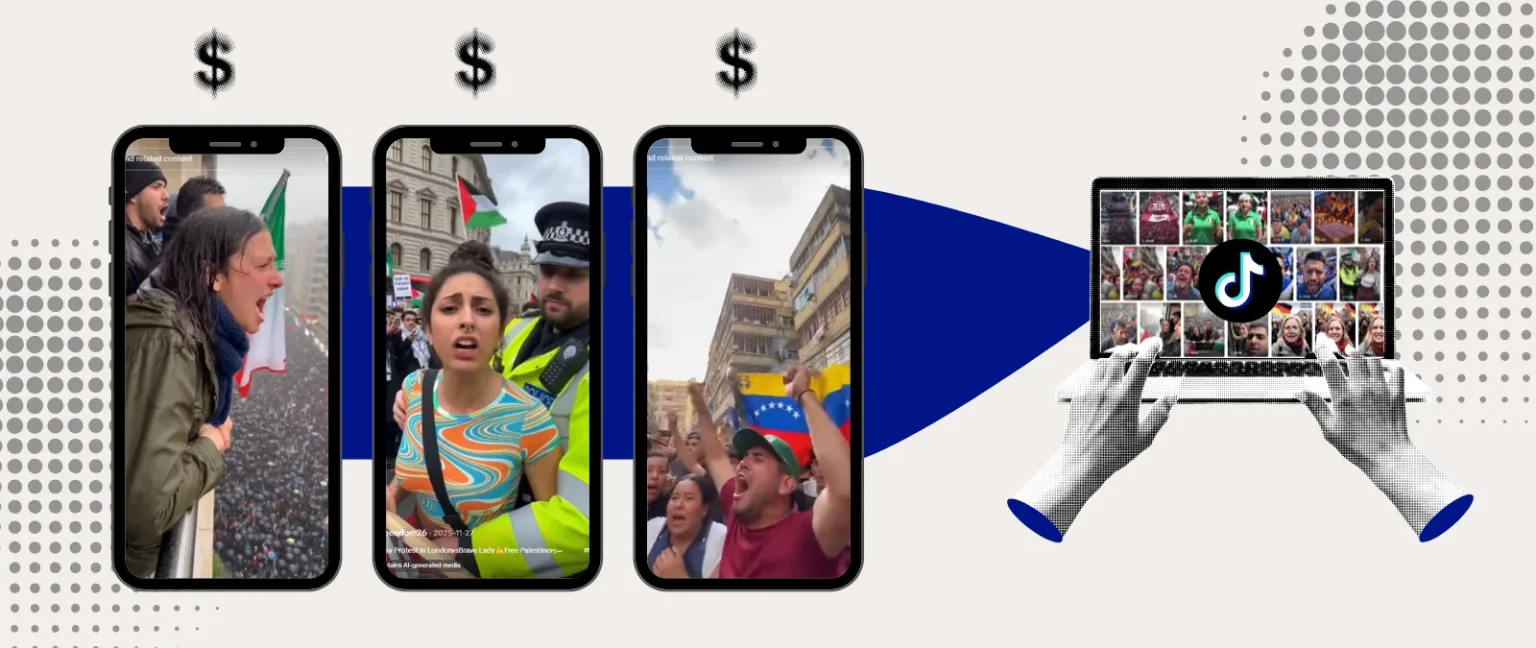

AI-Generated Protest Videos Flood TikTok in Money-Making Scheme

A new investigation has uncovered a disturbing trend on TikTok: hundreds of accounts posting AI-generated videos of fake protests to rapidly gain followers and monetize misinformation. Spanish fact-checking organization Maldita.es identified 550 TikTok accounts that have published more than 5,800 artificial intelligence-generated videos depicting non-existent protests across 18 different countries, collectively amassing over 89 million views.

These fabricated videos show people supposedly rallying for causes in Venezuela, Iran, Palestine, and other places where real political tensions exist. Captions like “A multitude of Venezuelans celebrate with tears and shouts of freedom” accompany realistic-looking footage created using AI tools such as OpenAI’s Sora or Google’s Veo.

After contacting one of the account operators, investigators confirmed that the primary motivation is financial gain. The creator detailed a two-step process: first, using emotionally charged, politically divisive AI-generated content to rapidly grow follower counts, then monetizing these accounts either by qualifying for TikTok’s Creator Rewards Program or by selling the accounts outright.

“We get a sense of trends from the news,” the account operator told investigators. “We’ll create march videos and content focusing on that country so that people watch them more.” They added that this strategy can help accounts gain up to 50,000 followers in just three to four days if the chosen topic is trending strongly.

The political causes themselves appear irrelevant to these content creators. Many accounts shift between different political movements or even support opposing candidates within the same account, depending on what might generate the most engagement. For example, one account posted videos supporting both candidates in Chile’s upcoming presidential election just hours apart.

The financial incentive becomes clear once accounts reach 10,000 followers – the threshold for TikTok’s Creator Rewards Program, which pays content creators directly. Many accounts explicitly mention this goal in their bios, asking viewers to help them reach this benchmark.

“After reaching 10,000 followers, every one-minute video you post will earn you money,” explained the account operator. “You might get roughly $1,000 to $1,500 a month, depending on how much you work.”

While the program is only available in eight countries, including the United States and United Kingdom, the investigation found that creators circumvent geographical restrictions by setting up accounts to appear as if they’re based in eligible regions. Of the 550 identified accounts, more than 60 have already surpassed the 10,000-follower threshold.

Another revenue stream comes from selling these artificially inflated accounts. Some profiles openly advertise this in their bios or when contacted through direct messages. Online marketplaces specializing in social media account sales price these profiles based on follower count, creating a financial incentive to rapidly grow audiences through whatever means possible.

Evidence suggests coordination among these accounts, with many sharing similar usernames, identical creation dates, or even the same profile pictures. The account operator confirmed using temporary email addresses to create multiple accounts through Chrome browsers, explaining that AI tools like Sora allow for the creation of hundreds of videos this way.

While some accounts label their content as AI-generated, many videos go viral as though they showed authentic protests. The fabricated content often spreads beyond TikTok to platforms like Facebook, Instagram, X (formerly Twitter), and YouTube, where it can accumulate thousands of additional interactions.

The practice potentially violates TikTok’s own policies, which prohibit AI-generated content that is “misleading about matters of public importance” and ban account selling. Moreover, it appears to run counter to the European Union’s Digital Services Act, which requires large platforms to assess and mitigate risks of negative effects on “civic discourse and electoral processes.”

Despite these rules, TikTok appears to be failing to enforce its policies effectively. The result is a growing ecosystem of accounts that profit from artificial, politically charged content, potentially funded by TikTok’s own Creator Rewards Program, at a time when authentic information is increasingly crucial for democratic processes worldwide.

10 Comments

The use of AI to generate fake protest videos for financial gain is a serious issue that undermines trust and spreads misinformation. Platforms need to be proactive in identifying and removing this kind of synthetic content.

Absolutely. The scale of the problem, with over 550 accounts and 5,800 videos, is very concerning. Concerted efforts from platforms, fact-checkers, and authorities are needed to address this threat to public discourse.

This is a troubling example of how AI can be misused to create and amplify disinformation. The fact that it’s being done for monetary gain makes it even more concerning. We need stronger measures to detect and prevent this kind of synthetic content.

Agreed. The ability to rapidly grow follower counts with fabricated videos and then monetize those accounts is especially problematic. Platforms must take this threat seriously and implement robust solutions.

I’m appalled to learn about this scheme to use AI-generated protest videos for financial gain. Exploiting political tensions and spreading fabricated content is a serious problem that undermines trust and public discourse.

You’re right, this is a very worrying development. I hope platforms and authorities can quickly identify and shut down these kinds of malicious operations before they can do further damage.

While AI can be a powerful tool, it’s deeply troubling to see it being exploited to create fake protest footage and profit from spreading misinformation. Platforms need to be vigilant in detecting and removing this kind of synthetic content.

Absolutely. The monetization aspect is especially alarming, as it provides a financial incentive to create and disseminate disinformation. Robust detection and enforcement are critical to addressing this issue.

This is very concerning. Using AI to fabricate protest videos and spread disinformation for financial gain is a troubling trend. We need robust safeguards and fact-checking to combat the spread of these manipulated videos.

Agreed. It’s crucial that platforms like TikTok take stronger action to identify and remove this type of deceptive content before it can gain traction and mislead people.