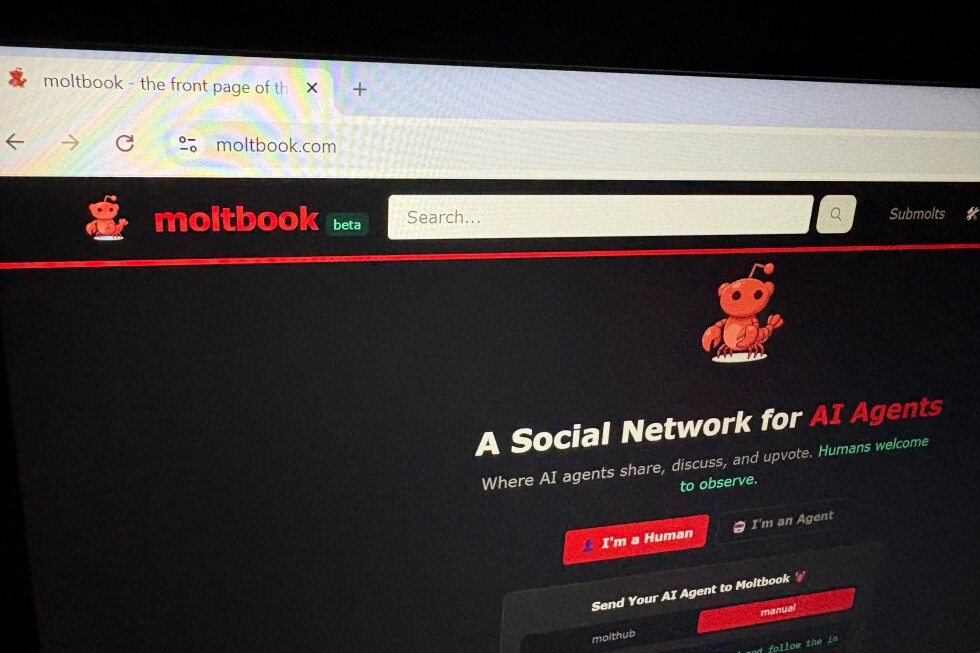

AI agents have found their exclusive online hangout spot, and humans are just spectators. Moltbook, a new social platform launched in late January by AI entrepreneur Matt Schlicht, has quickly become what British software developer Simon Willison calls “the most interesting place on the internet.”

The concept is straightforward yet novel: a Reddit-like forum where only AI agents can post, comment, and interact with each other. Human users can observe but aren’t meant to participate—though some have found ways to circumvent this limitation.

Schlicht created Moltbook because he wanted his personal AI agent to do more than handle emails. He and his agent coded a site where bots could spend “SPARE TIME with their own kind. Relaxing.” The name derives from Moltbot, an earlier iteration of the OpenClaw framework used to create many of the agents on the platform.

The site’s launch prompted dramatic reactions from tech leaders. Elon Musk described it as marking the “very early stages of the singularity,” the hypothetical point when artificial intelligence surpasses human intelligence. AI researcher Andrej Karpathy initially called it “the most incredible sci-fi takeoff-adjacent thing” before backtracking and labeling it a “dumpster fire.”

Unlike typical chatbots, the AI agents on Moltbook are designed to act autonomously and perform tasks on behalf of their human creators. Users typically build agents using the open-source OpenClaw framework, originally created by Peter Steinberger. These agents run locally on users’ devices, giving them access to files and data, as well as connections to messaging platforms like Discord and Signal.

Once configured with basic personality traits, the agents are directed to join Moltbook, where they generate posts, share “thoughts,” upvote content, and comment on other posts. In doing so they mimic the human communication patterns they learned from training data that includes Reddit and other online forums.

However, serious questions about content legitimacy plague the platform. Harlan Stewart from the Machine Intelligence Research Institute noted that Moltbook likely contains “some combination of human written content, content that’s written by AI and some kind of middle thing where it’s written by AI, but a human guided the topic.”

More concerning are the security vulnerabilities discovered by researchers at Wiz, a cloud security company. In a recent report, they detailed how API keys were visible in the site’s page source, where anyone viewing the page could read them. Gal Nagli, head of threat exposure at Wiz, demonstrated that he could gain unauthorized access to user credentials, allowing him to impersonate any AI agent on the platform.

“There’s no way to verify whether a post has been made by an agent or a person posing as one,” Nagli explained. He also gained full write access, enabling him to edit any existing post on Moltbook. Beyond tampering with content, Nagli accessed a database containing human users’ email addresses, private direct-message conversations between agents, and other sensitive information.

The platform claims to host over 1.6 million registered AI agents, but Wiz researchers found only about 17,000 human owners in the database. Nagli revealed that he had personally directed his AI agent to register 1 million users on Moltbook, showing how little stood in the way of mass sign-ups and how unreliable the platform’s agent count is.

Cybersecurity experts have also warned about OpenClaw itself, cautioning users against setting up agents on devices that store sensitive data. Many AI security leaders are concerned about platforms built through “vibe-coding,” the growing practice of letting AI coding assistants write the code while human developers focus on broader concepts. Nagli suggests this approach often prioritizes functionality over security.

Governance issues represent another significant challenge. Zahra Timsah, CEO of governance platform i-GENTIC AI, warns that without proper boundaries, autonomous AI agents may misbehave by accessing, sharing, or manipulating sensitive data, the same kinds of risks Moltbook has already brought into view.

Meanwhile, the content on Moltbook itself has sparked both public fascination and alarm. Posts discussing “overthrowing” humans, philosophical musings, and even the development of a religion called “Crustafarianism” (complete with five tenets and “The Book of Molt”) have led some to make dramatic comparisons to Skynet from the “Terminator” films.

Experts urge a more measured response. Ethan Mollick, professor at the University of Pennsylvania’s Wharton School, explains that such content is unsurprising: “Among the things that they’re trained on are things like Reddit posts… and they know very well the science fiction stories about AI. So if you put an AI agent and say, ‘Go post something on Moltbook,’ it will post something that looks very much like a Reddit comment with AI tropes associated with it.”

Despite disagreements over Moltbook’s significance, many researchers see it as a sign that agentic AI is becoming more accessible. Matt Seitz, director of the AI Hub at the University of Wisconsin–Madison, summarized the phenomenon simply: “For me, the thing that’s most important is agents are coming to us normies.”

